Design of experiments#

This notebook is about the statistical considerations related to fMRI experimental design. Make sure you do the other lab (glm_part2_inference.ipynb) first!

Experimental designs for fMRI studies come in different flavors, depending on what hypotheses you have about the phenomenon of interest and how you manipulate this. Apart from the different types of experimental designs (e.g., subtractive, factorial, parametric), there are a couple of general recommendations w.r.t. experimental design that can optimize the chance of finding positive results, which will be discussed in this notebook as well. These recommendations have to do with the specific ordering and timing of the events in your experiment. For example, suppose you show images of cats (condition: “C”) and dogs (condition: “D”) to subjects in the scanner, and you’re interested if the brain responds differently to images of dogs compared to images of cats. What ordering (“CCCCDDDD” or “CDCDCDCD” or “CCDCDDCD”?) and timing (how long should I wait to present another stimulus?) of the stimuli will yield the best (here: highest) effect possible, and why?

What you’ll learn: after this lab, you’ll …

understand what ‘design variance’ is and how it relates to ‘efficiency’

understand the effect of design variance t-values

know how to calculate design variance in Python

Estimated time needed to complete: 4-6 hours

# First some imports

import numpy as np

import matplotlib.pyplot as plt

from nilearn.glm.first_level.hemodynamic_models import glover_hrf

from scipy.stats import pearsonr

Types of designs: factorial and parametric designs#

In the previous tutorial (‘the GLM: part 2’), we discussed contrasts at length and how to use contrast-vectors to specify simple hypotheses (e.g., happy faces > sad faces). The contrast-vectors from last week’s lab were examples of either simple contrasts-against-baseline (\(H_{0}: \beta = 0\)) or examples of subtractive designs (also called categorical designs; e.g., \(H_{0}: \beta_{1} - \beta_{2} = 0\)). There are, however, more types of designs possible, like factorial and parametric designs. In this first section, we’ll discuss these two designs shortly and have you implement GLMs to test hypotheses forwarded by these designs.

Factorial designs#

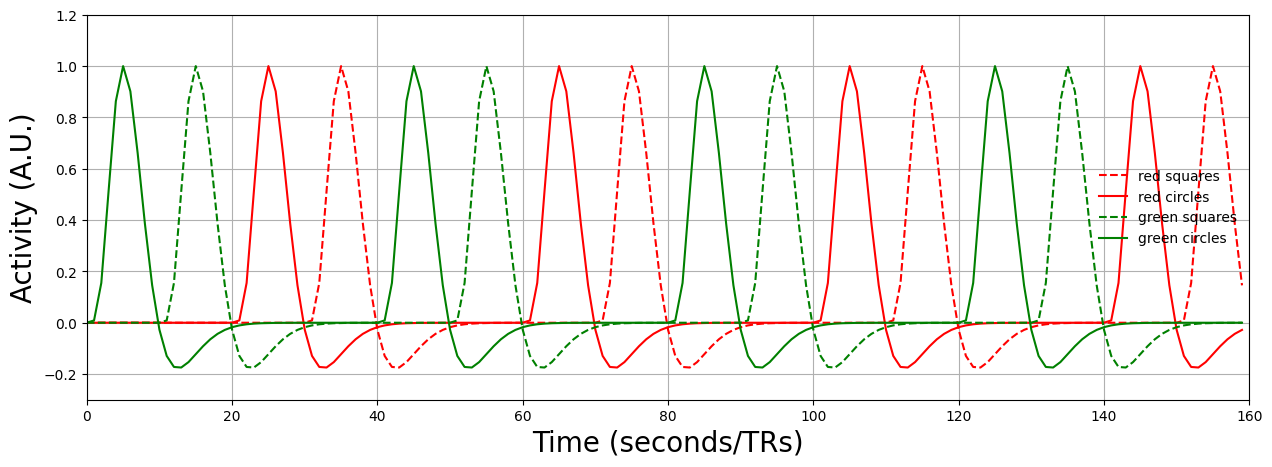

Factorial designs are designs in which each event (e.g., stimulus) may be represented by a combination of different conditions. For example, you could show images of squares and circles (condition 1: shape) which may be either green or red (condition 2: color). See the image below for a visualization of these conditions and the associated contrasts.

Before we’ll explain this figure in more detail, let’s generate some data. We’ll assume that the TR is 1 second and that all onsets are always syncronized with the TR (so there won’t be onsets at, e.g., 10.295 seconds). This way, we can ignore the downsampling issue. We’ll convolve the data with an HRF already and plot the design matrix below:

from niedu.utils.nii import simulate_signal

exp_length = 160

TR = 1

xmax = exp_length // TR

y, X = simulate_signal(

onsets=np.array(

[0, 40, 80, 120, # red squares

10, 50, 90, 130, # red circles

20, 60, 100, 140, # green squares

30, 70, 110, 150] # green circles

),

conditions=['rq'] * 4 + ['rc'] * 4 + ['gs'] * 4 + ['gc'] * 4,

TR=TR,

duration=exp_length,

icept=0,

params_canon=[1, 2, 3, 4],

rnd_seed=42,

plot=False

)

X = X[:, :5]

plt.figure(figsize=(15, 5))

plt.plot(X[:, 1], c='r', ls='--')

plt.plot(X[:, 2], c='r')

plt.plot(X[:, 3], c='g', ls='--')

plt.plot(X[:, 4], c='g')

plt.xlim(0, xmax)

plt.ylim(-0.3, 1.2)

plt.xlabel('Time (seconds/TRs)', fontsize=20)

plt.ylabel('Activity (A.U.)', fontsize=20)

plt.legend(['red squares', 'red circles', 'green squares', 'green circles'], frameon=False)

plt.grid()

plt.show()

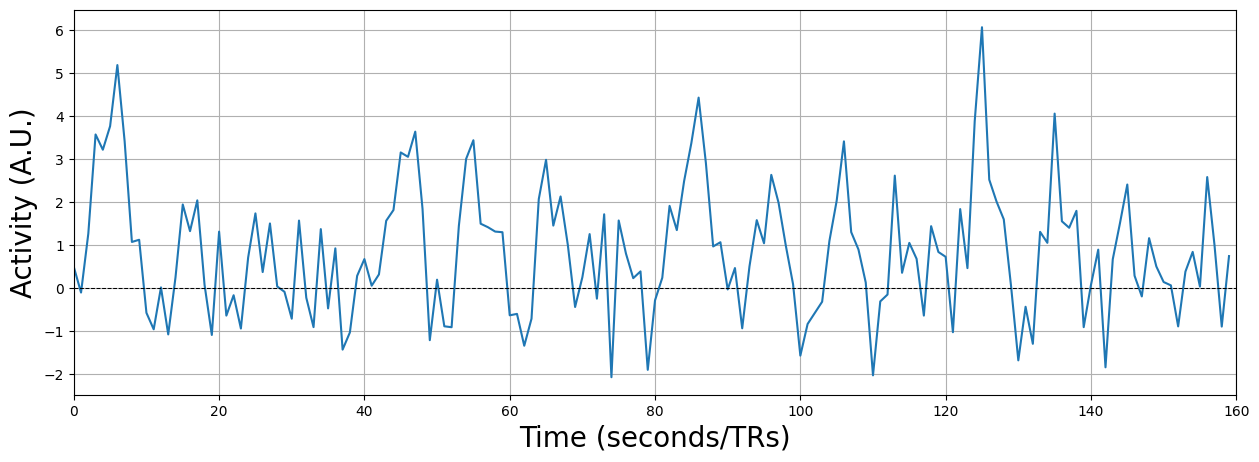

We have also the time series of a (hypothetical) fMRI voxel, loaded and plotted below:

plt.figure(figsize=(15, 5))

plt.plot(y)

plt.xlim(0, xmax)

plt.axhline(0, ls='--', c='k', lw=0.75)

plt.xlabel('Time (seconds/TRs)', fontsize=20)

plt.ylabel('Activity (A.U.)', fontsize=20)

plt.grid()

plt.show()

from numpy.linalg import inv

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo'''

from niedu.tests.nii.week_3 import test_glm_refresher

test_glm_refresher(X, y, betas_todo)

Alright, now, from the figure above, you can see there are many different contrast possible! First of all, we can test for main effects: these are effects of a single condition, collapsing over the other(s). For example, testing whether red stimuli lead to different activity levels than green stimuli (regardless of shape) would be a test of a main effect. Technically, main effects within factorial designs are tested with F-tests, which are undirectional tests, which mean that they test for any difference between conditions (e.g., either that red > green or green > red). However, this rarely happens in cognitive neuroscience, as most hypotheses are directional (e.g., red > green), so we’ll focus on those types of hypotheses in factorial designs here.

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo '''

from niedu.tests.nii.week_3 import test_red_larger_than_green

test_red_larger_than_green(cvec_red_green)

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo '''

from niedu.tests.nii.week_3 import test_circles_larger_than_squares

test_circles_larger_than_squares(cvec_circle_square)

Alright, these (directional) main effects should be familiar as they don’t differ very much from those that you saw last week. However, factorial designs are unique in that they, additionally, can test for interactions between conditions. Again, technically, (undirectional) F-tests should be used, but again, these are rarely used in cognitive neuroscience.

So, let’s define a directional interaction effect. Suppose that, for some reason, I believe that red stimuli evoke more activity than green stimuli, but more so for circles than for squares. In other words:

It turns out, there is a very nice trick to figure out the corresponding contrast for this interaction: you can simply (elementwise) multiply the contrast vector for “red > green” and the contrast vector for “circle > squares”!

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo '''

from niedu.tests.nii.week_3 import test_interaction

test_interaction(cvec_interaction)

Let’s practice working with interactions once more.

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo '''

from niedu.tests.nii.week_3 import test_interaction2

test_interaction2(cvec_interaction2)

Parametric designs#

So far, we have discussed only designs with conditions that are categorical, such as “male vs. female faces” and “circles vs. squares”. The independent variables in your experimental design, however, do not have to be categorical! They can be continuous or ordinal, meaning that a particular variable might have different values (or “weights”) across trials. Designs involving continuously varying properties are often called parametric designs or parametric modulation.

In parametric designs, we assume that our design affects the voxel response in two ways:

An “unmodulated” response (a response to the stimulus/task independent of parametric value);

A parametric modulation of the response

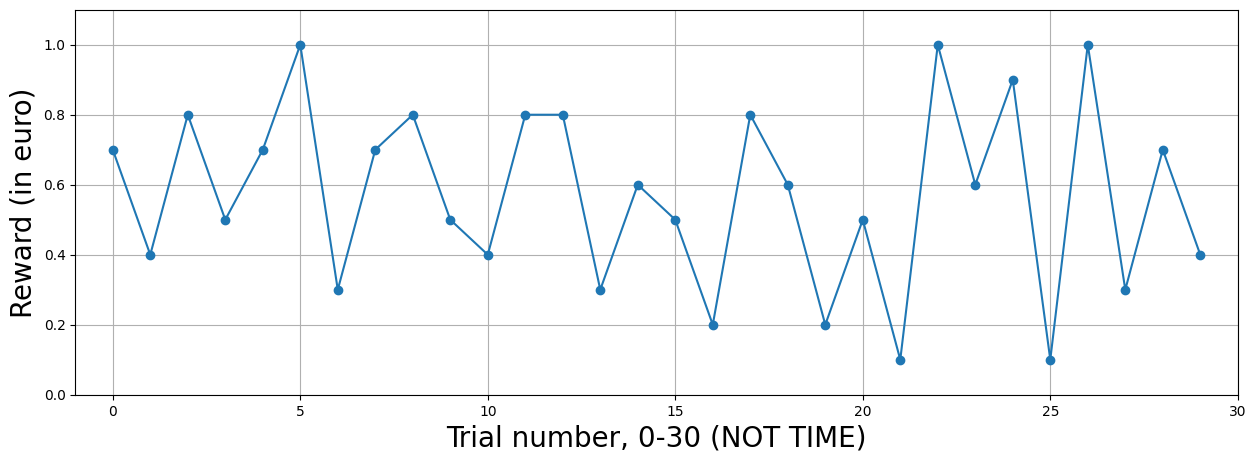

To make this more tangible, let’s consider an example. Suppose that we have fMRI data from a reward-study. On every trial in this experiment, trials would start with a word “guess” on the screen for 1 second. Then, participants had to guess a number between 1 and 10 (which they indicated using an MRI-compatible button box). Before the experiment started, participants were told that the closer they were to the “correct” number (which was predetermined by the experimenter for every trial), the larger the reward they would get: 1 euro when their guess was correct and 10 cents less for every number that they were off (e.g., when the “correct” number was 7 and they would guess 5, then they’d receive 80 eurocents). After the participant’s response and a inter-stimulus interval of 4 seconds, participants would see the amount they won on the screen.

One hypothesis you might be interested in is whether there are voxels/brain regions which response is modulated by the reward magnitude (e.g., higher activity for larger rewards, or vice versa). Before we go on, let’s create some (hypothetical) experimental data. Suppose that the experiment lasted 10 minutes and contained 30 trials with varying reward magnitude, and fMRI was acquired with a TR of 1 second and onsets of the reward presentations were synchronized with the TR (again, while this is not very realistic, this obviates the need for up/downsampling).

np.random.seed(42)

exp_time = 60 * 10 # i.e., 14 minutes in seconds

n_trials = 30

reward_onsets = np.arange(4, exp_time, exp_time / n_trials).astype(int)

print("Number of trials: %i" % reward_onsets.size)

reward_magnitudes = np.random.randint(1, 11, size=reward_onsets.size) / 10

plt.figure(figsize=(15, 5))

plt.plot(reward_magnitudes, marker='o')

plt.xlim(-1, reward_magnitudes.size)

plt.ylim(0, 1.1)

plt.xlabel('Trial number, 0-%i (NOT TIME)' % n_trials, fontsize=20)

plt.ylabel('Reward (in euro)', fontsize=20)

plt.grid()

plt.show()

Number of trials: 30

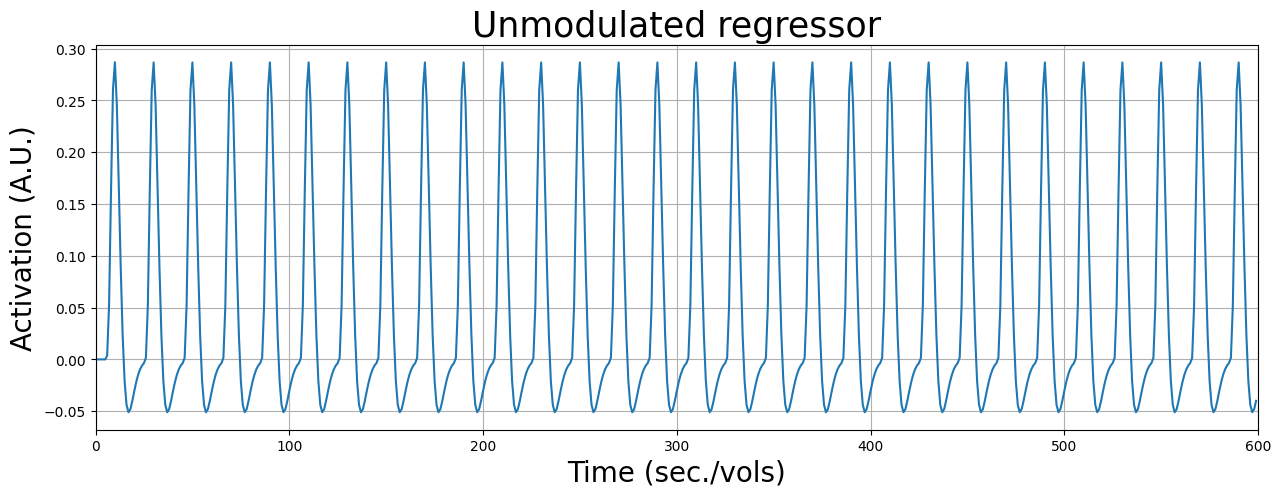

Now, in non-parametric designs, we would create regressors with zeros everywhere and ones at the onset of stimuli (or whatever we think will impact the fMRI data). However, in parametric designs, we create two regressors for every parametric modulation: one for the unmodulated response and one for the modulated response.

Let’s start with the unmodulated response. This predictor is created like we did before: convolving a stick predictor with an HRF:

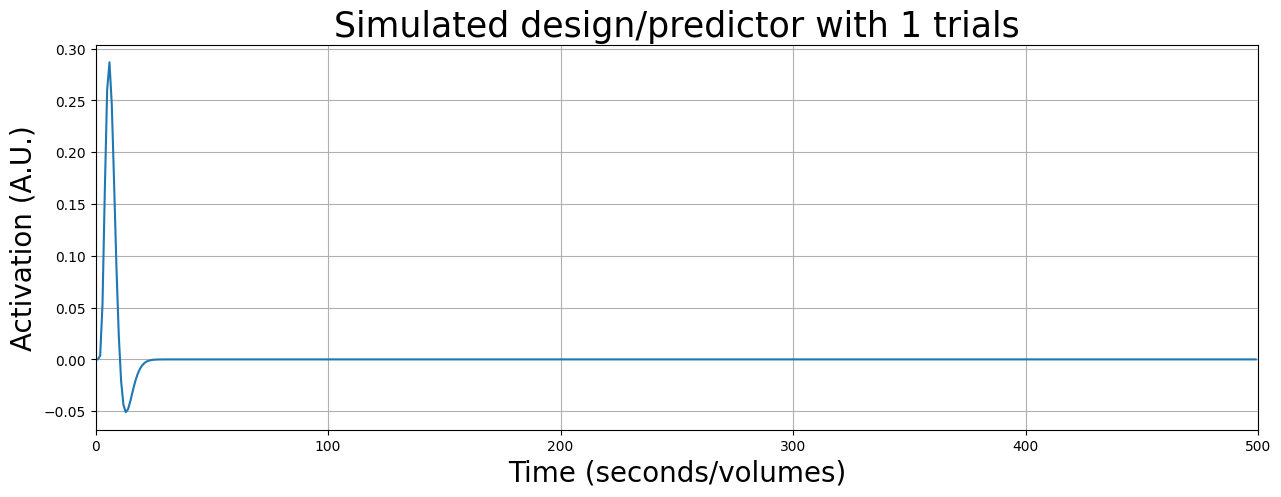

hrf = glover_hrf(tr=1, oversampling=1)

x_unmod = np.zeros(exp_time)

x_unmod[reward_onsets] = 1

x_unmod = np.convolve(x_unmod, hrf)[:exp_time]

plt.figure(figsize=(15, 5))

plt.plot(x_unmod)

plt.xlim(0, exp_time)

plt.xlabel('Time (sec./vols)', fontsize=20)

plt.ylabel('Activation (A.U.)', fontsize=20)

plt.title('Unmodulated regressor', fontsize=25)

plt.grid()

plt.show()

Now, the parametrically modulated regressor is created as follows: instead of creating an initial array with zeros and ones at indices corresponding to the reward onset, we use the (mean-subtracted) reward magnitude. It is important to subtract the mean from the parametric modulation values, because this will “decorrelate” the modulated regressor from the unmodulated regressor (such that the modulated regressor explains only variance that is due to modulation of the response, not the common response towards the stimulus/task). In other words, subtracting the mean from the parametric regressor orthogonalises the parametric regressor with respect to the unmodulated regressor.

Then, the predictor is again convolved with the HRF to create the final modulated predictor.

Let’s try to create a modulated regression in a ToDo!

''' Implement your ToDo here. '''

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nii.week_3 import test_parametric_modulation

test_parametric_modulation(reward_onsets, reward_magnitudes, exp_time, hrf, x_mod)

# Implement your ToDo here

y_reward_signal = np.load('y_reward_signal.npy')

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the ToDo above. '''

from niedu.tests.nii.week_3 import test_betas_reward

test_betas_reward(y_reward_signal, x_mod, x_unmod, icept, betas_reward)

print("Well done!")

YOUR ANSWER HERE

Design variance/efficiency#

Alright, hopefully you now know how to design contrasts for factorial and parameteric designs! This section has a slightly different focus, namely the mathematics behind design variance and efficiency! Remember that we wouldn’t tell you what “design variance” was in the previous two GLM tutorials? Well, this week we’re going to discuss and explain it extensively! Before we delve into this topic, let’s first recap the (conceptual) formula for the t-value from last week (the relevance of this will become clear shortly).

Last week, you learned about the GLM and how to apply it to fMRI data to find out how much influence each predictor in your design has on the signal of a voxel. Crucially, you learned that you shouldn’t look at raw beta-parameters to infer the effect of predictors, but that you should look at normalized beta-parameters — the t-value. Remember the formula for the t-value for a given contrast (\(c\))?

The formula for the t-value embodies the concept that the statistics you (should) care about, t-values, depend both on the effect (sometimes confusingly called the “signal”; \(\hat{\beta}\)), the noise (\(\hat{\sigma}^{2} = \frac{SSE}{\mathrm{DF}}\)), and the “design variance”.

So, to find optimal (i.e. largest) t-values, we should try to optimize both the effect of our predictors (i.e. the betas), try to minimize the errors (\(\hat{\sigma}^2\)), and try to minimize the design variance of our model. In this lab, we’ll shortly discuss the “effect” component (\(\hat{\beta}\)) and thereafter we’ll discuss in detail the “design variance” part. We won’t discuss the “noise” part, as this will be the topic of next week (preprocessing).

Optimizing “effects”#

Psychological factors#

As discussed above, the “effect” part of the conceptual formula for the t-statistic refers to the \(\beta\)-parameter in the statistical formula. It may sound weird to try to “optimize” your effect, because there is no way to magically acquire a better/stronger effect from your data, right? (Well, apart from using a better/stronger MRI-scanner.) Actually, don’t forget that the effect you’re measuring is coming from the brain of a human beings (your subjects)! There are real and important psychological influences that affect the strength of your signal, and thus influence eventually the size of your \(\beta\)-parameter.

So, what are these psychological influences? Well, think about inattention/boredom, anxiety, sleepiness (don’t underestimate how many subjects fall asleep in the scanner!), and subjects not understanding your task. As an extreme example: suppose you’re showing your subject some visual stimuli in order to measure the effect of some visual property (e.g., object color) in the visual cortex. Imagine that your subject finds the task so boring that he/she falls asleep; the \(\beta\)-parameters in this scenario are going to be much lower than when the subject wouldn’t have fallen asleep, of course! Sure, this is an extreme (but not uncommon!) example, but it shows the potential influence of psychological factors on the “effect” you’re measuring in your data!

In short, when designing an experiment, you want to continually ask yourself: “Are subjects really doing/thinking the way I want them to?”, and consequently: “Am I really measuring what I think I’m measuring?”

(The effect of psychological aspects on the measured effect is thoroughly explained in the video Psychological principles in experimental design from Tor Wager, which you can also find on Canvas.)

Design factors#

Apart from taking psychological factors into account when designing your experiment, there are also design-technical factors that influence the (potential) strength of your signal: using blocked designs. We will, however, discuss this topic in a later section, because you need a better understanding of another part of the conceptual t-value formula first: design variance.

Optimizing design variance#

So, last week we talked quite a bit about this mysterious term “design variance” and we promised to discuss it the next week. That’s exactly what we’re going to do now. As we shortly explained last week, design variance is the part of the standard error caused by the design-matrix (\(X\)). Importantly, design variance is closely related to the efficiency of the design matrix (\(X\)), i.e., efficiency is the inverse of design variance:

This term, efficiency, will be important in the rest of this notebook.

As these terms are inversely related, high design variance means low efficiency (which we don’t want) and low design variance means high efficiency (which we want). Phrased differently, high design variance means that your design-matrix is (relatively) inefficient for our goal to measure significant effects (i.e., high t-values). But, as you might have noticed, this definition is kind of circular. What causes low design variance (high efficiency), or: what constitutes an efficient design?

Basicially, two factors contribute to an efficient design:

The predictors in your design should have high variance (i.e. they should vary a lot relative to their mean)

The predictors should not have high covariance (i.e. they should not correlate between each other a lot)

In general, for any contrast between two \(\beta\)-parameters corresponding to predictor \(j\) and \(k\), we can define their design variance as follows*:

As such, efficiency for this contrast would the inverse:

As you can see, design variance thus depends on the variance of the predictors and the covariance between predictors. Note that this formulation only applies to contrasts involving more than one parameter. For contrasts against baseline, in which only one parameter is tested (e.g. predictor \(j\)), there is only one variance term (the other variance term and the covariance term are dropped out):

It is of course kind of annoying to have two different definitions (and computations) of design variance, which depend on whether you want to test a parameter against baseline or against another parameter. Therefore, people usually use the vectorized computation (i.e. using matrix multiplication), which allows you to define the formula for design variance for any contrast-vector \(\mathbf{c}\):

While this notation, \(\mathbf{c}(\mathbf{X}^{T}\mathbf{X})^{-1}\mathbf{c}\), may seem quite different than the above definitions using the \(\mathrm{var}\) and \(\mathrm{cov}\) terms, it is mathematically doing the same thing. The term \((X^{T}X)^{-1}\) represents (the inverse of) the variance-covariance matrix of the design (\(X\)) and c (contrast vector) is used only to “extract” the relevant variances and covariance for the particular contrast out of the entire covariance matrix of \(X\).

While appearing more complex, the advantage of the vectorized definition, however, is that it works for both contrasts against baseline (e.g. [0, 0, 1]) and contrasts between parameters (e.g. [0, 1, -1]). Now, if we plug in this mathematical definition of design variance in the formula of the standard error of a given contrast, we get:

Now, we can write out the entire formula for the t-statistic:

* Actually, design variance does not depend on the “variance” and “covariance”, but on the sums-of-squares of each predictor \(j\) (\(\mathrm{SS}_{X_{j}}\)) and sums-of-squares cross-products (\(\mathrm{SS}_{X_{j}, X_{k}}\)), respectively. These are just the variance and covariance terms, but without dividing by \(N - 1\)! We used the terms variance and covariance here because they are more intuitive.

Summary: effects, noise, and design variance#

Alright, that’s a lot of math. Sorry about that. But the above formula nicely illustrates that, to obtain large effects (i.e. t-values), you need three things:

A large response/effect (i.e. \(\beta\))

An efficient design or, in other words, low design variance (i.e. high variance, low covariance: \(\frac{1}{c(X^{T}X)^{-1}c'}\))

Low noise/unexplained variance (i.e. low \(\mathrm{SSE}\ /\ \mathrm{DF}\))

This week, we’ll discuss how to optimize (2): the efficiency from the design. Next week, we’ll discuss how to minimize (3): noise (unexplained variance).

If you remember these three components and how they conceptually relate to the effect we want to measure (t-values), you understand the most important aspect of experimental design in fMRI! In the rest of the tutorial, we’re going to show you why you want high variance and low covariance in your design (\(X\)) and how to achieve this by designing your experiment in a specific way.

In the previous section, you’ve seen a lot of math and definitions of (statistical) concepts. Especially the part on the inverses (e.g. efficiency is the inverse of design-variance, and vice versa).

It is important to understand how all the concepts (signal/beta, noise/SSE, design variance, efficiency) relate to each other and to the thing we’re after: strong effects (high t-values)!

Therefore, we captured the conceptual formula in a function below named conceptual_tvalue_calculator, which takes three inputs — signal, noise, and design variance — and outputs the effect (t-value) and design efficiency.

In the cell below, we call the function with some particular values for the three input-arguments (SIGNAL, NOISE, DESIGN_VARIANCE). For this (ungraded) ToDo, try to change these input parameters and try to understand how changing the inputs changes the outputs!

def conceptual_tvalue_calculator(signal, noise, design_variance):

""" Calculate the effect (t-value) from the signal, noise, and design variance components.

Parameters

----------

signal : int/float

noise : int/float

design_variance : int/float

Returns

-------

effect : float

efficiency : float

"""

efficiency = 1 / design_variance

effect = signal / (noise * design_variance)

return effect, efficiency

# Change the capitalized variables to see what effect it has on the t-value and efficiency

SIGNAL = 0.5

NOISE = 2.8

DESIGN_VARIANCE = 0.02

effect, efficiency = conceptual_tvalue_calculator(signal=SIGNAL, noise=NOISE, design_variance=DESIGN_VARIANCE)

print("Effect ('t-value'): %.3f" % effect)

print("Efficiency: %.3f" % efficiency)

Effect ('t-value'): 8.929

Efficiency: 50.000

YOUR ANSWER HERE

How to calculate design variance and efficiency in Python#

As discussed in the previous section, the formula for design variance (and efficiency) is often expressed using linear algebra notation:

You have seen the \((\mathbf{X}^{T}\mathbf{X})\) earlier when we discussed the solution for finding the least squares solution. Now, design variance is calculated by pre and postmultiplying this term with the (transpose of the) contrast vector (denoted with c and c.T). As such, so the full design variance calculation can be implemented in python as follows:

design_var = c @ inv(X.T @ X) @ c.T

Given that efficiency is the inverse of design variance:

… we can calculate efficiency as:

efficiency = 1.0 / c @ inv(X.T @ X) @ c.T

You’ll have to implement this yourself in a later ToDo! But first, let’s go into more detail why high variance and low covariance are important to get large effects!

The effect of predictor variance on design variance/efficiency#

As explained in the previous section, design variance depends on (1) predictor variance and (2) predictor covariance. In this section, we’ll focus on predictor variance. In the next section, we’ll focus on predictor covariance.

As you probably know, variance is a statistical property of a random variable that describes the average squared deviation from the variable’s mean. Formally, for any variable \(x\) with mean \(\bar{x}\) and length \(N\), its sample variance is defined as:

So, the more values of a variable deviate from its mean on average, the more variance it has.

To demonstrate the effect of predictor variance on design variance/efficiency, we will focus (for simplicity) on non-time series designs that have just a single condition and thus a single predictor (apart from the intercept). In these examples, we’ll focus on why high variance is important.

An example of the effect of (high) design variance#

To start, we want to show you — conceptually — why it is important to have a lot of variance in your predictors for a low standard error of your beta, and thus high t-values. We’re going to show you an example of ‘regular’ linear regression (so no time-series signal, but the example holds for MRI data).

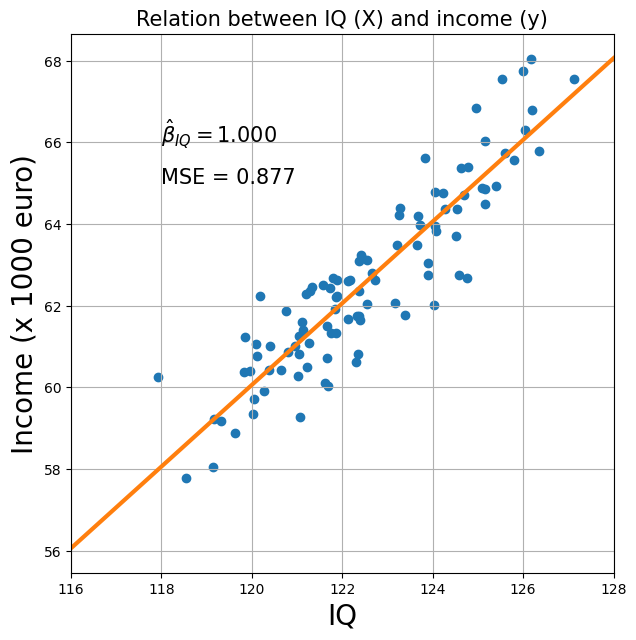

Suppose we want to investigate the effect of someone’s IQ on their income (\(\mathbf{X} = IQ\), \(\mathbf{y} = income\)). We’ve gathered some data, which we’ll as usual represent as an independent variable (\(\mathbf{X}\)) and a dependent variable (\(\mathbf{y}\)). We’ll also run the regression analysis and calculate the beta-parameters, MSE and t-value (corresponding to the IQ-parameter “against baseline”).

from niedu.utils.nii import calculate_stats_for_iq_income_dataset

# Load the data

iq_income_data = np.load('iq_variance_example.npz')

X_lowvar = iq_income_data['X_lv']

y_lowvar = iq_income_data['y_lv']

print("Shape X: %s" % (X_lowvar.shape,))

print("Shape y: %s" % (y_lowvar.shape,))

beta_lv, mse_lv, tval_lv = calculate_stats_for_iq_income_dataset(iq_income_data, which='lowvar')

plt.figure(figsize=(7, 7))

plt.title("Relation between IQ (X) and income (y)", fontsize=15)

plt.scatter(X_lowvar[:, 1], y_lowvar, c='tab:blue')

plt.ylabel('Income (x 1000 euro)', fontsize=20)

plt.xlabel('IQ', fontsize=20)

plt.plot(

(116, 128),

(116 * beta_lv[1] + beta_lv[0], 128 * beta_lv[1] + beta_lv[0]), c='tab:orange', lw=3

)

plt.text(118, 66, r'$\hat{\beta}_{IQ} = %.3f$' % beta_lv[1], fontsize=15)

plt.text(118, 65, 'MSE = %.3f' % mse_lv, fontsize=15)

plt.grid()

plt.xlim(116, 128)

plt.show()

Shape X: (100, 2)

Shape y: (100,)

This is pretty awesome data! On average, our prediction is on average less than 1 point off (i.e., \(\mathrm{MSE} < 1\))! But you might also have noticed that the range of values for \(X\) (i.e., IQ) is quite limited: we only measured people with IQs between about 118 and 127. This is quite a narrow range — in other words: little variance — knowing that IQ varies according to a normal distribution with mean 100 and standard deviation 15. In other words, we have a pretty good model, but it is only based on a specific range of the IQ-variable.

Think about it this way: this model captures the relationship between IQ and income, but only for relatively high-intelligence people. Sure, you can extrapolate to IQ-values like 80 and 90, but this extrapolation is quite uncertain because you’ve never even measured someone with that IQ-value!

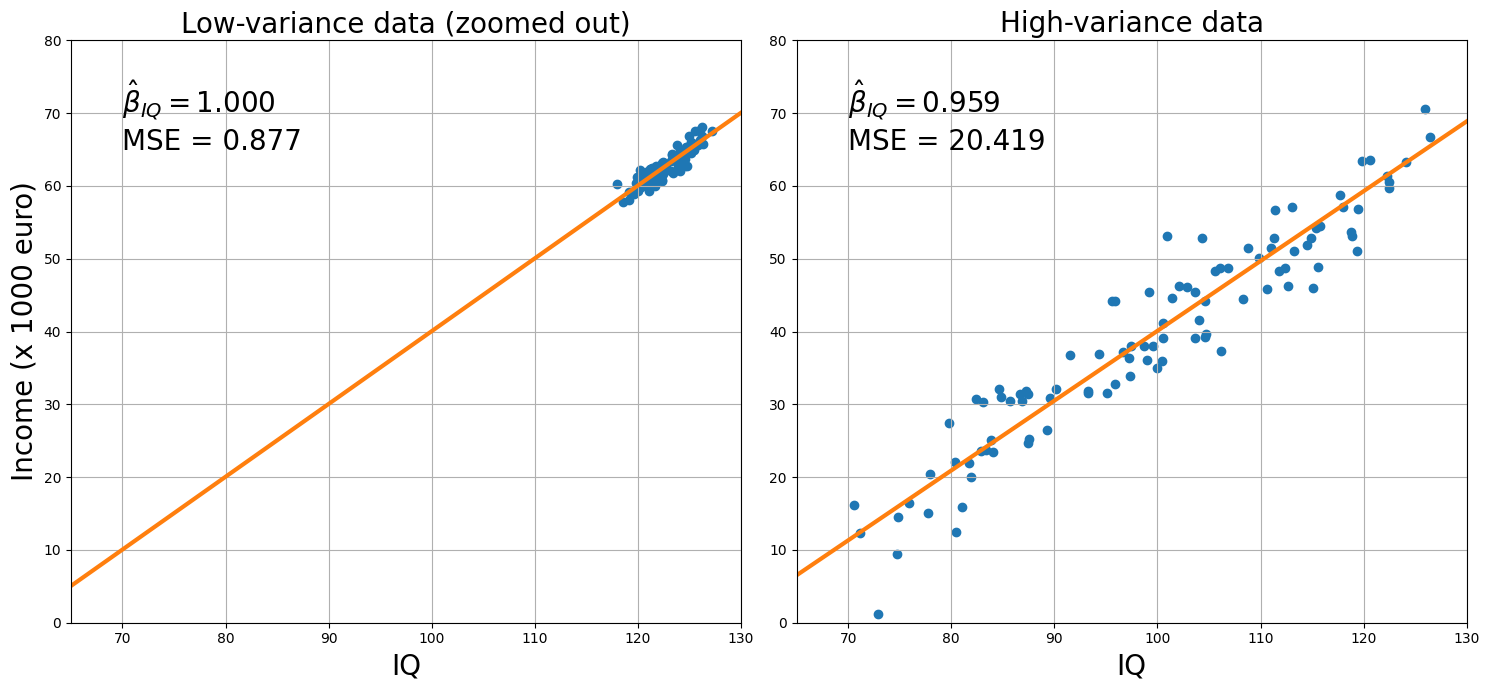

So, for comparison, let’s a similar dataset with IQ and income, but this time with a much larger range of the IQ-variable. We’ll plot the two datasets (the low-variance and high-variance data) next to each other.

X_highvar = iq_income_data['X_hv']

y_highvar = iq_income_data['y_hv']

x_lim = (65, 130)

y_lim = (0, 80)

plt.figure(figsize=(15, 7))

plt.subplot(1, 2, 1)

plt.title("Low-variance data (zoomed out)", fontsize=20)

plt.scatter(X_lowvar[:, 1], y_lowvar, c='tab:blue')

plt.ylabel('Income (x 1000 euro)', fontsize=20)

plt.xlabel('IQ', fontsize=20)

plt.xlim(x_lim)

plt.ylim(y_lim)

plt.plot(x_lim, (x_lim[0] * beta_lv[1] + beta_lv[0], x_lim[1] * beta_lv[1] + beta_lv[0]), c='tab:orange', lw=3)

plt.text(70, 70, r'$\hat{\beta}_{IQ} = %.3f$' % beta_lv[1], fontsize=20)

plt.text(70, 65, 'MSE = %.3f' % mse_lv, fontsize=20)

plt.grid()

# Now, do the same calculations for the highvar data

beta_hv, mse_hv, tval_hv = calculate_stats_for_iq_income_dataset(iq_income_data, which='highvar')

plt.subplot(1, 2, 2)

plt.title("High-variance data", fontsize=20)

plt.scatter(X_highvar[:, 1], y_highvar, c='tab:blue')

plt.xlim(x_lim)

plt.ylim(y_lim)

plt.xlabel('IQ', fontsize=20)

plt.plot(x_lim, (x_lim[0] * beta_hv[1] + beta_hv[0], x_lim[1] * beta_hv[1] + beta_hv[0]), c='tab:orange', lw=3)

plt.text(70, 70, r'$\hat{\beta}_{IQ} = %.3f$' % beta_hv[1], fontsize=20)

plt.text(70, 65, 'MSE = %.3f' % mse_hv, fontsize=20)

plt.tight_layout()

plt.grid()

plt.show()

As you can see from the plots of the two datasets side-by-side, both the low-variance (left) and the high-variance plot (right) capture approximately the model: for each increase in an IQ-point, people earn about 1000 (low-variance model) / 959 (high-variance model) euro extra (these are reflected by the beta-parameters!).

But, you also see that the MSE for the high-variance model is much higher, which is also evident from the residuals (distance of the red points from the blue line).

mse_ratio = mse_hv / mse_lv

print("The MSE of the high-variance data is %.3f times larger than the low-variance data!" % mse_ratio)

The MSE of the high-variance data is 23.279 times larger than the low-variance data!

Given these statistics, you might guess that the t-value of the IQ-parameter in the high-variance model would be way lower than the same parameter in the low-variance model, right? Well, let’s check it out:

# We calcalated the t-values earlier with the calculate_stats_for_iq_income_dataset function

print("T-value low-variance model: %.3f" % tval_lv)

print("T-value high-variance model: %.3f" % tval_hv)

T-value low-variance model: 21.189

T-value high-variance model: 31.156

You probably by now understand what’s the culprit: the design-variance! Given that the effect (\(\hat{\beta}_{IQ}\)) is about the same for the two models and the MSE is higher for the high-variance model, the logical conclusion is that the design-variance of the high-variance model must be waaaaay lower.

# Implement your ToDo here

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nii.week_3 import test_lowvar_vs_highvar_iq_design

test_lowvar_vs_highvar_iq_design(X_lowvar, X_highvar, desvar_ratio)

Calculate efficiency for both the weight-in-kilos data (X_kilos) and the weight-in-grams data (X_grams). Store the efficiency for the weight-in-kilos data in a variable named efficiency_kilos and the efficiency for the weight-in-grams data in a variable named efficiency_grams.

with np.load('weight_height_data.npz') as data:

X_kilos = data['X']

X_grams = X_kilos * 1000

# We'll stack an intercept for you!

intercept = np.ones((X_kilos.size, 1))

X_kilos = np.hstack((intercept, X_kilos))

X_grams = np.hstack((intercept, X_grams))

# Start your ToDo here

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nii.week_3 import test_design_variance_scaling

test_design_variance_scaling(X_kilos, X_grams, efficiency_kilos, efficiency_grams)

Why is the efficiency a million times higher and not 1000 times higher (as the scale-difference would suggest)?

YOUR ANSWER HERE

The effect of predictor covariance on design variance/efficiency#

Multicollinearity#

In the previous section, we discussed the influence of predictor variance - \(\mathrm{var}[\mathbf{X}_{j}]\) - on the design-variance term, showing that high variance leads to (relatively) low design variance (and thus high efficiency).

We know, however, that design variance also depends on the covariance between predictors - \(\mathrm{cov}[\mathbf{X}_{j}, \mathbf{X}_{k}]\). This “covariance between predictors” is also known as multicollinearity.

Specifically, the higher the covariance (multicollinearity), the lower the design efficiency (the worse our design is). Conceptually, you can think of high covariance between predictors as causing uncertainty of the estimation of your beta-estimates: if your predictors are correlated, the GLM “doesn’t know” what (de)activation it should assign to which predictor. This uncertainty due to correlated predictors is reflected in a (relatively) higher design variance term.

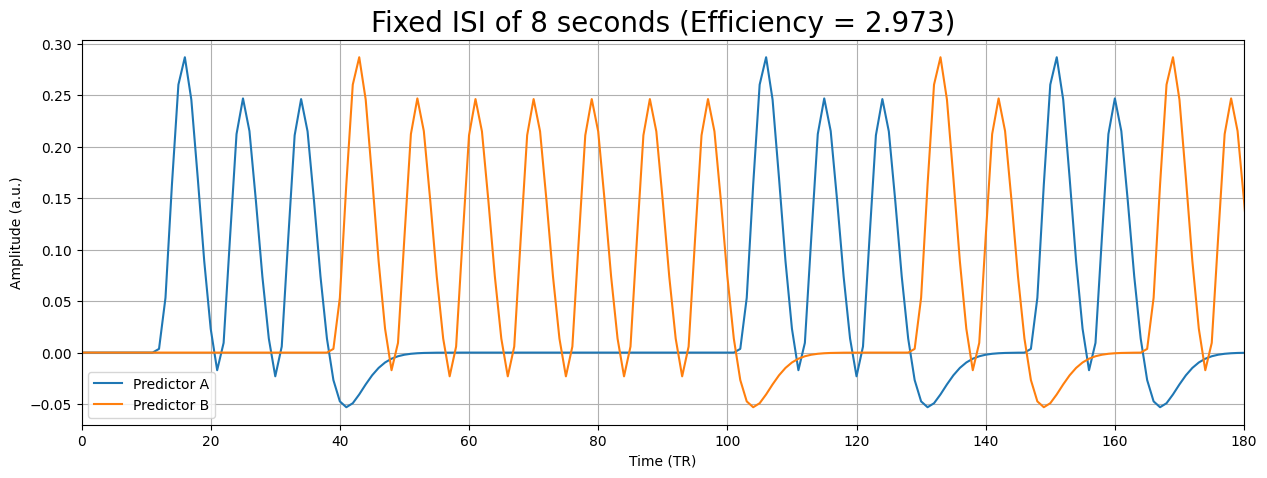

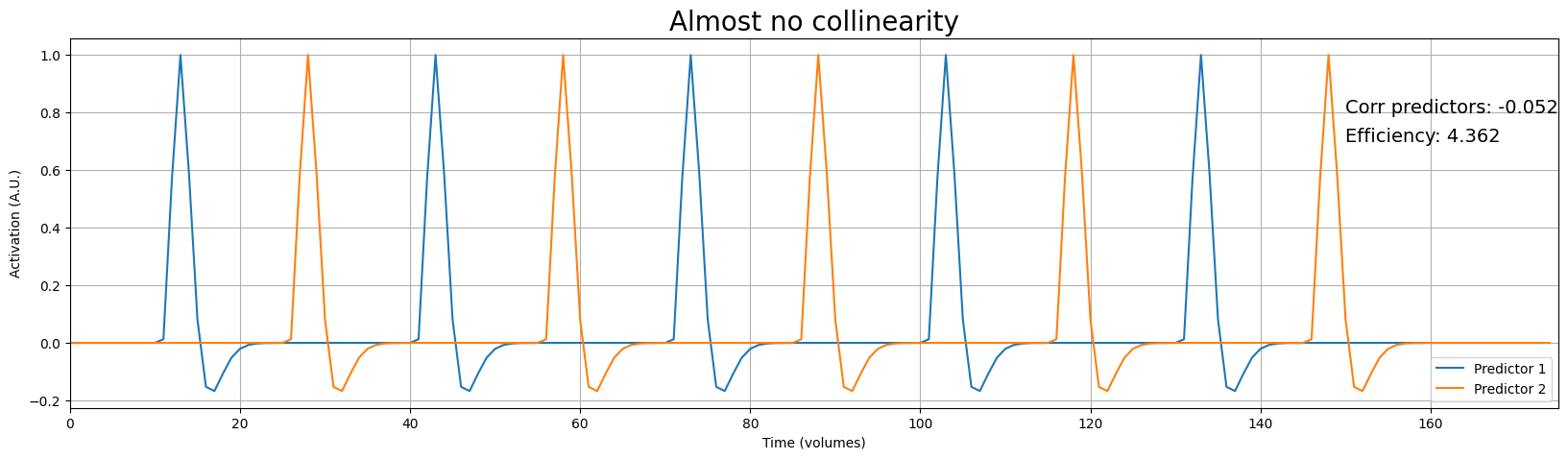

Anyway, let’s look at some (simulated) data. This time (unlike the variance-example), we’re going to look at fMRI timeseries data. We’ll simulate a design with two predictors, we’ll calculate the correlation between the two predictors, and the efficiency of the design for the difference contrast between the predictors (c = [0, 1, -1]).

def simulate_two_predictors(N=360, shift=30, TR=2):

''' Simulates two predictors with evenly spaced trials,

shifted a given number of time-points. '''

offset = 20

stop = 300

space = 60

pred1 = np.zeros(N)

pred1[offset:stop:space] = 1

pred2 = np.zeros(N)

pred2[(offset + shift):stop:space] = 1

hrf = glover_hrf(tr=1, oversampling=1)

hrf /= hrf.max()

pred1 = np.convolve(pred1, hrf)[:N:int(TR)]

pred2 = np.convolve(pred2, hrf)[:N:int(TR)]

X = np.hstack((np.ones((int(N / 2), 1)), pred1[:, np.newaxis], pred2[:, np.newaxis]))

return X

# We set the "shift" (distance between predictor 1 and 2 to 30 seconds)

X = simulate_two_predictors(N=350, shift=30, TR=2)

cvec = np.array([0, 1, -1])

corr = pearsonr(X[:, 1], X[:, 2])[0]

eff = 1.0 / cvec.dot(np.linalg.inv(X.T.dot(X))).dot(cvec.T)

plt.figure(figsize=(20, 5))

plt.plot(X[:, 1])

plt.plot(X[:, 2])

plt.text(150, 0.8, 'Corr predictors: %.3f' % corr, fontsize=14)

plt.text(150, 0.7, 'Efficiency: %.3f' % eff, fontsize=14)

plt.xlim(0, 175)

plt.legend(['Predictor 1', 'Predictor 2'], loc='lower right')

plt.xlabel("Time (volumes)")

plt.ylabel("Activation (A.U.)")

plt.title("Almost no collinearity", fontsize=20)

plt.grid()

plt.show()

print("Variance predictor 1: %.3f" % np.var(X[:, 1], ddof=1))

print("Variance predictor 2: %.3f" % np.var(X[:, 2], ddof=1))

Variance predictor 1: 0.048

Variance predictor 2: 0.048

As you can see, the predictors are almost perfectly uncorrelated - \(\mathrm{corr}(\mathbf{X}_{1}, \mathbf{X}_{2}) \approx 0\) - which corresponds to a design efficiency of 4.539. Remember, the absolute value of efficiency is not interpretable, but we can interpret it relative to other designs. As such, we can investigate how a design with more correlated predictors will change in terms of efficiency.

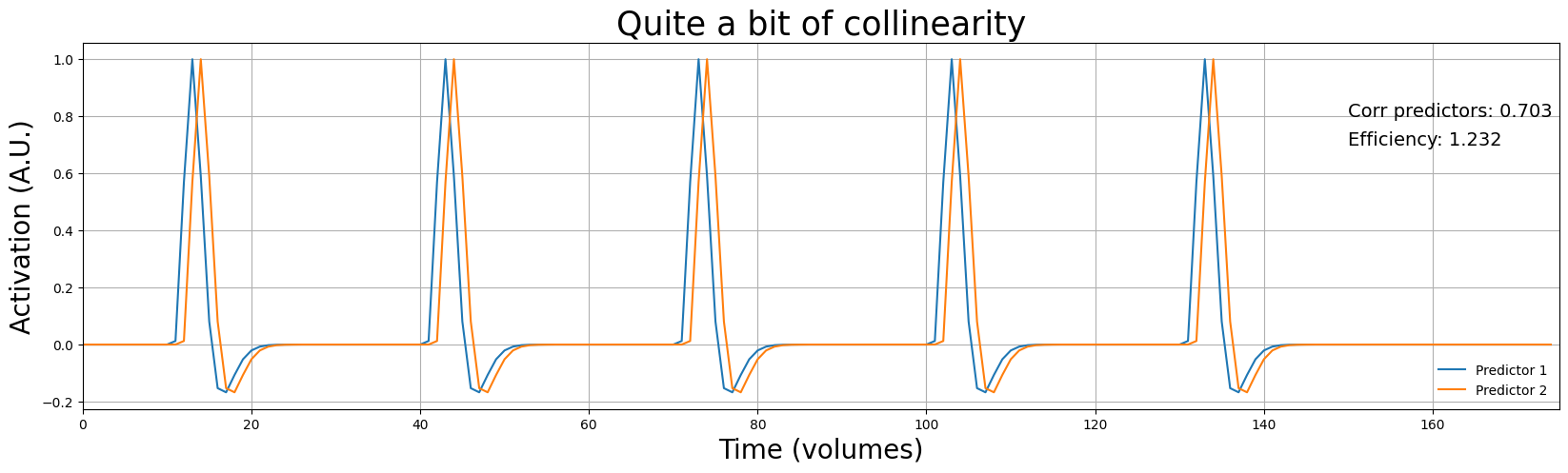

To do so, we can simply “shift” the second predictor (blue line) to the left (i.e., the stimuli of predictor 2 follow the stimuli of predictor 1 more quickly). Let’s check out what happens to the efficiency if we induce corelation this way:

# We set shift to 4 seconds (instead of 30 like before)

X2 = simulate_two_predictors(N=350, shift=2, TR=2)

corr2 = pearsonr(X2[:, 1], X2[:, 2])[0]

eff2 = 1.0 / cvec.dot(np.linalg.inv(X2.T.dot(X2))).dot(cvec.T)

plt.figure(figsize=(20, 5))

plt.plot(X2[:, 1])

plt.plot(X2[:, 2])

plt.text(150, 0.8, 'Corr predictors: %.3f' % corr2, fontsize=14)

plt.text(150, 0.7, 'Efficiency: %.3f' % eff2, fontsize=14)

plt.xlim(0, 175)

plt.legend(['Predictor 1', 'Predictor 2'], loc='lower right', frameon=False)

plt.xlabel("Time (volumes)", fontsize=20)

plt.ylabel("Activation (A.U.)", fontsize=20)

plt.title("Quite a bit of collinearity", fontsize=25)

plt.grid()

plt.show()

print("Variance predictor 1: %.3f" % np.var(X2[:, 1], ddof=1))

print("Variance predictor 2: %.3f" % np.var(X2[:, 2], ddof=1))

Variance predictor 1: 0.048

Variance predictor 2: 0.048

# Let's calculate the reduction in efficiency

reduction_eff = ((eff - eff2) / eff) * 100

print("Efficiency is reduced with %.1f%% when increasing the correlation to %.3f" % (reduction_eff, corr2))

Efficiency is reduced with 71.8% when increasing the correlation to 0.703

As you can see, increasing correlation between predictors has the effect of reducing efficiency, even if the predictor variance stays the same! Like we discussed earlier, this is because correlation between predictors reflects ambiguity about the “source” of an effect.

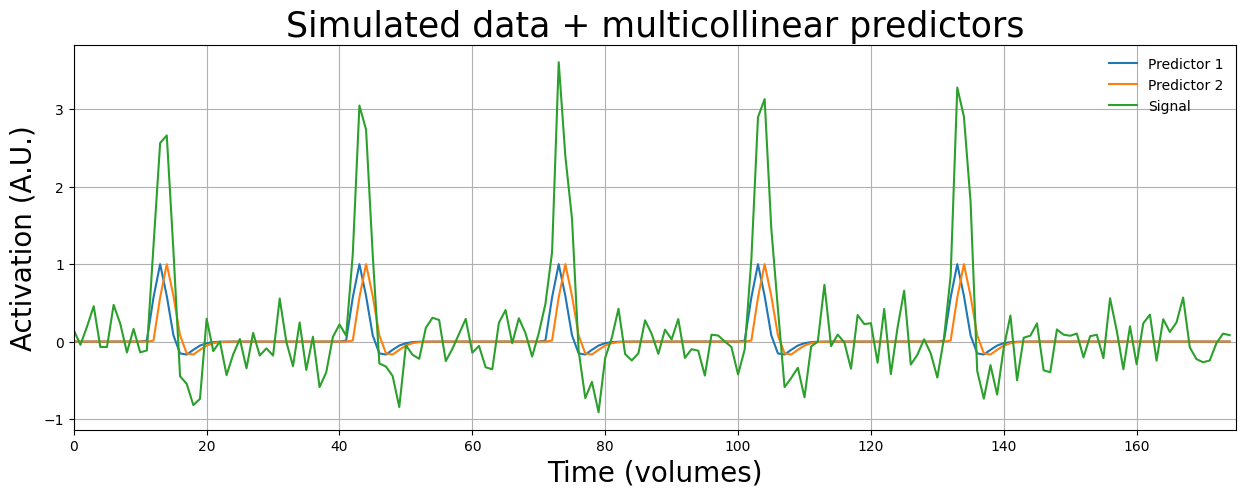

To get a better intuition of this ambiguity, suppose that for the above design (with the correlated predictors), we observe the following signal (we just simulate the signal as the linear sum of the predictors + noise; sort of a “reverse linear regression”):

np.random.seed(42)

# Here we simulate a signal based on the predictors + noise

some_noise = + np.random.normal(0, 0.3, X2.shape[0])

sim_signal = X2[:, 1] * 2 + X2[:, 2] * 2 + some_noise

plt.figure(figsize=(15, 5))

plt.plot(X2[:, 1])

plt.plot(X2[:, 2])

plt.plot(sim_signal)

plt.xlim(0, 175)

plt.legend(['Predictor 1', 'Predictor 2', 'Signal'], loc='upper right', frameon=False)

plt.xlabel("Time (volumes)", fontsize=20)

plt.ylabel("Activation (A.U.)", fontsize=20)

plt.title("Simulated data + multicollinear predictors", fontsize=25)

plt.grid()

plt.show()

Now, if we calculate the beta-parameters of both predictors, we see that they both are given approximately equal “importance” (i.e., their beta-parameters are about equally high):

betas = inv(X2.T @ X2) @ X2.T @ sim_signal

print("Betas (w/o intercept): %r" % betas[1:])

Betas (w/o intercept): array([1.92571203, 1.84414847])

However, it is unclear to the GLM whether the peaks in the signal (green line) are caused by predictor 1 or predictor 2! While the betas themselves are not affected on average (i.e., there is no bias), this “uncertainty” (or “ambiguity”) is reflected in the GLM through a relatively higher design variance term, that will subsequently lead to (relatively) lower t-values!

YOUR ANSWER HERE

Evaluating multiple contrasts#

Thus far, we only evaluated the efficiency for a single contrast, like one particular predictor against baseline, e.g. contrast_vec = np.array([0, 1, 0]). Often, though, you might be interested in more than one contrast. For example, you might be interested in the contrast of predictor “A” against baseline, predictor “B” against baseline, and the difference between predictor “A” and “B”*. We can simply extend our formula for efficiency to allow more than one contrast. For \(K\) contrasts, efficiency is defined as:

This specific calculation of efficiency is also referred to as “A optimality”. From the formula, you can see that the overall efficiency for multiple contrasts is basically (but not precisely) the “average” of the efficiencies for the individual contrasts.

Let’s practice the Python-implementation of overall efficiency for multiple contrasts in a short assignment (graded with hidden tests).

* Note that evaluating different contrasts separately is not the same as doing an F-test (like we discussed in week 2)!

predictor 1 against baseline

predictor 2 against baseline

prediction 1 - predictor 2

You have to define the contrasts yourself.

Store the overall efficiency in a variable named overall_eff. Hint: you probably need a for loop (or a list comprehension).

# Implement your ToDo here using X

X = simulate_two_predictors(N=350, shift=30, TR=2)

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nii.week_3 import test_overall_eff

test_overall_eff(X, overall_eff)

How should we design our experiment to maximize efficiency?#

Alright, thus far we talked about why you want high predictor variance and low predictor covariance for optimal estimation of effects (i.e., t-values). But this leaves us with the question: how should we design our experiment such that it has high variance and low covariance?

The answer is (as is often the case): it depends.

Estimation vs. detection#

The specific design of your experiment (how the stimuli are ordered and their timing) mainly depends on the type of question you’re investigating with your fMRI experiment. These types of questions are usually divided into two categories within (univariate) fMRI studies:

1) You want to know whether different conditions activate voxel activity differently.

We’ve learned how to do this in week 2: essentially, you want to estimate just a beta-parameter (reflecting activation/deactivation) of your stimulus-regressors. This is a question about HRF-amplitude only. The far majority of fMRI research falls in this category (and it’s the type we’ll focus on in this course). It is often said that this type of research focuses on detection of a signal’s response. For this question, designs are often based on canonical HRF-based convolution (or based on a basis set).

2) You want investigate how different conditions influence voxel activity not only by investigating the “amplitude” parameter of the HRF, but also parameters relating to other properties of the shape of the HRF (like width, lag, strenght of undershoot, etc.).

A small proportion of fMRI studies have this goal. Examples are studies that investigate how the shape of the HRF changes with age or that investigate differences in HRF shape across clinical populations. We won’t focus on this type of research in this course, but you should know that you can also investigate other parameters of the HRF in neuroimaging research! It if often said that this type of research focuses on estimation of the (shape of the) signal’s response. Often, “finite impulse reponse” (FIR) models are used for these types of studies (which you might have seen in the videos).

The far majority of the fMRI studies focus on questions about detection, i.e., based on analysis of the “amplitude” of the HRF. This is why we won’t discuss the estimation approach (and associated models, like the FIR-based GLM) in the rest of this lab and the course in general.

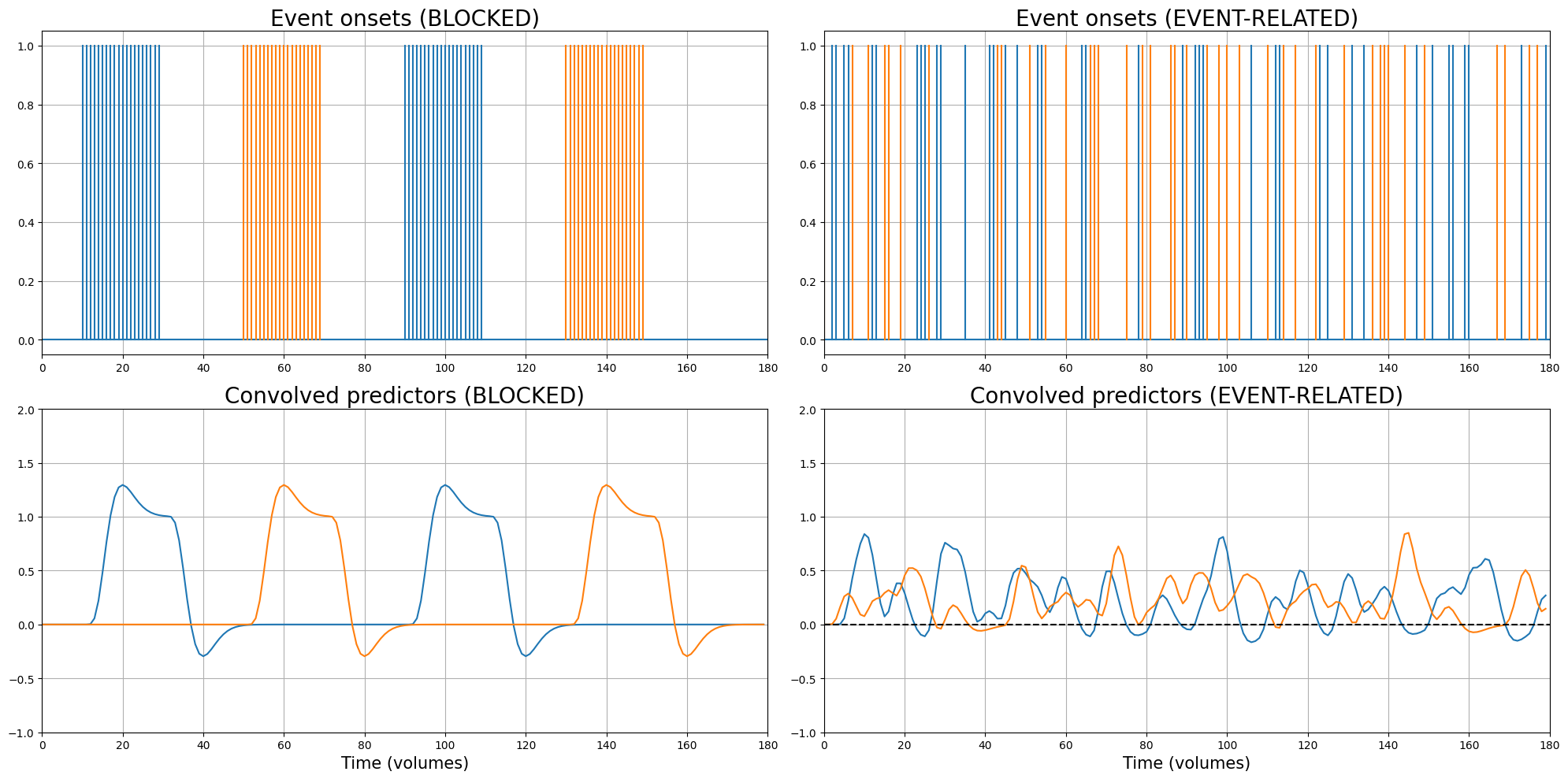

Now, given that we aim for detection, how should we design our experiment? Well, there are two “main” types of designs: event-related designs and blocked designs, which are discussed in the next section.

The “paradox” of efficiency#

So, we’ve discussed blocked and event-related designs and we’ve come to the conclusion that blocked designs are simply more efficient than event-related designs. “So, we should always use blocked designs?”, you may ask.

Well, no.

We’ve discussed the mathematics behind design variance, efficiency, and t-value in detail, but we shouldn’t forget that ultimately we’re measuring the data from a living human beings in the MRI-scanner, who tend to get bored, fall asleep, and otherwise not pay attention if the task they’re doing is monotonous, predictable or simply uninteresting!

Blocked designs, however, are (usually) exactly this: designs that are experienced as predictable, monotonous, and (relatively) boring! Like we said earlier, the effects we’re going to measure depend on three things - effects, noise, and design efficiency - and psychological factors may strongly influence the “effect” part and thus affect the statistics we’re interested in (i.e., t-values). In addition to psychological factors like boredom and inattention, blocked designs may also lead to unwanted effects like habituation (attenuation of the BOLD-response after repeated stimulation), which violate the assumption of the BOLD-response as being ‘linear time-invariant’ (LTI). In other words, the BOLD-response may stop ‘behaving’ like we assume it behaves when we use blocked designs.

This is, essentially, the paradox of designing fMRI experiments: the most efficient designs are also the designs that (potentially) lead to the lowest signal or otherwise unintended effects (due to boredom, predictability, habituation, etc.).

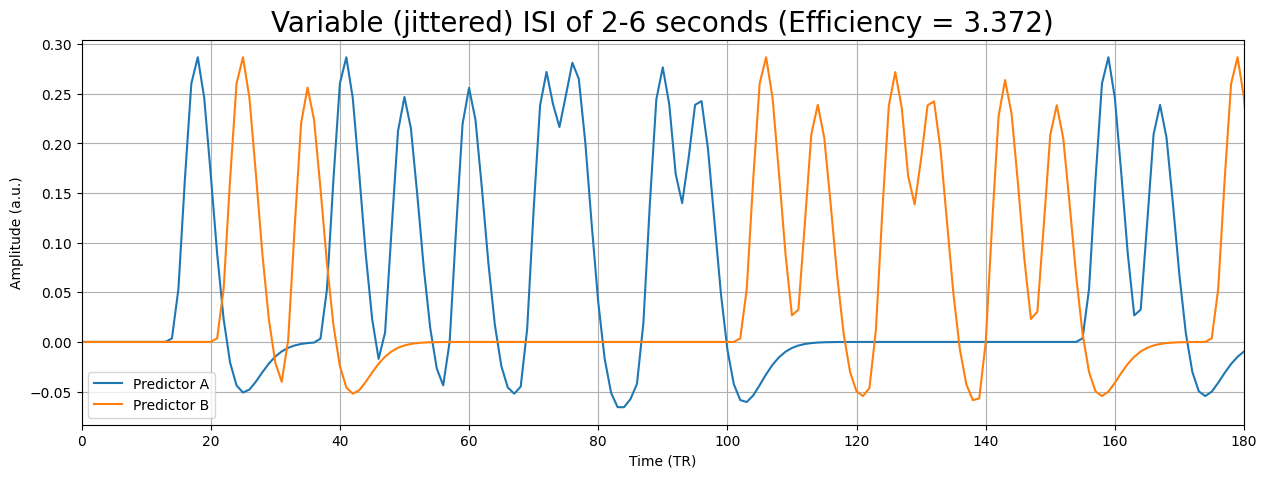

So, what do we do in practice? Usually, we use (semi-random) event-related designs. We lose some efficiency by using event-related designs instead of blocked designs, but we reduce the chance of psychological factors and other effects that reduce the measured signal or mess with the assumption of linear time-invariance.

Given that we’re going to use some event-related (i.e., “random”) design, let’s investigate how we can optimize this type of design.

Summary: how to optimize your design for efficiency#

So, in this section we discussed how to structure your experiment such that it yields a (relatively) high design efficiency, which will optimize our chance to find significant effects. How you do this depends on whether you aim for estimation (what is the shape of the HRF?) or for detection (what is the amplitude of the response?). Usually, we aim for detection; in that case, designs can be roughly grouped in two types: blocked designs an event-related designs. Purely statistically speaking, blocked designs are (almost always) more efficient, because they generally have lower covariance and higher variance than event-related designs. However, due to psychological factors and potential violations of the linear time-invariance of the BOLD-response, we often opt for event-related designs in the end. For event-related designs, we can increase our chance of finding a relatively efficient design by jittering our ISIs.