First-level analyses (using FSL)#

This notebook provides the first part (first-level analysis) of an introduction to multilevel models and how to implement them in FSL. The next notebook, run_level_analysis.ipynb, will continue with run-level analysis, and next week we will conclude this topic with group-level analyses. If you haven’t done so already, please go throught linux_and_the_command_line notebook first!

You actually know most about “first-level analyses” already, as it describes the process of modelling single-subject (single-run) timeseries data using the GLM, which we discussed at length in week 2. In this notebook, you’ll learn how to use FSL for (preprocessing and) first-level analyses. Note that there are several other excellent neuroimaging software packages, such as SPM (Matlab-based), AFNI, BROCCOLI, Freesurfer, and Nilearn. In fact, in week 7, there is a notebook on how to use Nilearn to fit statistical models on fMRI data.

We like FSL in particular, as it’s a mature and well-maintained package, free and open-source, and provides both a graphical interface and a command line interface for most of its tools.

What you’ll learn: after this lab, you’ll be able to …

visualize and inspect (f)MRI in FSLeyes;

set up a first-level model in FSL FEAT

Estimated time needed to complete: 2-4 hours

# Import functionality

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

Using FSLeyes#

In the previous notebook about Linux and the command line, we discussed how to use built-in Linux commands and FSL-specific commands using the command line. Now, let’s focus on using a specific FSL tool called “FSLeyes”, which is a useful program to view (f)MRI files (basically any nifti-file). We can start this graphical tool from the command line with the command: fsleyes &. We append the & to the FSLeyes command because this makes sure FSLeyes will run “in the background” and thus allows us to keep using the command line (if we would not use the &, FSLeyes would run be active “in your terminal”, and we wouldn’t be able to use that anymore).

This should open a new window with FSLeyes. Now, let’s open a file!

Now, FSLeyes should look similar to the figure below.

As you can see, FSLeyes consists of multiple views, panels, and toolbars. You should see an anatomical MRI image shown in three perspectives (sagittal, coronal, and axial) with green crosshairs in the “Ortho view”.

Notice the labels on the sides of the image: P (posterior), A (anterior), I (inferior), S (superior), L (left), and R (right).

In the “Overlay toolbar”, you can adjust both the brightness and contrast of the image. One could argue that the default settings are a little bit too dark (for this scan at least).

Importantly, while moving the location of the crosshairs, you see in the lower right corner (under the “Location panel”) that some numbers change. The left side of the panel shows you both the location of the crosshairs in “world coordinates” (relative to the isocenter of the scanner, in millimeters) and in “voxel coordinates” (starting from 0).

The right side of the panel shows you the voxel coordinates of the crosshairs together with the signal intensity, e.g., \([156\ 123\ 87]: 600774.75\). Try changing the crosshairs from a voxel in the CSF, to a voxel in gray matter, and a voxel in white matter and observe how the signal intensity changes.

# Replace the Nones with the correct numbers

X = None

Y = None

Z = None

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo.'''

from niedu.tests.nii.week_5 import test_how_many_slices_fsleyes

test_how_many_slices_fsleyes(X, Y, Z)

Now, let’s check out some functional data (fMRI) in FSLeyes!

FSLeyes neatly plots the functional data on top of the anatomical image. Note that the resolution differs between the anatomical (\(1 \times 1 \times 1\) mm) and functional (\(2.7 \times 2.7 \times 2.97\) mm)! FSLeyes still allows you to view these images on top of each other by interpolating the images.

Now, let’s make our view a little bit nicer.

Setting a minimum value will threshold the image at this value, which will mask most of the background. Now, let’s also change the color scheme for the func file to be able to distinguish the func and anat file a little better:

Now you can clearly see that setting a threshold did not mask all non-brain tissue, as some skull seems to still show “activity”!

Again, just like with the anatomical scan, the “Location panel” shows you both the (voxel and world) coordinates as well as the signal intensity.

Instead of manually adjusting the volume number, you can press the “Video” icon (next to the big “+” icon, which you can use to toggle the crosshairs on or off) to start “Movie mode” in which FSLeyes will loop through all volumes. Due to the lag on the server (or a software bug in FSLeyes, I’m not sure), however, FSLeyes is not fast enough to render the volumes, so you’ll likely see a black screen…

That said, looping through your volumes is usually a great way to visually check for artifacts (e.g. spikes) and (extreme) motion in your data! We highly recommend doing this for all your data!

Another useful way to view your fMRI data is using the “Time series” view.

FSLeyes has many more features than we discussed in this section (e.g., working with standard atlases/parcellations). We’ll demontrate these features throughout the rest of this lab where appropriate!

Skullstripping#

When viewing the anatomical scan in the previous section, you might have noticed that the scan still contained the subject’s skull. Usually, the skull is removed because it improves registration to the subject’s anatomical T1-scan (especially if you use BBR-based registration). This process is often called “skullstripping” and FSL contains the skullstripping-tool bet (which stands for “Brain Extraction Tool”). We’ll demonstrate how to use bet using its command-line interface.

Like all FSL tools with command-line interfaces, you can get more info on how to use it by calling the command with the flag --help.

You see that the “bet” command should be called as follows:

bet <input> <output> [options/flags]

We recommend visually checking the result of skullstripping for imperfections (e.g. part of the brain that are excluded or parts of non-brain that are included). Let’s do this in FSLeyes!

Then, load the non-skullstripped anatomical file (sub-02_desc-preproc_T1w.nii.gz) first. Then, overlay the skullstripped image (the sub-02_desc-preproc+bet_T1w.nii.gz file you just created). The difference between the non-skullstripped and the skullstriped image is, now, however not clear; to make this clearer, we can pick a different colormap for the skullstripped brain! For example, change the “Grayscale” colormap to “Red-Yellow”. Also, change the opacity to about 50% to better see the underlying non-skullstripped anatomical scan.

Scroll through the image to see whether bet did a good job.

Also, it failed to remove some non-brain tissue. For example, what non-brain structure do you think is located at \([X=143, Y=178, Z=72]\)? And at \([X=140, Y=124, Z=44]\)?

You could, of course, just skullstrip all your participants without looking at the resulting skullstripped brain images. However, as we just saw, this may lead to suboptimal results, which may bias subsequent preprocessing steps, such as functional → anatomical registration and spatial normalization to a standard template. As such, we recommend visually inspecting the skullstrip result for each participant in your dataset!

If you find that bet did not do a proper job, you can adjust several parameters to improve the skullstripping operation. The two most useful parameters to adjust are “robust brain centre estimation” (flag -R), “fractional intensity threshold” (flag -f <value>), and the vertical gradient (flag -g <values>). We recommend always enabling “robust brain centre estimation” (which iterates the operation multiple times, which usually gives more robust results).

The proper value for fractional intensity threshold (-f) and the vertical gradient (-g), however, you need to “tune” yourself (although the default, 0.5, is a good starting value). Smaller values for fractional intensity threshold give you larger brain outline estimates (i.e., “threshold” to be used for image is lower). With the vertical gradient you can tell bet to be relatively more “aggressive” (with values < 0) or “lenient” (with values > 0) with removing tissue at the bottom (inferior side) of the brain. So, e.g., using -g -0.1 will remove relatively more tissue at the bottom of the brain.

To include these parameters, you can call bet with, for example, a “fractional intensity threshold” of 0.4, a vertical gradient of -0.1, and robust brain centre estimation enabled as follows:

bet <input> <output> -f 0.4 -g -0.1 -R

For this ToDo, try out some different parameters for fractional intensity threshold and vertical gradient, and enable robust brain centre estimation. Note that the output file will be overwritten if you use the same output-name (which is fine if you don’t want to save the previous result). Also, there may not be a combination of settings that creates a “perfect” skullstrip result (at least for this brain).

Once you’re happy with the segmentation, overlay it on the original anatomical file in FSLeyes (and use a different colormap). Then, make a snapshot using the camera icon and save it in your week_5 directory (name it “my_skullstripped_image.png”). After you created and saved the snapshot, add the following line to the answer-cell below:

After adding this to the text-cell below and running the cell, the snapshot should be visible in the notebook. (This allows us to check whether you actually did the ToDo; there is not necessarily a “correct” answer to this ToDo — we just want you to mess around with bet and the command line).

YOUR ANSWER HERE

Note that adjusting the parameters of bet may in fact not completely solve all the imperfections in the skullstripping procedure. If you really care about accurate segmentation (e.g., when doing anatomical analyses such as voxel-based morphometry or surface-based analyses), you may consider editing your skullstrip result manually. FSLeyes has an “edit mode” (Tools → Edit mode) in which can manually fill or erase voxels based on your own judgment what is actual brain tissue and what is not.

Skullstripping is actually the only preprocessing operation you have to do manually using the command line (which might be a good idea, anyway, as you should inspect the result manually). The rest of the preprocessing steps within FSL are actually neatly integrated in the FSL tool “FEAT” (FMRI Expert Analysis Tool), which is FSL’s main analysis workhorse (implementing first-level and grouplevel analyses). We’ll discuss doing first-level and fixed-effect analyses with FEAT later in this tutorial, but first let’s talk about the dataset that we’ll use for the upcoming lab!

The “NI-edu” dataset#

So far in this course, we have used primarily simulated data. From this week onwards, we’ll also use some real data from a dataset (which we’ll call “NI-edu”) acquired at our 3T scanner at the University of Amsterdam. The NI-edu study has been specifically “designed” for this (and the follow-up “pattern analysis”) course. The experimental paradigms used for this dataset are meant to investigate how the brain processes information from faces and the representation of their subjective impressions (e.g., their perceived attractiveness, dominance, and trustworthiness). In addition to tasks that investigate face processing specifically, the dataset also includes several runs with a “functional localizer” task, which can be used to identify regions that preferentially respond to a particular object category (like faces, bodies, characters, etc.). In this course, we’ll mostly use the localizer data, because the associated task (design) is relativelly simple and generates a robust signal.

Below, we download the data needed for this tutorial, which is the anatomical data and functional data from a single run of the flocBLOCKED task (see below) from a single subject (sub-03). The data will be saved in your home directory in a folder named “NI-edu-data”.

import os

data_dir = os.path.join(os.path.expanduser("~"), 'NI-edu-data')

print("Data (+- 110MB) will be saved in %s ..." % data_dir)

print("Go get some coffee. This may take a while.\n")

# Using the CLI interface of the `awscli` Python package, we'll download the data

# Note that we only download the preprocessed data from a single run from a single subject

# This may take about 2-10 minutes or so (depending on your download speed)

# Download anatomical files

!aws s3 sync --no-sign-request s3://openneuro.org/ds003965 {data_dir} --exclude "*" --include "sub-03/ses-1/anat/*"

# And functional files

!aws s3 sync --no-sign-request s3://openneuro.org/ds003965 {data_dir} --exclude "*" --include "sub-03/ses-1/func/*task-flocBLOCKED*"

# And a brain mask (needed for FEAT, later)

!aws s3 sync --no-sign-request s3://openneuro.org/ds003965 {data_dir} --exclude "*" --include "derivatives/fmriprep/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_desc-brain_mask.nii.gz"

print("\nDone!")

Data (+- 110MB) will be saved in /home/runner/NI-edu-data ...

Go get some coffee. This may take a while.

Completed 256.0 KiB/8.3 MiB (799.5 KiB/s) with 2 file(s) remaining

Completed 512.0 KiB/8.3 MiB (1.6 MiB/s) with 2 file(s) remaining

Completed 768.0 KiB/8.3 MiB (2.3 MiB/s) with 2 file(s) remaining

Completed 1.0 MiB/8.3 MiB (3.1 MiB/s) with 2 file(s) remaining

Completed 1.2 MiB/8.3 MiB (3.8 MiB/s) with 2 file(s) remaining

Completed 1.5 MiB/8.3 MiB (4.5 MiB/s) with 2 file(s) remaining

Completed 1.8 MiB/8.3 MiB (5.3 MiB/s) with 2 file(s) remaining

Completed 2.0 MiB/8.3 MiB (6.0 MiB/s) with 2 file(s) remaining

Completed 2.2 MiB/8.3 MiB (6.7 MiB/s) with 2 file(s) remaining

Completed 2.5 MiB/8.3 MiB (7.4 MiB/s) with 2 file(s) remaining

Completed 2.8 MiB/8.3 MiB (8.1 MiB/s) with 2 file(s) remaining

Completed 2.8 MiB/8.3 MiB (8.2 MiB/s) with 2 file(s) remaining

Completed 3.0 MiB/8.3 MiB (8.9 MiB/s) with 2 file(s) remaining

Completed 3.3 MiB/8.3 MiB (9.6 MiB/s) with 2 file(s) remaining

Completed 3.5 MiB/8.3 MiB (10.3 MiB/s) with 2 file(s) remaining

Completed 3.8 MiB/8.3 MiB (11.0 MiB/s) with 2 file(s) remaining

Completed 4.0 MiB/8.3 MiB (11.6 MiB/s) with 2 file(s) remaining

Completed 4.3 MiB/8.3 MiB (12.3 MiB/s) with 2 file(s) remaining

Completed 4.5 MiB/8.3 MiB (13.0 MiB/s) with 2 file(s) remaining

Completed 4.8 MiB/8.3 MiB (13.7 MiB/s) with 2 file(s) remaining

Completed 5.0 MiB/8.3 MiB (14.4 MiB/s) with 2 file(s) remaining

Completed 5.3 MiB/8.3 MiB (15.0 MiB/s) with 2 file(s) remaining

Completed 5.5 MiB/8.3 MiB (15.7 MiB/s) with 2 file(s) remaining

Completed 5.8 MiB/8.3 MiB (16.3 MiB/s) with 2 file(s) remaining

Completed 5.8 MiB/8.3 MiB (16.2 MiB/s) with 2 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_T1w.json to ../../../../../../NI-edu-data/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_T1w.json

Completed 5.8 MiB/8.3 MiB (16.2 MiB/s) with 1 file(s) remaining

Completed 6.0 MiB/8.3 MiB (16.9 MiB/s) with 1 file(s) remaining

Completed 6.3 MiB/8.3 MiB (17.5 MiB/s) with 1 file(s) remaining

Completed 6.5 MiB/8.3 MiB (18.2 MiB/s) with 1 file(s) remaining

Completed 6.8 MiB/8.3 MiB (18.8 MiB/s) with 1 file(s) remaining

Completed 7.0 MiB/8.3 MiB (19.5 MiB/s) with 1 file(s) remaining

Completed 7.3 MiB/8.3 MiB (20.1 MiB/s) with 1 file(s) remaining

Completed 7.5 MiB/8.3 MiB (20.7 MiB/s) with 1 file(s) remaining

Completed 7.8 MiB/8.3 MiB (21.4 MiB/s) with 1 file(s) remaining

Completed 8.0 MiB/8.3 MiB (22.0 MiB/s) with 1 file(s) remaining

Completed 8.3 MiB/8.3 MiB (22.6 MiB/s) with 1 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_T1w.nii.gz to ../../../../../../NI-edu-data/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_T1w.nii.gz

Completed 256.0 KiB/114.2 MiB (427.4 KiB/s) with 6 file(s) remaining

Completed 512.0 KiB/114.2 MiB (851.0 KiB/s) with 6 file(s) remaining

Completed 768.0 KiB/114.2 MiB (1.2 MiB/s) with 6 file(s) remaining

Completed 1.0 MiB/114.2 MiB (1.4 MiB/s) with 6 file(s) remaining

Completed 1.2 MiB/114.2 MiB (1.8 MiB/s) with 6 file(s) remaining

Completed 1.5 MiB/114.2 MiB (2.1 MiB/s) with 6 file(s) remaining

Completed 1.8 MiB/114.2 MiB (2.5 MiB/s) with 6 file(s) remaining

Completed 2.0 MiB/114.2 MiB (2.8 MiB/s) with 6 file(s) remaining

Completed 2.2 MiB/114.2 MiB (3.2 MiB/s) with 6 file(s) remaining

Completed 2.5 MiB/114.2 MiB (3.5 MiB/s) with 6 file(s) remaining

Completed 2.8 MiB/114.2 MiB (3.9 MiB/s) with 6 file(s) remaining

Completed 3.0 MiB/114.2 MiB (4.2 MiB/s) with 6 file(s) remaining

Completed 3.2 MiB/114.2 MiB (4.5 MiB/s) with 6 file(s) remaining

Completed 3.5 MiB/114.2 MiB (4.9 MiB/s) with 6 file(s) remaining

Completed 3.8 MiB/114.2 MiB (5.2 MiB/s) with 6 file(s) remaining

Completed 4.0 MiB/114.2 MiB (5.5 MiB/s) with 6 file(s) remaining

Completed 4.2 MiB/114.2 MiB (5.8 MiB/s) with 6 file(s) remaining

Completed 4.5 MiB/114.2 MiB (6.1 MiB/s) with 6 file(s) remaining

Completed 4.8 MiB/114.2 MiB (6.5 MiB/s) with 6 file(s) remaining

Completed 5.0 MiB/114.2 MiB (6.8 MiB/s) with 6 file(s) remaining

Completed 5.2 MiB/114.2 MiB (7.1 MiB/s) with 6 file(s) remaining

Completed 5.5 MiB/114.2 MiB (7.5 MiB/s) with 6 file(s) remaining

Completed 5.8 MiB/114.2 MiB (7.8 MiB/s) with 6 file(s) remaining

Completed 6.0 MiB/114.2 MiB (8.1 MiB/s) with 6 file(s) remaining

Completed 6.2 MiB/114.2 MiB (8.4 MiB/s) with 6 file(s) remaining

Completed 6.5 MiB/114.2 MiB (8.7 MiB/s) with 6 file(s) remaining

Completed 6.8 MiB/114.2 MiB (9.0 MiB/s) with 6 file(s) remaining

Completed 7.0 MiB/114.2 MiB (9.3 MiB/s) with 6 file(s) remaining

Completed 7.2 MiB/114.2 MiB (9.7 MiB/s) with 6 file(s) remaining

Completed 7.5 MiB/114.2 MiB (10.0 MiB/s) with 6 file(s) remaining

Completed 7.8 MiB/114.2 MiB (10.3 MiB/s) with 6 file(s) remaining

Completed 8.0 MiB/114.2 MiB (10.6 MiB/s) with 6 file(s) remaining

Completed 8.2 MiB/114.2 MiB (10.9 MiB/s) with 6 file(s) remaining

Completed 8.5 MiB/114.2 MiB (11.3 MiB/s) with 6 file(s) remaining

Completed 8.8 MiB/114.2 MiB (11.6 MiB/s) with 6 file(s) remaining

Completed 9.0 MiB/114.2 MiB (11.9 MiB/s) with 6 file(s) remaining

Completed 9.2 MiB/114.2 MiB (12.2 MiB/s) with 6 file(s) remaining

Completed 9.5 MiB/114.2 MiB (12.5 MiB/s) with 6 file(s) remaining

Completed 9.8 MiB/114.2 MiB (12.8 MiB/s) with 6 file(s) remaining

Completed 10.0 MiB/114.2 MiB (13.1 MiB/s) with 6 file(s) remaining

Completed 10.2 MiB/114.2 MiB (13.4 MiB/s) with 6 file(s) remaining

Completed 10.5 MiB/114.2 MiB (13.8 MiB/s) with 6 file(s) remaining

Completed 10.8 MiB/114.2 MiB (14.1 MiB/s) with 6 file(s) remaining

Completed 11.0 MiB/114.2 MiB (14.3 MiB/s) with 6 file(s) remaining

Completed 11.2 MiB/114.2 MiB (14.6 MiB/s) with 6 file(s) remaining

Completed 11.5 MiB/114.2 MiB (15.0 MiB/s) with 6 file(s) remaining

Completed 11.8 MiB/114.2 MiB (15.3 MiB/s) with 6 file(s) remaining

Completed 12.0 MiB/114.2 MiB (15.5 MiB/s) with 6 file(s) remaining

Completed 12.2 MiB/114.2 MiB (15.8 MiB/s) with 6 file(s) remaining

Completed 12.5 MiB/114.2 MiB (16.0 MiB/s) with 6 file(s) remaining

Completed 12.8 MiB/114.2 MiB (16.3 MiB/s) with 6 file(s) remaining

Completed 13.0 MiB/114.2 MiB (16.6 MiB/s) with 6 file(s) remaining

Completed 13.2 MiB/114.2 MiB (16.8 MiB/s) with 6 file(s) remaining

Completed 13.5 MiB/114.2 MiB (17.2 MiB/s) with 6 file(s) remaining

Completed 13.8 MiB/114.2 MiB (17.5 MiB/s) with 6 file(s) remaining

Completed 14.0 MiB/114.2 MiB (17.8 MiB/s) with 6 file(s) remaining

Completed 14.2 MiB/114.2 MiB (18.1 MiB/s) with 6 file(s) remaining

Completed 14.5 MiB/114.2 MiB (18.3 MiB/s) with 6 file(s) remaining

Completed 14.8 MiB/114.2 MiB (18.6 MiB/s) with 6 file(s) remaining

Completed 15.0 MiB/114.2 MiB (18.9 MiB/s) with 6 file(s) remaining

Completed 15.2 MiB/114.2 MiB (19.2 MiB/s) with 6 file(s) remaining

Completed 15.5 MiB/114.2 MiB (19.4 MiB/s) with 6 file(s) remaining

Completed 15.8 MiB/114.2 MiB (19.7 MiB/s) with 6 file(s) remaining

Completed 16.0 MiB/114.2 MiB (20.0 MiB/s) with 6 file(s) remaining

Completed 16.2 MiB/114.2 MiB (20.3 MiB/s) with 6 file(s) remaining

Completed 16.5 MiB/114.2 MiB (20.6 MiB/s) with 6 file(s) remaining

Completed 16.8 MiB/114.2 MiB (20.9 MiB/s) with 6 file(s) remaining

Completed 17.0 MiB/114.2 MiB (21.2 MiB/s) with 6 file(s) remaining

Completed 17.2 MiB/114.2 MiB (21.5 MiB/s) with 6 file(s) remaining

Completed 17.5 MiB/114.2 MiB (21.7 MiB/s) with 6 file(s) remaining

Completed 17.8 MiB/114.2 MiB (22.0 MiB/s) with 6 file(s) remaining

Completed 18.0 MiB/114.2 MiB (22.3 MiB/s) with 6 file(s) remaining

Completed 18.2 MiB/114.2 MiB (22.5 MiB/s) with 6 file(s) remaining

Completed 18.5 MiB/114.2 MiB (22.8 MiB/s) with 6 file(s) remaining

Completed 18.8 MiB/114.2 MiB (23.1 MiB/s) with 6 file(s) remaining

Completed 19.0 MiB/114.2 MiB (23.4 MiB/s) with 6 file(s) remaining

Completed 19.2 MiB/114.2 MiB (23.6 MiB/s) with 6 file(s) remaining

Completed 19.5 MiB/114.2 MiB (23.9 MiB/s) with 6 file(s) remaining

Completed 19.8 MiB/114.2 MiB (24.1 MiB/s) with 6 file(s) remaining

Completed 19.8 MiB/114.2 MiB (24.1 MiB/s) with 6 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.tsv.gz to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.tsv.gz

Completed 19.8 MiB/114.2 MiB (24.1 MiB/s) with 5 file(s) remaining

Completed 20.0 MiB/114.2 MiB (24.4 MiB/s) with 5 file(s) remaining

Completed 20.3 MiB/114.2 MiB (24.6 MiB/s) with 5 file(s) remaining

Completed 20.5 MiB/114.2 MiB (24.9 MiB/s) with 5 file(s) remaining

Completed 20.8 MiB/114.2 MiB (25.1 MiB/s) with 5 file(s) remaining

Completed 21.0 MiB/114.2 MiB (25.3 MiB/s) with 5 file(s) remaining

Completed 21.0 MiB/114.2 MiB (25.3 MiB/s) with 5 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_bold.json to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_bold.json

Completed 21.0 MiB/114.2 MiB (25.3 MiB/s) with 4 file(s) remaining

Completed 21.3 MiB/114.2 MiB (25.5 MiB/s) with 4 file(s) remaining

Completed 21.5 MiB/114.2 MiB (25.8 MiB/s) with 4 file(s) remaining

Completed 21.8 MiB/114.2 MiB (26.0 MiB/s) with 4 file(s) remaining

Completed 22.0 MiB/114.2 MiB (26.2 MiB/s) with 4 file(s) remaining

Completed 22.3 MiB/114.2 MiB (26.4 MiB/s) with 4 file(s) remaining

Completed 22.5 MiB/114.2 MiB (26.7 MiB/s) with 4 file(s) remaining

Completed 22.8 MiB/114.2 MiB (26.9 MiB/s) with 4 file(s) remaining

Completed 23.0 MiB/114.2 MiB (27.2 MiB/s) with 4 file(s) remaining

Completed 23.3 MiB/114.2 MiB (27.4 MiB/s) with 4 file(s) remaining

Completed 23.5 MiB/114.2 MiB (27.6 MiB/s) with 4 file(s) remaining

Completed 23.8 MiB/114.2 MiB (27.9 MiB/s) with 4 file(s) remaining

Completed 24.0 MiB/114.2 MiB (28.1 MiB/s) with 4 file(s) remaining

Completed 24.3 MiB/114.2 MiB (28.4 MiB/s) with 4 file(s) remaining

Completed 24.5 MiB/114.2 MiB (28.6 MiB/s) with 4 file(s) remaining

Completed 24.8 MiB/114.2 MiB (28.9 MiB/s) with 4 file(s) remaining

Completed 25.0 MiB/114.2 MiB (29.1 MiB/s) with 4 file(s) remaining

Completed 25.3 MiB/114.2 MiB (29.3 MiB/s) with 4 file(s) remaining

Completed 25.5 MiB/114.2 MiB (29.6 MiB/s) with 4 file(s) remaining

Completed 25.8 MiB/114.2 MiB (29.8 MiB/s) with 4 file(s) remaining

Completed 26.0 MiB/114.2 MiB (30.1 MiB/s) with 4 file(s) remaining

Completed 26.3 MiB/114.2 MiB (30.3 MiB/s) with 4 file(s) remaining

Completed 26.5 MiB/114.2 MiB (30.6 MiB/s) with 4 file(s) remaining

Completed 26.8 MiB/114.2 MiB (30.8 MiB/s) with 4 file(s) remaining

Completed 27.0 MiB/114.2 MiB (31.0 MiB/s) with 4 file(s) remaining

Completed 27.3 MiB/114.2 MiB (31.2 MiB/s) with 4 file(s) remaining

Completed 27.5 MiB/114.2 MiB (31.3 MiB/s) with 4 file(s) remaining

Completed 27.8 MiB/114.2 MiB (31.6 MiB/s) with 4 file(s) remaining

Completed 28.0 MiB/114.2 MiB (31.8 MiB/s) with 4 file(s) remaining

Completed 28.3 MiB/114.2 MiB (32.1 MiB/s) with 4 file(s) remaining

Completed 28.5 MiB/114.2 MiB (32.4 MiB/s) with 4 file(s) remaining

Completed 28.8 MiB/114.2 MiB (32.6 MiB/s) with 4 file(s) remaining

Completed 29.0 MiB/114.2 MiB (32.8 MiB/s) with 4 file(s) remaining

Completed 29.3 MiB/114.2 MiB (32.8 MiB/s) with 4 file(s) remaining

Completed 29.5 MiB/114.2 MiB (33.0 MiB/s) with 4 file(s) remaining

Completed 29.8 MiB/114.2 MiB (33.2 MiB/s) with 4 file(s) remaining

Completed 30.0 MiB/114.2 MiB (33.5 MiB/s) with 4 file(s) remaining

Completed 30.3 MiB/114.2 MiB (33.7 MiB/s) with 4 file(s) remaining

Completed 30.5 MiB/114.2 MiB (34.0 MiB/s) with 4 file(s) remaining

Completed 30.8 MiB/114.2 MiB (34.0 MiB/s) with 4 file(s) remaining

Completed 31.0 MiB/114.2 MiB (34.2 MiB/s) with 4 file(s) remaining

Completed 31.3 MiB/114.2 MiB (34.5 MiB/s) with 4 file(s) remaining

Completed 31.5 MiB/114.2 MiB (34.7 MiB/s) with 4 file(s) remaining

Completed 31.8 MiB/114.2 MiB (35.0 MiB/s) with 4 file(s) remaining

Completed 32.0 MiB/114.2 MiB (35.2 MiB/s) with 4 file(s) remaining

Completed 32.3 MiB/114.2 MiB (35.4 MiB/s) with 4 file(s) remaining

Completed 32.5 MiB/114.2 MiB (35.7 MiB/s) with 4 file(s) remaining

Completed 32.8 MiB/114.2 MiB (35.9 MiB/s) with 4 file(s) remaining

Completed 33.0 MiB/114.2 MiB (36.1 MiB/s) with 4 file(s) remaining

Completed 33.3 MiB/114.2 MiB (36.3 MiB/s) with 4 file(s) remaining

Completed 33.5 MiB/114.2 MiB (36.5 MiB/s) with 4 file(s) remaining

Completed 33.8 MiB/114.2 MiB (36.7 MiB/s) with 4 file(s) remaining

Completed 34.0 MiB/114.2 MiB (36.9 MiB/s) with 4 file(s) remaining

Completed 34.3 MiB/114.2 MiB (36.9 MiB/s) with 4 file(s) remaining

Completed 34.5 MiB/114.2 MiB (37.2 MiB/s) with 4 file(s) remaining

Completed 34.8 MiB/114.2 MiB (37.4 MiB/s) with 4 file(s) remaining

Completed 35.0 MiB/114.2 MiB (37.7 MiB/s) with 4 file(s) remaining

Completed 35.3 MiB/114.2 MiB (37.9 MiB/s) with 4 file(s) remaining

Completed 35.5 MiB/114.2 MiB (38.1 MiB/s) with 4 file(s) remaining

Completed 35.8 MiB/114.2 MiB (38.3 MiB/s) with 4 file(s) remaining

Completed 36.0 MiB/114.2 MiB (38.4 MiB/s) with 4 file(s) remaining

Completed 36.3 MiB/114.2 MiB (38.7 MiB/s) with 4 file(s) remaining

Completed 36.5 MiB/114.2 MiB (38.9 MiB/s) with 4 file(s) remaining

Completed 36.8 MiB/114.2 MiB (39.1 MiB/s) with 4 file(s) remaining

Completed 37.0 MiB/114.2 MiB (39.3 MiB/s) with 4 file(s) remaining

Completed 37.3 MiB/114.2 MiB (39.4 MiB/s) with 4 file(s) remaining

Completed 37.5 MiB/114.2 MiB (39.6 MiB/s) with 4 file(s) remaining

Completed 37.8 MiB/114.2 MiB (39.8 MiB/s) with 4 file(s) remaining

Completed 38.0 MiB/114.2 MiB (40.0 MiB/s) with 4 file(s) remaining

Completed 38.3 MiB/114.2 MiB (40.1 MiB/s) with 4 file(s) remaining

Completed 38.5 MiB/114.2 MiB (40.3 MiB/s) with 4 file(s) remaining

Completed 38.8 MiB/114.2 MiB (40.5 MiB/s) with 4 file(s) remaining

Completed 39.0 MiB/114.2 MiB (40.7 MiB/s) with 4 file(s) remaining

Completed 39.3 MiB/114.2 MiB (41.0 MiB/s) with 4 file(s) remaining

Completed 39.5 MiB/114.2 MiB (41.2 MiB/s) with 4 file(s) remaining

Completed 39.8 MiB/114.2 MiB (41.4 MiB/s) with 4 file(s) remaining

Completed 40.0 MiB/114.2 MiB (41.6 MiB/s) with 4 file(s) remaining

Completed 40.1 MiB/114.2 MiB (41.5 MiB/s) with 4 file(s) remaining

Completed 40.3 MiB/114.2 MiB (41.6 MiB/s) with 4 file(s) remaining

Completed 40.6 MiB/114.2 MiB (41.8 MiB/s) with 4 file(s) remaining

Completed 40.8 MiB/114.2 MiB (42.1 MiB/s) with 4 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_events.tsv to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_events.tsv

Completed 40.8 MiB/114.2 MiB (42.1 MiB/s) with 3 file(s) remaining

Completed 40.8 MiB/114.2 MiB (41.9 MiB/s) with 3 file(s) remaining

Completed 41.1 MiB/114.2 MiB (42.1 MiB/s) with 3 file(s) remaining

Completed 41.3 MiB/114.2 MiB (42.3 MiB/s) with 3 file(s) remaining

Completed 41.6 MiB/114.2 MiB (42.5 MiB/s) with 3 file(s) remaining

Completed 41.8 MiB/114.2 MiB (42.6 MiB/s) with 3 file(s) remaining

Completed 42.1 MiB/114.2 MiB (42.9 MiB/s) with 3 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.json to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.json

Completed 42.1 MiB/114.2 MiB (42.9 MiB/s) with 2 file(s) remaining

Completed 42.3 MiB/114.2 MiB (43.0 MiB/s) with 2 file(s) remaining

Completed 42.6 MiB/114.2 MiB (43.3 MiB/s) with 2 file(s) remaining

Completed 42.8 MiB/114.2 MiB (43.5 MiB/s) with 2 file(s) remaining

Completed 43.1 MiB/114.2 MiB (43.7 MiB/s) with 2 file(s) remaining

Completed 43.3 MiB/114.2 MiB (43.9 MiB/s) with 2 file(s) remaining

Completed 43.6 MiB/114.2 MiB (44.0 MiB/s) with 2 file(s) remaining

Completed 43.8 MiB/114.2 MiB (44.2 MiB/s) with 2 file(s) remaining

Completed 44.1 MiB/114.2 MiB (44.4 MiB/s) with 2 file(s) remaining

Completed 44.3 MiB/114.2 MiB (44.7 MiB/s) with 2 file(s) remaining

Completed 44.6 MiB/114.2 MiB (44.9 MiB/s) with 2 file(s) remaining

Completed 44.8 MiB/114.2 MiB (45.1 MiB/s) with 2 file(s) remaining

Completed 45.1 MiB/114.2 MiB (45.4 MiB/s) with 2 file(s) remaining

Completed 45.3 MiB/114.2 MiB (45.6 MiB/s) with 2 file(s) remaining

Completed 45.6 MiB/114.2 MiB (45.8 MiB/s) with 2 file(s) remaining

Completed 45.8 MiB/114.2 MiB (46.0 MiB/s) with 2 file(s) remaining

Completed 46.1 MiB/114.2 MiB (46.1 MiB/s) with 2 file(s) remaining

Completed 46.3 MiB/114.2 MiB (46.4 MiB/s) with 2 file(s) remaining

Completed 46.6 MiB/114.2 MiB (46.6 MiB/s) with 2 file(s) remaining

Completed 46.8 MiB/114.2 MiB (46.8 MiB/s) with 2 file(s) remaining

Completed 47.1 MiB/114.2 MiB (47.0 MiB/s) with 2 file(s) remaining

Completed 47.3 MiB/114.2 MiB (47.1 MiB/s) with 2 file(s) remaining

Completed 47.6 MiB/114.2 MiB (47.3 MiB/s) with 2 file(s) remaining

Completed 47.8 MiB/114.2 MiB (47.5 MiB/s) with 2 file(s) remaining

Completed 48.1 MiB/114.2 MiB (47.7 MiB/s) with 2 file(s) remaining

Completed 48.3 MiB/114.2 MiB (47.9 MiB/s) with 2 file(s) remaining

Completed 48.6 MiB/114.2 MiB (48.1 MiB/s) with 2 file(s) remaining

Completed 48.8 MiB/114.2 MiB (48.3 MiB/s) with 2 file(s) remaining

Completed 49.1 MiB/114.2 MiB (48.6 MiB/s) with 2 file(s) remaining

Completed 49.3 MiB/114.2 MiB (48.7 MiB/s) with 2 file(s) remaining

Completed 49.6 MiB/114.2 MiB (48.9 MiB/s) with 2 file(s) remaining

Completed 49.8 MiB/114.2 MiB (49.1 MiB/s) with 2 file(s) remaining

Completed 50.1 MiB/114.2 MiB (49.3 MiB/s) with 2 file(s) remaining

Completed 50.3 MiB/114.2 MiB (49.4 MiB/s) with 2 file(s) remaining

Completed 50.6 MiB/114.2 MiB (49.6 MiB/s) with 2 file(s) remaining

Completed 50.8 MiB/114.2 MiB (49.8 MiB/s) with 2 file(s) remaining

Completed 51.1 MiB/114.2 MiB (49.9 MiB/s) with 2 file(s) remaining

Completed 51.3 MiB/114.2 MiB (50.1 MiB/s) with 2 file(s) remaining

Completed 51.6 MiB/114.2 MiB (50.3 MiB/s) with 2 file(s) remaining

Completed 51.8 MiB/114.2 MiB (50.5 MiB/s) with 2 file(s) remaining

Completed 52.1 MiB/114.2 MiB (50.6 MiB/s) with 2 file(s) remaining

Completed 52.3 MiB/114.2 MiB (50.8 MiB/s) with 2 file(s) remaining

Completed 52.6 MiB/114.2 MiB (51.0 MiB/s) with 2 file(s) remaining

Completed 52.8 MiB/114.2 MiB (51.2 MiB/s) with 2 file(s) remaining

Completed 53.1 MiB/114.2 MiB (51.4 MiB/s) with 2 file(s) remaining

Completed 53.3 MiB/114.2 MiB (51.6 MiB/s) with 2 file(s) remaining

Completed 53.6 MiB/114.2 MiB (51.8 MiB/s) with 2 file(s) remaining

Completed 53.8 MiB/114.2 MiB (52.0 MiB/s) with 2 file(s) remaining

Completed 54.1 MiB/114.2 MiB (52.2 MiB/s) with 2 file(s) remaining

Completed 54.3 MiB/114.2 MiB (52.4 MiB/s) with 2 file(s) remaining

Completed 54.6 MiB/114.2 MiB (52.5 MiB/s) with 2 file(s) remaining

Completed 54.8 MiB/114.2 MiB (52.6 MiB/s) with 2 file(s) remaining

Completed 55.1 MiB/114.2 MiB (52.9 MiB/s) with 2 file(s) remaining

Completed 55.3 MiB/114.2 MiB (53.1 MiB/s) with 2 file(s) remaining

Completed 55.6 MiB/114.2 MiB (53.2 MiB/s) with 2 file(s) remaining

Completed 55.8 MiB/114.2 MiB (53.4 MiB/s) with 2 file(s) remaining

Completed 56.1 MiB/114.2 MiB (53.6 MiB/s) with 2 file(s) remaining

Completed 56.3 MiB/114.2 MiB (53.8 MiB/s) with 2 file(s) remaining

Completed 56.6 MiB/114.2 MiB (54.0 MiB/s) with 2 file(s) remaining

Completed 56.8 MiB/114.2 MiB (54.1 MiB/s) with 2 file(s) remaining

Completed 57.1 MiB/114.2 MiB (54.3 MiB/s) with 2 file(s) remaining

Completed 57.3 MiB/114.2 MiB (54.5 MiB/s) with 2 file(s) remaining

Completed 57.6 MiB/114.2 MiB (54.7 MiB/s) with 2 file(s) remaining

Completed 57.8 MiB/114.2 MiB (54.9 MiB/s) with 2 file(s) remaining

Completed 58.1 MiB/114.2 MiB (55.1 MiB/s) with 2 file(s) remaining

Completed 58.3 MiB/114.2 MiB (55.3 MiB/s) with 2 file(s) remaining

Completed 58.6 MiB/114.2 MiB (55.4 MiB/s) with 2 file(s) remaining

Completed 58.8 MiB/114.2 MiB (55.6 MiB/s) with 2 file(s) remaining

Completed 59.1 MiB/114.2 MiB (55.8 MiB/s) with 2 file(s) remaining

Completed 59.3 MiB/114.2 MiB (56.1 MiB/s) with 2 file(s) remaining

Completed 59.6 MiB/114.2 MiB (56.2 MiB/s) with 2 file(s) remaining

Completed 59.8 MiB/114.2 MiB (56.3 MiB/s) with 2 file(s) remaining

Completed 60.1 MiB/114.2 MiB (56.5 MiB/s) with 2 file(s) remaining

Completed 60.3 MiB/114.2 MiB (56.7 MiB/s) with 2 file(s) remaining

Completed 60.6 MiB/114.2 MiB (56.9 MiB/s) with 2 file(s) remaining

Completed 60.8 MiB/114.2 MiB (57.1 MiB/s) with 2 file(s) remaining

Completed 61.1 MiB/114.2 MiB (57.2 MiB/s) with 2 file(s) remaining

Completed 61.3 MiB/114.2 MiB (57.5 MiB/s) with 2 file(s) remaining

Completed 61.6 MiB/114.2 MiB (57.7 MiB/s) with 2 file(s) remaining

Completed 61.8 MiB/114.2 MiB (57.9 MiB/s) with 2 file(s) remaining

Completed 62.1 MiB/114.2 MiB (58.1 MiB/s) with 2 file(s) remaining

Completed 62.3 MiB/114.2 MiB (58.3 MiB/s) with 2 file(s) remaining

Completed 62.6 MiB/114.2 MiB (58.4 MiB/s) with 2 file(s) remaining

Completed 62.8 MiB/114.2 MiB (58.5 MiB/s) with 2 file(s) remaining

Completed 63.1 MiB/114.2 MiB (58.7 MiB/s) with 2 file(s) remaining

Completed 63.3 MiB/114.2 MiB (58.8 MiB/s) with 2 file(s) remaining

Completed 63.6 MiB/114.2 MiB (59.0 MiB/s) with 2 file(s) remaining

Completed 63.8 MiB/114.2 MiB (59.2 MiB/s) with 2 file(s) remaining

Completed 64.1 MiB/114.2 MiB (59.4 MiB/s) with 2 file(s) remaining

Completed 64.3 MiB/114.2 MiB (59.6 MiB/s) with 2 file(s) remaining

Completed 64.6 MiB/114.2 MiB (59.8 MiB/s) with 2 file(s) remaining

Completed 64.8 MiB/114.2 MiB (59.9 MiB/s) with 2 file(s) remaining

Completed 65.1 MiB/114.2 MiB (60.0 MiB/s) with 2 file(s) remaining

Completed 65.3 MiB/114.2 MiB (60.3 MiB/s) with 2 file(s) remaining

Completed 65.6 MiB/114.2 MiB (60.4 MiB/s) with 2 file(s) remaining

Completed 65.8 MiB/114.2 MiB (60.6 MiB/s) with 2 file(s) remaining

Completed 66.1 MiB/114.2 MiB (60.8 MiB/s) with 2 file(s) remaining

Completed 66.3 MiB/114.2 MiB (60.9 MiB/s) with 2 file(s) remaining

Completed 66.6 MiB/114.2 MiB (61.0 MiB/s) with 2 file(s) remaining

Completed 66.8 MiB/114.2 MiB (61.2 MiB/s) with 2 file(s) remaining

Completed 67.1 MiB/114.2 MiB (61.3 MiB/s) with 2 file(s) remaining

Completed 67.3 MiB/114.2 MiB (61.5 MiB/s) with 2 file(s) remaining

Completed 67.6 MiB/114.2 MiB (61.6 MiB/s) with 2 file(s) remaining

Completed 67.8 MiB/114.2 MiB (61.8 MiB/s) with 2 file(s) remaining

Completed 68.1 MiB/114.2 MiB (61.9 MiB/s) with 2 file(s) remaining

Completed 68.3 MiB/114.2 MiB (62.1 MiB/s) with 2 file(s) remaining

Completed 68.6 MiB/114.2 MiB (62.3 MiB/s) with 2 file(s) remaining

Completed 68.8 MiB/114.2 MiB (62.4 MiB/s) with 2 file(s) remaining

Completed 69.1 MiB/114.2 MiB (62.6 MiB/s) with 2 file(s) remaining

Completed 69.3 MiB/114.2 MiB (62.7 MiB/s) with 2 file(s) remaining

Completed 69.6 MiB/114.2 MiB (62.8 MiB/s) with 2 file(s) remaining

Completed 69.8 MiB/114.2 MiB (62.9 MiB/s) with 2 file(s) remaining

Completed 70.1 MiB/114.2 MiB (63.1 MiB/s) with 2 file(s) remaining

Completed 70.3 MiB/114.2 MiB (63.3 MiB/s) with 2 file(s) remaining

Completed 70.6 MiB/114.2 MiB (63.5 MiB/s) with 2 file(s) remaining

Completed 70.8 MiB/114.2 MiB (63.7 MiB/s) with 2 file(s) remaining

Completed 71.1 MiB/114.2 MiB (63.8 MiB/s) with 2 file(s) remaining

Completed 71.3 MiB/114.2 MiB (63.9 MiB/s) with 2 file(s) remaining

Completed 71.6 MiB/114.2 MiB (64.0 MiB/s) with 2 file(s) remaining

Completed 71.8 MiB/114.2 MiB (64.2 MiB/s) with 2 file(s) remaining

Completed 72.1 MiB/114.2 MiB (64.4 MiB/s) with 2 file(s) remaining

Completed 72.3 MiB/114.2 MiB (64.6 MiB/s) with 2 file(s) remaining

Completed 72.6 MiB/114.2 MiB (64.8 MiB/s) with 2 file(s) remaining

Completed 72.8 MiB/114.2 MiB (64.9 MiB/s) with 2 file(s) remaining

Completed 73.1 MiB/114.2 MiB (65.1 MiB/s) with 2 file(s) remaining

Completed 73.3 MiB/114.2 MiB (65.1 MiB/s) with 2 file(s) remaining

Completed 73.6 MiB/114.2 MiB (65.2 MiB/s) with 2 file(s) remaining

Completed 73.8 MiB/114.2 MiB (65.4 MiB/s) with 2 file(s) remaining

Completed 74.1 MiB/114.2 MiB (65.4 MiB/s) with 2 file(s) remaining

Completed 74.3 MiB/114.2 MiB (65.6 MiB/s) with 2 file(s) remaining

Completed 74.6 MiB/114.2 MiB (65.8 MiB/s) with 2 file(s) remaining

Completed 74.8 MiB/114.2 MiB (66.0 MiB/s) with 2 file(s) remaining

Completed 75.1 MiB/114.2 MiB (66.1 MiB/s) with 2 file(s) remaining

Completed 75.3 MiB/114.2 MiB (66.3 MiB/s) with 2 file(s) remaining

Completed 75.6 MiB/114.2 MiB (66.4 MiB/s) with 2 file(s) remaining

Completed 75.8 MiB/114.2 MiB (66.6 MiB/s) with 2 file(s) remaining

Completed 76.1 MiB/114.2 MiB (66.7 MiB/s) with 2 file(s) remaining

Completed 76.3 MiB/114.2 MiB (66.9 MiB/s) with 2 file(s) remaining

Completed 76.6 MiB/114.2 MiB (67.0 MiB/s) with 2 file(s) remaining

Completed 76.8 MiB/114.2 MiB (67.1 MiB/s) with 2 file(s) remaining

Completed 77.1 MiB/114.2 MiB (67.2 MiB/s) with 2 file(s) remaining

Completed 77.3 MiB/114.2 MiB (67.3 MiB/s) with 2 file(s) remaining

Completed 77.6 MiB/114.2 MiB (67.5 MiB/s) with 2 file(s) remaining

Completed 77.8 MiB/114.2 MiB (67.7 MiB/s) with 2 file(s) remaining

Completed 78.1 MiB/114.2 MiB (67.9 MiB/s) with 2 file(s) remaining

Completed 78.3 MiB/114.2 MiB (68.0 MiB/s) with 2 file(s) remaining

Completed 78.6 MiB/114.2 MiB (68.2 MiB/s) with 2 file(s) remaining

Completed 78.8 MiB/114.2 MiB (68.4 MiB/s) with 2 file(s) remaining

Completed 79.1 MiB/114.2 MiB (68.5 MiB/s) with 2 file(s) remaining

Completed 79.3 MiB/114.2 MiB (68.6 MiB/s) with 2 file(s) remaining

Completed 79.6 MiB/114.2 MiB (68.8 MiB/s) with 2 file(s) remaining

Completed 79.8 MiB/114.2 MiB (69.0 MiB/s) with 2 file(s) remaining

Completed 80.1 MiB/114.2 MiB (69.1 MiB/s) with 2 file(s) remaining

Completed 80.3 MiB/114.2 MiB (69.3 MiB/s) with 2 file(s) remaining

Completed 80.6 MiB/114.2 MiB (69.5 MiB/s) with 2 file(s) remaining

Completed 80.8 MiB/114.2 MiB (69.6 MiB/s) with 2 file(s) remaining

Completed 81.1 MiB/114.2 MiB (69.7 MiB/s) with 2 file(s) remaining

Completed 81.3 MiB/114.2 MiB (69.9 MiB/s) with 2 file(s) remaining

Completed 81.6 MiB/114.2 MiB (70.0 MiB/s) with 2 file(s) remaining

Completed 81.8 MiB/114.2 MiB (70.2 MiB/s) with 2 file(s) remaining

Completed 82.1 MiB/114.2 MiB (70.4 MiB/s) with 2 file(s) remaining

Completed 82.3 MiB/114.2 MiB (70.5 MiB/s) with 2 file(s) remaining

Completed 82.6 MiB/114.2 MiB (70.7 MiB/s) with 2 file(s) remaining

Completed 82.8 MiB/114.2 MiB (70.8 MiB/s) with 2 file(s) remaining

Completed 83.1 MiB/114.2 MiB (71.0 MiB/s) with 2 file(s) remaining

Completed 83.3 MiB/114.2 MiB (71.2 MiB/s) with 2 file(s) remaining

Completed 83.6 MiB/114.2 MiB (71.3 MiB/s) with 2 file(s) remaining

Completed 83.8 MiB/114.2 MiB (71.5 MiB/s) with 2 file(s) remaining

Completed 84.1 MiB/114.2 MiB (71.6 MiB/s) with 2 file(s) remaining

Completed 84.3 MiB/114.2 MiB (71.8 MiB/s) with 2 file(s) remaining

Completed 84.6 MiB/114.2 MiB (72.0 MiB/s) with 2 file(s) remaining

Completed 84.8 MiB/114.2 MiB (72.1 MiB/s) with 2 file(s) remaining

Completed 85.1 MiB/114.2 MiB (72.3 MiB/s) with 2 file(s) remaining

Completed 85.3 MiB/114.2 MiB (72.4 MiB/s) with 2 file(s) remaining

Completed 85.6 MiB/114.2 MiB (72.6 MiB/s) with 2 file(s) remaining

Completed 85.8 MiB/114.2 MiB (72.8 MiB/s) with 2 file(s) remaining

Completed 86.1 MiB/114.2 MiB (72.9 MiB/s) with 2 file(s) remaining

Completed 86.3 MiB/114.2 MiB (73.1 MiB/s) with 2 file(s) remaining

Completed 86.6 MiB/114.2 MiB (73.2 MiB/s) with 2 file(s) remaining

Completed 86.8 MiB/114.2 MiB (73.4 MiB/s) with 2 file(s) remaining

Completed 87.1 MiB/114.2 MiB (73.6 MiB/s) with 2 file(s) remaining

Completed 87.3 MiB/114.2 MiB (73.7 MiB/s) with 2 file(s) remaining

Completed 87.6 MiB/114.2 MiB (73.9 MiB/s) with 2 file(s) remaining

Completed 87.8 MiB/114.2 MiB (74.0 MiB/s) with 2 file(s) remaining

Completed 88.1 MiB/114.2 MiB (74.1 MiB/s) with 2 file(s) remaining

Completed 88.3 MiB/114.2 MiB (74.3 MiB/s) with 2 file(s) remaining

Completed 88.6 MiB/114.2 MiB (74.4 MiB/s) with 2 file(s) remaining

Completed 88.8 MiB/114.2 MiB (74.6 MiB/s) with 2 file(s) remaining

Completed 89.1 MiB/114.2 MiB (74.8 MiB/s) with 2 file(s) remaining

Completed 89.3 MiB/114.2 MiB (74.9 MiB/s) with 2 file(s) remaining

Completed 89.6 MiB/114.2 MiB (75.1 MiB/s) with 2 file(s) remaining

Completed 89.8 MiB/114.2 MiB (75.3 MiB/s) with 2 file(s) remaining

Completed 90.1 MiB/114.2 MiB (75.5 MiB/s) with 2 file(s) remaining

Completed 90.3 MiB/114.2 MiB (75.6 MiB/s) with 2 file(s) remaining

Completed 90.6 MiB/114.2 MiB (75.8 MiB/s) with 2 file(s) remaining

Completed 90.6 MiB/114.2 MiB (75.9 MiB/s) with 2 file(s) remaining

Completed 90.9 MiB/114.2 MiB (76.0 MiB/s) with 2 file(s) remaining

Completed 91.1 MiB/114.2 MiB (76.2 MiB/s) with 2 file(s) remaining

Completed 91.4 MiB/114.2 MiB (76.3 MiB/s) with 2 file(s) remaining

Completed 91.6 MiB/114.2 MiB (76.5 MiB/s) with 2 file(s) remaining

Completed 91.9 MiB/114.2 MiB (76.6 MiB/s) with 2 file(s) remaining

Completed 92.1 MiB/114.2 MiB (76.8 MiB/s) with 2 file(s) remaining

Completed 92.4 MiB/114.2 MiB (76.9 MiB/s) with 2 file(s) remaining

Completed 92.6 MiB/114.2 MiB (77.1 MiB/s) with 2 file(s) remaining

Completed 92.9 MiB/114.2 MiB (77.2 MiB/s) with 2 file(s) remaining

Completed 93.1 MiB/114.2 MiB (77.4 MiB/s) with 2 file(s) remaining

Completed 93.4 MiB/114.2 MiB (77.5 MiB/s) with 2 file(s) remaining

Completed 93.6 MiB/114.2 MiB (77.7 MiB/s) with 2 file(s) remaining

Completed 93.9 MiB/114.2 MiB (77.8 MiB/s) with 2 file(s) remaining

Completed 94.1 MiB/114.2 MiB (78.0 MiB/s) with 2 file(s) remaining

Completed 94.4 MiB/114.2 MiB (78.1 MiB/s) with 2 file(s) remaining

Completed 94.6 MiB/114.2 MiB (78.2 MiB/s) with 2 file(s) remaining

Completed 94.9 MiB/114.2 MiB (78.4 MiB/s) with 2 file(s) remaining

Completed 95.1 MiB/114.2 MiB (78.6 MiB/s) with 2 file(s) remaining

Completed 95.4 MiB/114.2 MiB (78.2 MiB/s) with 2 file(s) remaining

Completed 95.6 MiB/114.2 MiB (78.4 MiB/s) with 2 file(s) remaining

Completed 95.9 MiB/114.2 MiB (78.5 MiB/s) with 2 file(s) remaining

Completed 96.1 MiB/114.2 MiB (78.7 MiB/s) with 2 file(s) remaining

Completed 96.4 MiB/114.2 MiB (78.8 MiB/s) with 2 file(s) remaining

Completed 96.6 MiB/114.2 MiB (79.0 MiB/s) with 2 file(s) remaining

Completed 96.9 MiB/114.2 MiB (79.1 MiB/s) with 2 file(s) remaining

Completed 97.1 MiB/114.2 MiB (79.3 MiB/s) with 2 file(s) remaining

Completed 97.4 MiB/114.2 MiB (79.4 MiB/s) with 2 file(s) remaining

Completed 97.6 MiB/114.2 MiB (79.5 MiB/s) with 2 file(s) remaining

Completed 97.9 MiB/114.2 MiB (79.7 MiB/s) with 2 file(s) remaining

Completed 98.1 MiB/114.2 MiB (79.8 MiB/s) with 2 file(s) remaining

Completed 98.4 MiB/114.2 MiB (79.9 MiB/s) with 2 file(s) remaining

Completed 98.6 MiB/114.2 MiB (80.1 MiB/s) with 2 file(s) remaining

Completed 98.9 MiB/114.2 MiB (80.2 MiB/s) with 2 file(s) remaining

Completed 99.1 MiB/114.2 MiB (80.4 MiB/s) with 2 file(s) remaining

Completed 99.4 MiB/114.2 MiB (80.5 MiB/s) with 2 file(s) remaining

Completed 99.6 MiB/114.2 MiB (80.6 MiB/s) with 2 file(s) remaining

Completed 99.9 MiB/114.2 MiB (80.8 MiB/s) with 2 file(s) remaining

Completed 100.1 MiB/114.2 MiB (80.9 MiB/s) with 2 file(s) remaining

Completed 100.4 MiB/114.2 MiB (81.1 MiB/s) with 2 file(s) remaining

Completed 100.6 MiB/114.2 MiB (81.2 MiB/s) with 2 file(s) remaining

Completed 100.9 MiB/114.2 MiB (81.4 MiB/s) with 2 file(s) remaining

Completed 101.1 MiB/114.2 MiB (81.6 MiB/s) with 2 file(s) remaining

Completed 101.4 MiB/114.2 MiB (81.7 MiB/s) with 2 file(s) remaining

Completed 101.6 MiB/114.2 MiB (81.9 MiB/s) with 2 file(s) remaining

Completed 101.9 MiB/114.2 MiB (82.0 MiB/s) with 2 file(s) remaining

Completed 102.1 MiB/114.2 MiB (82.2 MiB/s) with 2 file(s) remaining

Completed 102.4 MiB/114.2 MiB (82.4 MiB/s) with 2 file(s) remaining

Completed 102.6 MiB/114.2 MiB (82.5 MiB/s) with 2 file(s) remaining

Completed 102.9 MiB/114.2 MiB (82.6 MiB/s) with 2 file(s) remaining

Completed 103.1 MiB/114.2 MiB (82.7 MiB/s) with 2 file(s) remaining

Completed 103.4 MiB/114.2 MiB (82.9 MiB/s) with 2 file(s) remaining

Completed 103.6 MiB/114.2 MiB (83.0 MiB/s) with 2 file(s) remaining

Completed 103.9 MiB/114.2 MiB (83.1 MiB/s) with 2 file(s) remaining

Completed 104.1 MiB/114.2 MiB (83.2 MiB/s) with 2 file(s) remaining

Completed 104.4 MiB/114.2 MiB (83.4 MiB/s) with 2 file(s) remaining

Completed 104.6 MiB/114.2 MiB (83.5 MiB/s) with 2 file(s) remaining

Completed 104.9 MiB/114.2 MiB (83.6 MiB/s) with 2 file(s) remaining

Completed 105.1 MiB/114.2 MiB (83.7 MiB/s) with 2 file(s) remaining

Completed 105.4 MiB/114.2 MiB (83.9 MiB/s) with 2 file(s) remaining

Completed 105.6 MiB/114.2 MiB (84.1 MiB/s) with 2 file(s) remaining

Completed 105.9 MiB/114.2 MiB (84.2 MiB/s) with 2 file(s) remaining

Completed 106.1 MiB/114.2 MiB (84.4 MiB/s) with 2 file(s) remaining

Completed 106.4 MiB/114.2 MiB (84.5 MiB/s) with 2 file(s) remaining

Completed 106.6 MiB/114.2 MiB (84.7 MiB/s) with 2 file(s) remaining

Completed 106.9 MiB/114.2 MiB (84.9 MiB/s) with 2 file(s) remaining

Completed 107.1 MiB/114.2 MiB (85.0 MiB/s) with 2 file(s) remaining

Completed 107.4 MiB/114.2 MiB (85.2 MiB/s) with 2 file(s) remaining

Completed 107.6 MiB/114.2 MiB (85.3 MiB/s) with 2 file(s) remaining

Completed 107.9 MiB/114.2 MiB (85.3 MiB/s) with 2 file(s) remaining

Completed 108.1 MiB/114.2 MiB (85.5 MiB/s) with 2 file(s) remaining

Completed 108.4 MiB/114.2 MiB (85.6 MiB/s) with 2 file(s) remaining

Completed 108.6 MiB/114.2 MiB (85.8 MiB/s) with 2 file(s) remaining

Completed 108.9 MiB/114.2 MiB (85.8 MiB/s) with 2 file(s) remaining

Completed 109.1 MiB/114.2 MiB (86.0 MiB/s) with 2 file(s) remaining

Completed 109.4 MiB/114.2 MiB (86.1 MiB/s) with 2 file(s) remaining

Completed 109.6 MiB/114.2 MiB (86.2 MiB/s) with 2 file(s) remaining

Completed 109.9 MiB/114.2 MiB (86.2 MiB/s) with 2 file(s) remaining

Completed 110.1 MiB/114.2 MiB (86.4 MiB/s) with 2 file(s) remaining

Completed 110.4 MiB/114.2 MiB (86.5 MiB/s) with 2 file(s) remaining

Completed 110.6 MiB/114.2 MiB (86.6 MiB/s) with 2 file(s) remaining

Completed 110.9 MiB/114.2 MiB (86.7 MiB/s) with 2 file(s) remaining

Completed 111.1 MiB/114.2 MiB (86.9 MiB/s) with 2 file(s) remaining

Completed 111.4 MiB/114.2 MiB (87.0 MiB/s) with 2 file(s) remaining

Completed 111.6 MiB/114.2 MiB (87.2 MiB/s) with 2 file(s) remaining

Completed 111.9 MiB/114.2 MiB (87.3 MiB/s) with 2 file(s) remaining

Completed 112.1 MiB/114.2 MiB (87.5 MiB/s) with 2 file(s) remaining

Completed 112.4 MiB/114.2 MiB (87.6 MiB/s) with 2 file(s) remaining

Completed 112.6 MiB/114.2 MiB (87.7 MiB/s) with 2 file(s) remaining

Completed 112.7 MiB/114.2 MiB (87.8 MiB/s) with 2 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.log to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_recording-respcardiac_physio.log

Completed 112.7 MiB/114.2 MiB (87.8 MiB/s) with 1 file(s) remaining

Completed 113.0 MiB/114.2 MiB (87.9 MiB/s) with 1 file(s) remaining

Completed 113.2 MiB/114.2 MiB (88.0 MiB/s) with 1 file(s) remaining

Completed 113.5 MiB/114.2 MiB (88.1 MiB/s) with 1 file(s) remaining

Completed 113.7 MiB/114.2 MiB (88.0 MiB/s) with 1 file(s) remaining

Completed 114.0 MiB/114.2 MiB (88.2 MiB/s) with 1 file(s) remaining

Completed 114.2 MiB/114.2 MiB (88.3 MiB/s) with 1 file(s) remaining

download: s3://openneuro.org/ds003965/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_bold.nii.gz to ../../../../../../NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_bold.nii.gz

Completed 162.6 KiB/~162.6 KiB (848.8 KiB/s) with ~1 file(s) remaining (calculating...)

download: s3://openneuro.org/ds003965/derivatives/fmriprep/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_desc-brain_mask.nii.gz to ../../../../../../NI-edu-data/derivatives/fmriprep/sub-03/ses-1/anat/sub-03_ses-1_acq-AxialNsig2Mm1_desc-brain_mask.nii.gz

Completed 162.6 KiB/~162.6 KiB (848.8 KiB/s) with ~0 file(s) remaining (calculating...)

Done!

Anyway, let’s check out the structure of the directory with the data. The niedu package has a utility function (in the submodule utils) that returns the path to the root of the dataset:

if os.path.isdir(data_dir):

print("The dataset is located here: %s" % data_dir)

else:

print("WARNING: the dataset could not be found here: %s !" % data_dir)

The dataset is located here: /home/runner/NI-edu-data

Contents of the dataset#

Throughout the course, we’ll explain the contents of the dataset and use them for exercises and visualizations. For now, it suffices to know that the full dataset contains data from 13 subjects, each scanned twice (i.e., two sessions acquired at different days). Each subject has it’s own sub-directory, which contains an “anat” directory (with anatomical MRI files) and/or a “func” directory (with functional MRI files).

We can “peek” at the structure/contents of the dataset using the listdir function from the built-in os package (which stands for “Operating System”):

import os

print(os.listdir(data_dir))

['derivatives', 'sub-03']

The sub-03 directory contain the “raw” (i.e., not-preprocessed) data of subject 3. Let’s check out its contents. We’ll do this using a custom function show_tree from the niedu package:

from niedu.global_utils import show_directory_tree

# To create the full path, we use the function `os.path.join` instead of just writing out

# {data_dir}/sub-03. Effectively, `os.path.join` does the same, but takes into account your

# operating system, which is important because Windows paths are separated by backslashes

# (e.g., `sub-03\ses-1\anat`) but Mac and Linux paths are separated by forward slashes

# (e.g., `sub-03/ses-1/anat`).

sub_03_dir = os.path.join(data_dir, 'sub-03')

show_directory_tree(sub_03_dir, ignore='physio')

sub-03/

ses-1/

anat/

sub-03_ses-1_acq-AxialNsig2Mm1_T1w.json

sub-03_ses-1_acq-AxialNsig2Mm1_T1w.nii.gz

func/

sub-03_ses-1_task-flocBLOCKED_bold.json

sub-03_ses-1_task-flocBLOCKED_bold.nii.gz

sub-03_ses-1_task-flocBLOCKED_events.tsv

As you can see, the sub-03 directory contains a single session directory, “ses-1”. “Sessions” ususally refer to different days that the subject was scanned. Here, we only downloaded data from a single session (while, in fact, there is data from two sessions) in order to limit the amount of data that needed to be downloaded.

Within the ses-1 directory, there is a func directory (with functional MRI files) and an anat directory (with anatomical MRI files). These directories contain a bunch of different files, with different file types:

(Compressed) nifti files: these are the files with the extension

nii.gz, which contain the actual MRI data. These are discussed extensively in the next section;Json files: these are the files with the extension

json, which are plain-text files containing important metadata about the MRI-files (such as acquisition parameters). We won’t use these much in this course.Event files: these are the files ending in

_events.tsv, which contain information about the onset/duration of events during the fMRI paradigm.

This particular organization of the data is in accordance with the “Brain Imaging Data Structure” (BIDS; read more about BIDS here). If you are going to analyze a new or existing MRI dataset, we strongly suggest to convert it to BIDS first, not only because makes your life a lot easier, but also because there exist many tools geared towards BIDS-formatted datasets (such as fmriprep and mriqc)!

MRI acquisition parameters#

For each participant, we acquired one T1-weighted, high-resolution (\(1 \times 1 \times 1\) mm.) anatomical scan. Our functional scans were acquired using a (T2*-weighted) gradient-echo echo-planar imaging (GE-EPI) scan sequence with a TR of 700 milleseconds and a TE of 30 milliseconds and a spatial resolution of \(2.7 \times 2.7 \times 2.7\) mm. These functional scans used a “multiband acceleration factor” of 4 (which is a “simultaneous multislice” technique to acquire data from multiple slices within a single RF pulse, greatly accellerating acquisition time).

Experimental paradigms#

In this dataset, we used two types of experimental paradigms: a “functional localizer” (which we abbreviate as “floc”) and a simple face perception task (“face”). (We also acquired a resting-state scan, i.e. a scan without an explicit task, but we won’t use that for this course.) The two different floc paradigms (flocBLOCKED and flocER) were each run twice: once in each session. Each session contained 6 face perception runs (so 12 in total).

Functional localizer task#

The functional localizer paradigm we used was developed by the Stanford Vision & Perception Neuroscience Lab. Their stimulus set contains images from different categories:

characters (random strings of letters and numbers);

bodies (full bodies without head and close-ups of limbs);

faces (adults and children);

places (hallways and houses);

objects (musical instruments and cars).

These categories were chosen to target different known, (arguably) category-selective regions in the brain, including the occipital face area (OFA) and fusiform face area (FFA; face-selective), parahippocampal place area (PPA; place/scene selective), visual word form area (VWFA; character-selective) and extrastriate body area (EBA; body-selective).

In the functional localizer task, participants had to do a “one-back” task, in which they had to press a button with their right hand when they saw exactly the same image twice in a row (which was about 10% of the trials).

Importantly, we acquired this localizer in two variants: one with a blocked design (“flocBLOCKED”) and one with an event-related design (“flocER”; with ISIs from an exponential distribution with a 2 second minimum). The blocked version presented stimuli for 400 ms. with a fixed 100 ms. inter-stimulus interval in blocks of 12 stimuli (i.e., 6 seconds). Each run started and ended with a 6 second “null-blocks” (a “block” without stimuli) and additionally contained six randomly inserted null-blocks. The event-related version showed each stimulus for 400 ms. as well, but contained inter-stimulus intervals ranging from 2 to 8 seconds and no null-blocks apart from a null-block at the start and end of each run (see figure below for a visual representation of the paradigms).

These two different versions (blocked vs. event-related) were acquired to demonstrate the difference in detection and estimation efficiency of the two designs. In this course, we only use the blocked version.

Face perception task#

In the face perception task, participants were presented with images of faces (from the publicly available Face Research Lab London Set). In total, frontal face images from 40 different people (“identities”) were used, which were either without expression (“neutral”) or were smiling. Each face image (from in total 80 faces, i.e., 40 identities \(\times\) 2, neutral/smiling) was shown, per participant, 6 times across the 12 runs (3 times per session). We won’t discuss the data from this task, as it won’t be used in this course.

Using FSL FEAT for preprocessing and first-level analysis#

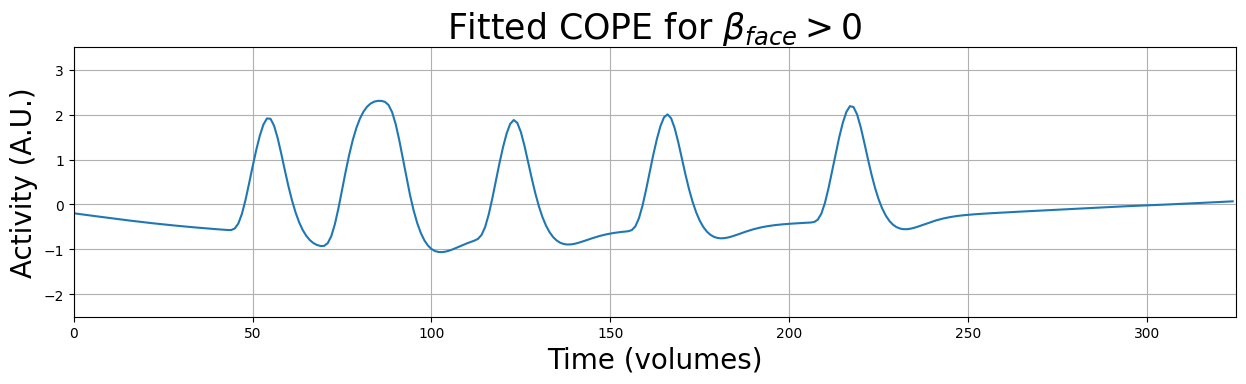

Most univariate fMRI studies contain data from a sample of multiple individuals. Often, the goal of these studies is to (1) estimate some effect (e.g., \(\beta_{face} >0\)) at the group-level, i.e., whether the effect is present within our sample, and subsequently, taking into account the variance (uncertainty) of this group-level effect, (2) to infer whether this effect is likely to be true in the broader population (but beware of the tricky interpretation of null-hypothesis significance tests).

A primer on the “summary statistics” approach#

When you have fMRI data from multiple participants, you are dealing with inherently hierarchical (sometimes called “multilevel”, “mixed”, or simply “nested”) data: you have data from multiple participants, which may be split across different runs or sessions, which each contain multiple observations (i.e., volumes across time).

Often, people use so called “mixed models” (or “multilevel models”) to deal with this kind of hierarchical data. These type of models use the GLM framework for estimating effects, but have a special way of estimating the variance of effects (i.e., \(\mathrm{var}[c\hat{\beta}]\)), which includes variance estimates from all levels within the model (subject-level, run-level, and group-level). In these mixed models, you can simply put all your data (\(y\) and \(\mathbf{X}\)) from different runs, sessions, and subjects, in a single GLM and estimate the effects of interest (and their variance).

Unfortunately, this “traditional” multilevel approach is not feasible for most univariate (whole-brain) analyses, because these models are quite computationally expensive (i.e., they take a long time to run), so doing this for all > 200,000 voxels is the brain is not very practical. Fortunately, the fMRI community came up with a solution: the “summary statistics” approach, which approximates a proper full multilevel model.

This summary statistics approach entails splitting up the analysis of (hierarchical) fMRI data into different steps:

Analysis at the single-subject (time series) level

Analysis at the run level (optional; only if you have >1 run!)

Analysis at the group level

The GLM analyses you did in week 2-4 were examples of “first-level” analyses, because they analyzed the time series of voxels from a single subject. This is the first step in the summary statistics approach. In this section, we’re going to demonstrate how to implement first-level analyses (and preprocessing) using FSL FEAT.

Later in this lab, we’re going to demonstrate how to implement analyses at the run-level. Next week, we’ll discuss group-level models. For now, let’s focus on how to run first-level analyses in FSL using FEAT.

First-level analyses (and preprocessing) in FEAT#

Alright, let’s get started with FEAT. You can start the FEAT graphical interface by typing Feat & in the command line (the & will open FEAT in the background, so you can keep using your command line).*

* Note that the upcoming ToDos do not have test-cells; at the very end of this section, you’ll save your FEAT setup, which we’ll test (with only hidden tests) afterwards.

The “Data” tab#

After calling Feat & in the command line, the FEAT GUI should pop up. This GUI has several “tabs”, of which the “Data” tab is displayed when opening FEAT. Below, we summarized the most important features of the “Data” tab.

print(sub_03_dir)

/home/runner/NI-edu-data/sub-03

We are going to analyze the flocBLOCKED data from the first session (ses-1): sub-03/ses-1/sub-03_ses-1_task-flocBLOCKED_bold.nii.gz.

sub_03_file = os.path.join(sub_03_dir, 'ses-1', 'func', 'sub-03_ses-1_task-flocBLOCKED_bold.nii.gz')

print("We are going to analyse: %s" % sub_03_file)

We are going to analyse: /home/runner/NI-edu-data/sub-03/ses-1/func/sub-03_ses-1_task-flocBLOCKED_bold.nii.gz

Let’s fill in the Data tab in FEAT. Click on “Select 4D data”, click on the “folder” icon, and navigate to the file (sub-03_ses-1_task-flocBLOCKED_bold.nii.gz). Once you’ve selected the file, click on “OK”. Note: within FEAT, you can click on .. to go “up” one folder. This way, you can “navigate” to the location of the file that you want to add.

Then, set the output directory to your home folder + sub-03_ses-1_task-flockBLOCKED. Note: this directory doesn’t exist yet! By setting the output directory to this directory, you’re telling FSL to create a directory here in which the results will be stored.

After selecting the 4D data, the “Total volumes” and “TR” fields should update to 325 and 0.700000, respectively. You can leave the “Delete volumes” field (0) and high-pass filter (100) as it is (no need to remove volumes and 100 second high-pass is a reasonable default).

The “Pre-stats” tab#

Now, let’s check out the “pre-stats” tab, which contains most of the preprocessing settings. Most settings are self-explanatory, but if you want to know more about the different options, hover you cursor above the option (the text, not the yellow box) for a couple of seconds and a green window will pop up with more information about that setting!

Note that the checkbox being yellow means that the option has been selected (and a gray box means it is not selected).

Ignore the “Alternative reference image”, “B0 unwarping”, “Intensity normalization”, “Perfusion subtraction”, and “ICA exploration” settings; these are beyond the scope of this course!

Let’s fill in the Pre-stats tab! Select the following:

Select “MCFLIRT” for motion-correction (this is the name of FSL’s motion-correction function);

Set “Slice timing correction” to “None” (we believe that slice-time correction does more harm than good for fMRI data with short TRs, like ours, but “your mileage may vary”);

Turn on “BET brain extraction” (this refers to skullstripping of the functional file! This can be done automatically, because it’s less error-prone than skullstripping of anatomical images);

Set the FWHM of the spatial smoothing to 4 mm;

Turn off “intensity normalization” (you should never use this for task-based fMRI analysis);

Turn on “highpass filtering”; leave “perfusion subtraction” unchecked;

Leave “ICA exploration” unchecked

The “Registration” tab#

Alright, let’s check out the “Registration” tab, in which you can setup the co-registration plan (from the functional data to the T1-weighted anatomical scan, and from the T1-weighted anatomical scan to standard space; we usually don’t have an “expanded functional image”, so you can ignore that).

Now, for the “Main structural image”, you actually need a high-resolution T1-weighted anatomical file that has already been skullstripped. You could do that with, for example, bet for this particular partipant. Below, we do this slightly differently, by applying an existing mask, computed by Fmriprep, to our structural image. We use the Python package Nilearn for this (you will learn more about this package in week 7)! No need to fully understand the code below.

from nilearn import masking, image

# Define anatomical file ...

anat = os.path.join(sub_03_dir, 'ses-1', 'anat', 'sub-03_ses-1_acq-AxialNsig2Mm1_T1w.nii.gz')

# ... and mask

mask = os.path.join(data_dir, 'derivatives', 'fmriprep', 'sub-03', 'ses-1', 'anat', 'sub-03_ses-1_acq-AxialNsig2Mm1_desc-brain_mask.nii.gz')

# Affine of mask is different than original anatomical file (?),

# so let's resample the mask to the image

mask = image.resample_to_img(mask, anat, interpolation='nearest')

# Set everything outside the mask to 0 using the apply_mask/unmask trick

anat_sks = masking.unmask(masking.apply_mask(anat, mask), mask)

# And finally save the skullstripped image in the same directory as the

# original anatomical file

out = anat.replace('.nii.gz', '_brain.nii.gz')

if not os.path.isfile(out):

anat_sks.to_filename(out)

You can leave the file under “Standard space” as it is (this is the MNI152 2mm template, one of the most used templates in the fMRI research community). Turn on the “Nonlinear” option in the “Standard space” box and do not change the default options of non-linear registration.

The “Stats” tab#

Almost there! Up next is the “Stats” tab, in which you specify the design (\(\mathbf{X}\)) you want to use to model the data.

Then, to specify our predictors-of-interest, we need to click the “Full model setup” button. This creates a new window in which we can create our predictors; see the image below for an explanation of the options.

The most often-used option to define the event onsets and durations is the “Custom (3 column format)” option. This allows you to select a standard text-file with three columns (separated by tabs), in which the first column indicates the event onsets (in seconds), the second column indicates the event duration (in seconds), and the third column indicates the event “weight” (which may vary in case of parametric designs; for non-parametric designs, you should set the weight-value to 1). Different rows define different events of a particular condition.

Our data only has a BIDS-compatible “events” file, which contain the onset, duration, and trial type of trials from all conditions, while FSL requires a separate events file per condition (and without a header). Below, we run a function that creates these separate condition files for us:

from niedu.utils.nii import create_fsl_onset_files

create_fsl_onset_files(data_dir)

Let’s set up the design! For our flocBLOCKED run, we should model each of the image conditions (“face”, “body”, “place”, “character”, and “object”) as separate predictors, assuming that they have a different effect on voxels (in different brain regions). Let’s model the responses (button presses) as well.

So, create six predictors with the names “face”, “body”, “place”, “character”, “object”, and “response” (in this exact order). To save you some work, we have already created the onset-files associated with these six different conditions (which end in: *condition-{name of condition}_events.txt).

For each predictor:

set the convolution to “Double-Gamma HRF”

turn off the “Add temporal derivative” and “Orthogonalise” options

leave the “Apply temporal filtering” option checked (this applies the high-pass filter to the design, \(X\), as well).

YOUR ANSWER HERE

If you did it correctly, you can press the “View design” button and a new window with the design should pop up (with the different predictors as squiggly lines from top top bottom). The red line of the left side of the design matrix represents the (length of the) high-pass filter.

YOUR ANSWER HERE

Now let’s create some contrasts! In the “Contrasts and F-tests” tab, you can specify the contrasts that you want to test. Importantly, FSL makes a difference between “Original EVs” and “Real EVs”. Original EVs are the predictors-of-interest that you defined in the “EVs” tab. Real EVs are the original EVs + the extra predictors that are generated due to the specific HRF-model. For example, if you’d choose a gamma basis set instead of a double-gamma HRF, the original canonical HRF of each predictor would be “Original EVs” while all predictors making up the HRF-components (canonical and temporal and dispersion derivatives) would be the “Real EVs”. In our case, the original and real EVs are the same.

To create contrasts (and F-tests, i.e., collections of contrasts), click on the “Contrasts & F-tests” tab. Here, you define the actual contrast vectors. For each contrast, you can give it a name (under “Title”) and set the contrast weight for each predictor (or “EV”). To create F-tests, set the number of F-tests that you want to do (i.e., increase the number next to the “F-tests” field), and check, per F-test, which contrasts to want to include in them. For example, if you want to include contrasts 1 and 2 in F-test, make sure that these checkboxes are selected under “F1” (or “F2”, “F3”, etc.).

Given the six original EVs (“face”, “body”, “place”, “character”, “object”, and “response”), define the contrasts corresponding to the following null (\(H_{0}\)) and alternative (\(H_{a}\)) hypotheses (give them whatever name you want, but adhere to this order):

\(H_{0}: \beta_{face} = 0\)

\(H_{a}: \beta_{face} > 0\)\(H_{0}: \beta_{place} = 0\)

\(H_{a}: \beta_{place} > 0\)\(H_{0}: \beta_{response} = 0\)

\(H_{a}: \beta_{response} > 0\)\(H_{0}: 4\times\beta_{face} - \beta_{body} - \beta_{place} - \beta_{character} - \beta_{object} = 0\)

\(H_{a}: 4\times\beta_{face} - \beta_{body} - \beta_{place} - \beta_{character} - \beta_{object} > 0\)\(H_{0}: 4\times\beta_{place} - \beta_{body} - \beta_{face} - \beta_{character} - \beta_{object} = 0\)

\(H_{a}: 4\times\beta_{place} - \beta_{body} - \beta_{face} - \beta_{character} - \beta_{object} > 0\)\(H_{0}: \beta_{face} = \beta_{body} = \beta_{place} = \beta_{character} = \beta_{object} = 0\)

\(H_{a}: (\beta_{face} > 0)\ \&\ (\beta_{body} > 0)\ \&\ (\beta_{place} > 0)\ \&\ (\beta_{character} > 0)\ \&\ (\beta_{object} > 0)\)\(H_{0}: \beta_{face} = \beta_{body} = \beta_{place} = \beta_{character} = \beta_{object} = 0\)

\(H_{a}: (\beta_{face} < 0)\ \&\ (\beta_{body} < 0)\ \&\ (\beta_{place} < 0)\ \&\ (\beta_{character} < 0)\ \&\ (\beta_{object} < 0)\)

Also, define the following F-test (where the vertical line stands for “or”):

\(H_{0}: \beta_{face} = \beta_{place} = 0\)

\(H_{a}: (\beta_{face} \neq 0)\ |\ (\beta_{place} \neq 0)\)

After you’re done defining the contrasts and F-test, you can press “View design” again, which will now also include the contrasts/F-tests that you defined. Moreover, if you click on “Efficiency”, a new window opens with information about the effiency of the design. On the left, a correlation matrix of the predictors is shown, where darker off-diagonal cells means lower correlation between predictors (which is a good thing!). On the right, the diagonal of the matrix shows you the “eigenvalues” of the singular value decomposition of the design matrix, which should decrease from top-left to bottom-right. In general, the brighter the values, the more efficient the design matrix. In the lower-right corner, it lists the estimated effect size (as % signal change) required for a significant effect. These estimates depend on your design efficiency (\(\mathbf{c}(\mathbf{X}^{T}\mathbf{X})^{-1}\mathbf{c}^{T}\)) and the assumed level of noise (\(\hat{\sigma}^2\)): the lower these values, the better!

The “Post-stats” tab#

Alright, the last tab! The “Post-stats” tab contains options to threshold the resulting statistics-maps. The only interesting options for our purposes are those in the “Thresholding” box. Technically, we don’t need to threshold any of our statistics, because we are only interested in inference at the group-level (not the first-level analysis; we’re not aiming to infer anything from the results of a single-subject!). But using a p-value threshold of 0.05 “uncorrected” (which means: not corrected for multiple comparisons — a topic we’ll discuss next week!) is usually used to detect any potential problems during the experimental, preprocessing, and analysis process. For example, if we don’t see significant visual cortex activation in response to a task with visual stimuli at \(p < 0.05\) (uncorrected), then it is very likely that something went wrong somewhere in the preprocessing/analysis pipeline (e.g., stimulus onsets are incorrect).

Alright, we’re done setting up FEAT for our first-level analysis! One last thing: let’s save the setup (which we’ll check and grade).

We actually ran the first-level analysis for this participant already, so you don’t have to do this yourself! We’ll check it out in the next section. (The following cells all test your setup_feat.fsf file; as they all have hidden test, you may ignore them.)

''' Tests your setup_feat.fsf file: initial check + Data tab'''

import os

import os.path as op

par_dir = op.basename(op.dirname(op.abspath('.')))

if par_dir != 'solutions': # must be a student-account

fsf = 'setup_feat.fsf'

if not op.isfile(fsf):

raise ValueError("Couldn't find a 'setup_feat.fsf' file in your week_5 directory!")

print("Only hidden tests!")

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

Cell In[12], line 9

7 fsf = 'setup_feat.fsf'

8 if not op.isfile(fsf):

----> 9 raise ValueError("Couldn't find a 'setup_feat.fsf' file in your week_5 directory!")

11 print("Only hidden tests!")

ValueError: Couldn't find a 'setup_feat.fsf' file in your week_5 directory!

''' Tests your setup_feat.fsf file: Pre-stats tab'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your setup_feat.fsf file: Registration tab'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your setup_feat.fsf file: Stats tab (filt) '''

print("Only hidden tests!")

Only hidden tests!

''' Tests your setup_feat.fsf file: Stats tab (EVs) '''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 1)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 2)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 3)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 4)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 5)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 6)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab, (con 7)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Stats tab (F-test)'''

print("Only hidden tests!")

Only hidden tests!

''' Tests your feat_setup.fsf file: Post-stats tab'''

print("Only hidden tests!")

Only hidden tests!

Making sense of FEAT output#

When FEAT is running the analyis, it will create a results-directory (at the location you specified) ending in .feat, which contains all the information and files necessary for the analysis as well as the results. Fortunately, it also generates an informative report.html file with a nice summary of the preprocessing and analysis results. We already ran a first-level analysis like with the setup that you specified in the previous section, which we download below:

print("Starting download of FEAT directory (+- 285MB) ...\n")

!aws s3 sync --no-sign-request s3://openneuro.org/ds003965 {data_dir} --exclude "*" --include "derivatives/fsl/sub-03/flocBLOCKED/ses-1.feat/*"

print("\nDone!")

Starting download of FEAT directory (+- 285MB) ...

Completed 7 Bytes/~270.1 MiB (9 Bytes/s) with ~626 file(s) remaining (calculating...)

download: s3://openneuro.org/ds003965/derivatives/fsl/sub-03/flocBLOCKED/ses-1.feat/absbrainthresh.txt to ../../../../../../NI-edu-data/derivatives/fsl/sub-03/flocBLOCKED/ses-1.feat/absbrainthresh.txt

Completed 7 Bytes/~270.1 MiB (9 Bytes/s) with ~625 file(s) remaining (calculating...)

Completed 10.8 KiB/~271.3 MiB (13.8 KiB/s) with ~647 file(s) remaining (calculating...)