Machine learning (“decoding”) analyses

This week’s tutorial is about how to implement “decoding” analyses in Python!

The term “decoding” is often used to denote analyses that aim to predict a single experimental feature (which can be either within-subject or between-subject) based on patterns of neuroimaging data. Some more advanced techniques make it possible to predict more than one experimental feature at once (with Bayesian “reconstruction” techniques and “inverted encoding models”), but these are beyond the scope of this course. Here, we’ll focus on machine learning/statistical models and techniques that allow you to predict a single experimental feature.

We’ll make heavy use of the awesome scikit-learn library — the go-to library for machine learning in Python.

What you’ll learn: at the end of this tutorial, you will be able to:

use and implement feature-selection/extraction methods;

fit machine learning models and predict (new) samples;

implement cross-validation routines;

statistically evaluate model performance estimates.

Estimated time needed to complete: 8-12 hours

Credits: if you use scikit-learn in your research, please cite the corresponding paper

Data representation

Decoding analyses always need two sets of data: the brain patterns, which we’ll refer to as \(\mathbf{R}\) (for Response), and a single experimental feature that we want to predict, which we’ll refer to as \(\mathbf{S}\) (which traditionally refers to Stimulus). Note that \(\mathbf{S}\) could be a within-subject experimental factor (such as stimulus- or response-related factors) or a between-subject variable (such as age or depressed vs. healthy control). Moreover, the to-be-predicted variable* can be either continuous (e.g., reaction time) or categorical (e.g., object category), which are associated with different types of models: regression and classification models, respectively (more about this later). Note that the “direction” of analysis is the exact opposite of what is done in encoding analyses. In encoding analyses, we try to predict the brain data (dependent variable) using experimental features (independent variables), while in decoding analyses we try to predict an experimental feature (dependent variable) using a set of brain patterns (independent variables)! Essentially, though, encoding and decoding models are mathematically the same (they just use different inputs).
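To make the point about “direction” concrete, here is a minimal sketch with made-up data for a single hypothetical voxel (not the face-perception data used below). The very same least-squares estimator is used for an encoding model (predicting the voxel response from the feature) and a decoding model (predicting the feature from the voxel response); only the roles of the inputs are swapped. The helper `ols` is a hypothetical illustration, not part of any library.

```python
import numpy as np

# Hypothetical toy data: N trials, one voxel's response r and one experimental feature s
rng = np.random.default_rng(0)
N = 100
s = rng.normal(size=N)               # experimental feature
r = 2.0 * s + rng.normal(size=N)     # simulated voxel response

def ols(x, y):
    """Hypothetical helper: least squares with an intercept, returns [intercept, slope]."""
    X = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

beta_encoding = ols(s, r)  # encoding: predict the brain data from the feature
beta_decoding = ols(r, s)  # decoding: predict the feature from the brain data
print("Encoding slope:", beta_encoding[1])
print("Decoding slope:", beta_decoding[1])
```

Note that the two slopes are not simply each other’s inverse: each model minimizes the error in its own dependent variable.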

In the first part of this lab, we’ll work with simulated data. For now, we’ll assume that our data come from a simple face perception experiment in which participants viewed images of either male (condition: “M”) or female faces (condition: “F”) across four different fMRI runs. So, our experimental feature of interest is a categorical variable with two levels (“M” and “F”), making this a classification analysis (which is more common than regression in cognitive neuroscience). In each run, participants saw forty images (twenty for each condition) presented in a random order.

We’ll simulate the patterns (\(\mathbf{R}\)) and experimental feature (\(\mathbf{S}\), “M” vs. “F”) below. For now, we’ll generate random data (with some autocorrelation), for reasons that will become clear later. We’ll assume that we are restricting our analysis to a single region of interest containing 1000 voxels.

* Often, the to-be-predicted variable is called the “target” or “dependent variable”. Here, we’ll use the term “target”.

import numpy as np

from scipy.linalg import toeplitz

from niedu.utils.nipa import generate_labels

N_per_run = 40

M = 4 # nr of runs

K = 1000 # nr of voxels

# Generate random data drawn from a multivariate normal
# distribution with AR(1) noise (with phi = 0.85) to
# simulate autocorrelated noise in the estimated patterns,
# which is plausible for designs with relatively short ISIs

mu = np.zeros(N_per_run)

V = 0.85 ** toeplitz(np.arange(N_per_run))

# R_runs is a list of M arrays of shape N_per_run x K

R_runs = [np.random.multivariate_normal(mu, V, size=K).T for _ in range(M)]

# S_runs is a list of M arrays of shape N_per_run

# The custom generate_labels function creates slightly
# correlated labels

S_runs = [generate_labels(['M', 'F'], N_per_run / 2, [0.7, 0.3]) for _ in range(M)]

print("Example of patterns for run 1:\n", R_runs[0])

print("\nExample of target for run 1:\n", S_runs[0])

Example of patterns for run 1:

[[ 0.70248736 -0.94395733 -0.51906461 ... 1.02065335 -0.5481945

-0.70312707]

[ 0.63195884 -0.87853903 -0.30651633 ... 1.66633316 -0.44055865

-0.15091501]

[ 2.06738308 -0.981851 -0.90944086 ... 1.47832213 0.75926163

-0.4902048 ]

...

[-0.02752864 -0.34174816 -2.05763914 ... 0.30884591 -0.26293549

-0.86723688]

[ 0.62627232 -0.10885171 -3.02955439 ... -0.35126472 -0.94343858

-1.56204958]

[ 0.46080567 -0.029 -1.34808462 ... -0.55228256 -0.13795203

-0.63026681]]

Example of target for run 1:

['M', 'F', 'F', 'M', 'M', 'F', 'F', 'M', 'M', 'M', 'F', 'F', 'F', 'M', 'M', 'M', 'M', 'M', 'F', 'M', 'M', 'F', 'F', 'F', 'F', 'F', 'F', 'M', 'M', 'M', 'M', 'M', 'M', 'M', 'F', 'F', 'F', 'F', 'F', 'F']
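The covariance matrix V above encodes AR(1) noise: the covariance between trials \(i\) and \(j\) is \(\phi^{|i-j|}\), so neighbouring trials are strongly correlated. The sketch below checks this by correlating two adjacent trials across voxels; it assumes NumPy’s `default_rng` and uses more voxels than the simulation above, purely to get a stable estimate.

```python
import numpy as np
from scipy.linalg import toeplitz

phi = 0.85
N_per_run = 40
n_voxels = 5000  # more voxels than in the simulation above, for a stable estimate

# AR(1) covariance: V[i, j] = phi ** |i - j|
V = phi ** toeplitz(np.arange(N_per_run))
print(V[0, :4])  # first row: 1, 0.85, 0.7225, 0.614125

rng = np.random.default_rng(42)
R = rng.multivariate_normal(np.zeros(N_per_run), V, size=n_voxels).T  # trials x voxels

# The correlation between trial 1 and trial 2 (across voxels) should be close to phi
r_adjacent = np.corrcoef(R[0], R[1])[0, 1]
print(round(r_adjacent, 2))
```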

Alright, technically, we have everything we need for a decoding analysis. However, machine learning (ML) and statistical models often require all data to be represented numerically, so we need to convert our target (containing the values “M” and “F”) into a numeric format.

S_run1 = S_runs[0]

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nipa.week_2 import test_lab2num

test_lab2num(S_run1, S_run1_num)

While converting labels to numeric values can be done quite easily using standard Python, it gives us a nice excuse to introduce some scikit-learn functionality, specifically the LabelEncoder class, which allows you to encode your target labels into a numeric format. Let’s start by importing it (from the preprocessing module):

from sklearn.preprocessing import LabelEncoder

The way LabelEncoder is used is similar to some of the Nilearn and Nistats functionality you’ve seen. In short, you first need to initialize the object (with, optionally, some parameters), after which you can give it data to fit and transform. This pattern is something we’ll encounter a lot in this lab when working with scikit-learn.

Let’s initialize a LabelEncoder object below:

# LabelEncoder objects are not initialized with any parameters

lab_enc = LabelEncoder()

Now, let’s “fit” it on the labels of our first run:

lab_enc.fit(S_runs[0])


LabelEncoder()

Notice that the fit method doesn’t return anything useful (well, technically, it returns the object itself). Instead, when calling fit, it stores some parameters as attributes of the object, which are used later when calling transform. For the LabelEncoder specifically, it stores the unique conditions (often called classes in machine learning) in an attribute called classes_:

print(lab_enc.classes_)

['F' 'M']

In general, most “things” that are inferred or computed in the fit method of scikit-learn objects (and are needed later when calling transform) are stored in attributes with a trailing underscore (like classes_). We know, this all sounds incredibly trivial, but explaining these things in detail will give you a better understanding of how scikit-learn works (which will help a lot when dealing with more complicated functionality).
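A minimal sketch of this fit/attribute pattern, using a few hypothetical labels: before fitting, the classes_ attribute does not exist; fit returns the object itself and stores what it learned in classes_.

```python
from sklearn.preprocessing import LabelEncoder

enc = LabelEncoder()
print(hasattr(enc, "classes_"))  # False: nothing has been learned yet

returned = enc.fit(["M", "F", "F", "M"])
print(returned is enc)           # True: fit returns the object itself
print(enc.classes_)              # the classes learned during fit, sorted
```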

Finally, after fitting the LabelEncoder object, we can call the transform method to actually transform the labels:

S_run1_num = lab_enc.transform(S_runs[0])

print(S_run1_num)

[1 0 0 1 1 0 0 1 1 1 0 0 0 1 1 1 1 1 0 1 1 0 0 0 0 0 0 1 1 1 1 1 1 1 0 0 0

0 0 0]

Note that, unlike the fit method, the transform method actually returns something, i.e., the transformed labels. Also note that the numeric labels are assigned alphabetically (i.e., “F” gets assigned 0, “M” gets assigned 1).
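Because the mapping is stored in the fitted object, it can also be reversed. The sketch below (with hypothetical labels) uses LabelEncoder’s inverse_transform method to map numeric values back to the original labels, which can be handy when reporting predictions.

```python
from sklearn.preprocessing import LabelEncoder

enc = LabelEncoder()
y_num = enc.fit_transform(["M", "F", "F", "M"])
print(y_num)                          # [1 0 0 1]: 'F' -> 0, 'M' -> 1 (alphabetical)
print(enc.inverse_transform(y_num))   # ['M' 'F' 'F' 'M']: back to the original labels
```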

Like you’ve seen in the Nilearn notebook, we can often call fit and transform at once using the fit_transform method:

S_run1_num = lab_enc.fit_transform(S_runs[0])

print(S_run1_num)

[1 0 0 1 1 0 0 1 1 1 0 0 0 1 1 1 1 1 0 1 1 0 0 0 0 0 0 1 1 1 1 1 1 1 0 0 0

0 0 0]

Also, after the LabelEncoder has been fit, it can be reused on other data, i.e., you can call the transform method on new arrays. This is how many classes in scikit-learn are actually used (i.e., fit on a particular subset of data and then applied to another subset), as it allows for efficient cross-validation, a topic that we will discuss in detail later.
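A minimal sketch of fitting once and transforming a different array, with hypothetical labels standing in for two runs. It also shows that a fitted LabelEncoder refuses labels it did not see during fit (scikit-learn raises a ValueError), so it should be fit on data that contain all classes.

```python
from sklearn.preprocessing import LabelEncoder

enc = LabelEncoder().fit(["M", "F"])   # fit once, e.g. on the labels of one run
new_run = ["F", "F", "M"]              # hypothetical labels from another run
print(enc.transform(new_run))          # [0 0 1]: same mapping as before

# Labels that were not seen during fit cannot be encoded
unseen_label_rejected = False
try:
    enc.transform(["X"])
except ValueError as err:
    unseen_label_rejected = True
    print("ValueError:", err)
```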

''' Implement your ToDo here. '''

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nipa.week_2 import test_lab2num_all_runs

test_lab2num_all_runs(S_runs, lab_enc, S_runs_num)

Alright, now we have everything we need to start building our decoding pipeline!

Standardization

In addition to the “preprocessing” steps for pattern analyses discussed in last week’s lab, decoding additionally requires you to standardize the brain features (i.e., the columns in your pattern matrix) on which you fit your model. By “standardization”, we mean making sure that each feature (\(\mathbf{R}_{j}\) for column \(j\)) in your pattern matrix has zero (0) mean and unit (1) standard deviation, which can be achieved as follows for each feature \(j\):

\[\mathbf{R}_{j}^{\mathrm{norm}} = \frac{\mathbf{R}_{j} - \bar{\mathbf{R}_{j}}}{\hat{\sigma}(\mathbf{R}_{j})}\]

where \(\bar{\mathbf{R}_{j}}\) represents the mean of \(\mathbf{R}_{j}\) and \(\hat{\sigma}(\mathbf{R}_{j})\) represents the standard deviation of \(\mathbf{R}_{j}\). In other words, from each value in \(\mathbf{R}\), you subtract the mean of the column it belongs to and subsequently divide the result by the standard deviation of that column. This process is also known as z-scoring.

This standardization process is done for each brain feature (column) separately. Standardization is important for most ML/statistical models because it makes sure that each brain feature has the same scale, which often helps in efficiently estimating model parameters.

Importantly, when you have patterns from multiple runs (as is often the case in fMRI decoding), these patterns should also be independently standardized, even if you want to pool these patterns later on (see Lee & Kable, 2018). This is because some runs may yield patterns with a relatively higher mean or standard deviation across samples (for example, because participants start moving more towards the end of the experiment, leading to more noisy pattern estimates).

''' Implement your ToDo here. '''

R_run1 = R_runs[0]

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the ToDo above. '''

# ToThink: do you understand how we're testing your answer here?

np.testing.assert_array_almost_equal(R_run1_norm.mean(axis=0), np.zeros(R_run1.shape[1]))

np.testing.assert_array_almost_equal(R_run1_norm.std(axis=0), np.ones(R_run1.shape[1]))

print("Well done!")

We can do this similarly using the StandardScaler class from scikit-learn, which has the same fit/transform interface:

from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()

R_run1_norm = scaler.fit_transform(R_run1)

In the fitting process, the StandardScaler computed the feature-wise mean and standard deviation, which it stores in the mean_ and scale_ attributes:

print(scaler.mean_.shape)

print(scaler.scale_.shape)

(1000,)

(1000,)
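As a sanity check, the sketch below (on hypothetical random data) confirms that mean_ and scale_ are simply the column-wise mean and standard deviation, and that transform applies the z-scoring formula with them. Note that scale_ matches NumPy’s default std, which divides by \(N\) rather than \(N-1\).

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(2)
X = rng.normal(loc=5.0, scale=2.0, size=(40, 1000))  # hypothetical 40 trials x 1000 voxels

scaler = StandardScaler().fit(X)
# mean_ is the feature-wise mean; scale_ is the feature-wise SD (dividing by N)
print(np.allclose(scaler.mean_, X.mean(axis=0)))
print(np.allclose(scaler.scale_, X.std(axis=0)))
# transform applies (X - mean_) / scale_ to every feature
print(np.allclose(scaler.transform(X), (X - scaler.mean_) / scaler.scale_))
```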

For the initial run-wise standardization, we usually don’t want to cross-validate our standardization process (i.e., to, for example, fit the StandardScaler on run 1 and subsequently transform the other runs), for the reasons discussed before. As such, we need to call fit and transform on each run separately:

# Below, we use a "list comprehension" to loop across our runs

# to standardize each run separately!

R_runs_norm = [scaler.fit_transform(this_R) for this_R in R_runs]
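To see why run-wise standardization matters, the sketch below simulates two hypothetical runs in which run 2 has a higher mean and a larger spread (the numbers are arbitrary assumptions). Standardizing the pooled data leaves a run-specific offset in each run, whereas standardizing each run separately removes it.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(1)
# Two hypothetical runs; run 2 has a higher mean and more noise (e.g., more motion)
run1 = rng.normal(loc=0.0, scale=1.0, size=(40, 5))
run2 = rng.normal(loc=4.0, scale=2.0, size=(40, 5))

# Pooled: standardize after stacking the runs, so run-specific offsets remain
pooled = StandardScaler().fit_transform(np.vstack([run1, run2]))
print("Pooled, run means:", pooled[:40].mean(axis=0).round(2), pooled[40:].mean(axis=0).round(2))

# Run-wise: standardize each run separately, then stack
runwise = np.vstack([StandardScaler().fit_transform(run) for run in (run1, run2)])
print("Run-wise, run means:", runwise[:40].mean(axis=0).round(2), runwise[40:].mean(axis=0).round(2))
```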

We’re almost ready to start fitting models. Before we do so, we are going to concatenate our data (\(\mathbf{R}\) and \(S\)) across runs because we want to give our model as much data as possible!

# Load S_runs_num if you didn't manage to do the last ToDo

S_runs_num = np.load('S_runs_num.npy')

R_all = np.vstack(R_runs_norm) # stack vertically

S_all = np.concatenate(S_runs_num)

print("Shape R_all:", R_all.shape)

print("Shape S_all:", S_all.shape)

Shape R_all: (160, 1000)

Shape S_all: (160,)

Fitting models

When fitting decoding models, we assume that we can approximate our target variable (\(\mathbf{S}\)) as a function of the input data (\(\mathbf{R}\)):

\[\mathbf{S} \approx f(\mathbf{R})\]

Usually, the models used in pattern analyses assume linear functions (especially with relatively little data), i.e., functions that approximate the target using a linear combination of input variables (\(\mathbf{R}_{j}\)) weighted by parameters (\(\beta\)). Note that the univariate GLM often used in encoding models is such a linear model. Model fitting is the process of estimating parameters that minimize the discrepancy (error) between the predicted values (\(\hat{\mathbf{S}}\)) and the actual values (\(\mathbf{S}\)) of the target variable, both for regression (\(\mathbf{S}\) is continuous) and classification (\(\mathbf{S}\) is categorical) models. Models differ in how exactly they estimate their parameters, but the general process is the same (minimizing the error between predictions and target). In this course, we won’t go much into the differences across models (partly because, in practice, we found that performance doesn’t differ that much between models).
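For a fitted LogisticRegression, this linear combination can be inspected directly. The sketch below uses hypothetical data in which only the first voxel carries signal. It shows that decision_function equals the weighted sum of the features (with weights coef_) plus the intercept (intercept_), and that, for two classes, predict assigns class 1 wherever this score is positive.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(3)
R = rng.normal(size=(60, 10))                                # hypothetical 60 trials x 10 voxels
S = (R[:, 0] + 0.5 * rng.normal(size=60) > 0).astype(int)    # target driven by voxel 0

clf = LogisticRegression(solver='lbfgs').fit(R, S)

# The model's score for each trial: a weighted sum of the voxels plus an intercept
linear_pred = R @ clf.coef_.ravel() + clf.intercept_[0]
scores = clf.decision_function(R)
print(np.allclose(linear_pred, scores))  # True

# Discrete predictions: class 1 where the score is positive, class 0 otherwise
print(np.array_equal(clf.predict(R), (scores > 0).astype(int)))  # True
```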

Alright, let’s get to it. Below, we import the LogisticRegression class, which, despite its name, is a linear classification model.

from sklearn.linear_model import LogisticRegression

There are many “options” (often called “hyperparameters”) we can set upon initialization of a LogisticRegression object, but for now, we will only set the “solver” (for no other reason than that this will get rid of a warning during the fitting process):

# clf = CLassiFier

clf = LogisticRegression(solver='lbfgs')

Now, the fitting process using this model (or actually, any model in scikit-learn) is as simple as, guess what, calling the fit method! Unlike the LabelEncoder and StandardScaler that we discussed before, models in scikit-learn (including LogisticRegression) require two arguments when calling their fit method: X and y, which represent the input data (in our case: \(\mathbf{R}\)) and the target variable (in our case: \(\mathbf{S}\)):

# The text in the output cell is there because the
# fit method returns "itself" (you can just ignore this)

clf.fit(R_all, S_all)


LogisticRegression()

After fitting, the model stores the estimated parameters (\(\beta\)) as an array in the coef_ (“coefficients”, another term for parameters) attribute:

print("Shape of coef_:", clf.coef_.shape)

Shape of coef_: (1, 1000)

As you can see, the model estimated one parameter for each brain feature (column in \(\mathbf{R}\)). Now, unlike the previously discussed LabelEncoder and StandardScaler, scikit-learn models do not have a transform method; instead, they have a predict method, which takes a single input (a 2D array with observations) and generates discrete* predictions for this input. For now, we’ll call predict on the same data we’ve fit the model on:

* Some models, including the LogisticRegression model, have an additional method called predict_proba which returns probabilistic instead of discrete predictions.

preds = clf.predict(R_all)

print("Predictions for samples in R_all:\n", preds)

Predictions for samples in R_all:

[0 0 0 0 1 1 0 0 1 1 1 1 1 1 1 1 1 1 0 1 1 1 1 0 1 0 1 1 1 0 0 0 0 0 0 0 0

0 0 0 1 1 0 0 1 1 0 0 1 1 1 1 1 0 1 0 0 0 0 0 0 0 0 1 0 0 0 0 0 0 0 1 1 1

1 1 1 1 1 1 0 1 0 0 0 0 1 1 1 0 0 0 1 0 0 0 1 1 1 1 1 1 1 1 1 1 0 0 0 1 1

1 0 0 1 0 1 0 0 0 1 1 0 0 1 1 1 0 0 0 1 1 1 1 0 1 1 1 1 1 1 1 1 1 1 1 0 0

0 0 0 0 0 0 0 0 0 0 0 0]

Model evaluation

Alright, we have some predictions for our data! But how do we evaluate these predictions? This actually depends on whether you have a classification model (with categorical predictions) or a regression model (with continuous predictions). Because classification analyses are most popular in cognitive neuroscience (and our example data is categorical) and you are already familiar with some regression metrics such as \(R^2\) (discussed in the previous course), we’ll focus on model evaluation metrics for classification here.

Metrics for discrete predictions

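There are many different metrics to evaluate the predictions of classification models. The most well-known (but not necessarily always appropriate) metric for discrete predictions is accuracy, which is defined as follows:

\[\mathrm{accuracy} = \frac{\mathrm{number\ of\ correct\ predictions}}{\mathrm{total\ number\ of\ predictions}}\]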

For accuracy, the best possible score is 1 (predict all samples correctly) and “chance level” performance (i.e., the expected score when randomly guessing) is, in general, \(\frac{1}{\mathrm{Number\ of\ classes}}\), so for our example with two classes (“M” and “F”), it is 0.5.
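A quick simulation illustrates the chance level: random guesses against hypothetical labels with two classes give an accuracy close to 0.5. The sketch uses scikit-learn’s accuracy_score, which computes the proportion of correct predictions.

```python
import numpy as np
from sklearn.metrics import accuracy_score

rng = np.random.default_rng(4)
n_trials, n_classes = 10_000, 2
y_true = rng.integers(0, n_classes, size=n_trials)   # hypothetical true labels
y_guess = rng.integers(0, n_classes, size=n_trials)  # random guesses

# Random guessing hovers around 1 / n_classes
acc_chance = accuracy_score(y_true, y_guess)
print(acc_chance)
```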

''' Implement your ToDo here. '''

# YOUR CODE HERE

raise NotImplementedError()

''' Tests the above ToDo. '''

from niedu.tests.nipa.week_2 import test_acc

test_acc(preds, S_all, acc)

Metrics for probabilistic predictions

Some classifiers allow for probabilistic (instead of discrete) predictions. For those classifiers, an additional method called predict_proba exists, which outputs a probability for each class. So, in a two-class classification setting, the predict_proba method will not give a single discrete prediction (i.e., either 0 or 1) but a probability distribution over classes (e.g., 0.92 for class 0 and 0.08 for class 1).

The LogisticRegression model from scikit-learn supports probabilistic prediction. In general, if we give it all \(N\) trials for a target variable with \(M\) classes, it will output an \(N \times M\) array with probabilities:

probas = clf.predict_proba(R_all)

# let's print the first five trials

print(np.round(probas[:5, :], 3))

[[0.994 0.006]

[0.998 0.002]

[0.996 0.004]

[0.989 0.011]

[0.013 0.987]]
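The probabilistic and discrete predictions are closely related. In the sketch below (hypothetical data), each row of predict_proba sums to 1, and predict returns the class with the highest probability.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(8)
R = rng.normal(size=(100, 5))                          # hypothetical patterns
S = (R[:, 0] + rng.normal(size=100) > 0).astype(int)   # hypothetical binary target

clf = LogisticRegression(solver='lbfgs').fit(R, S)
probas = clf.predict_proba(R)                          # N x M (here 100 x 2)

print(probas.shape)                                    # (100, 2)
print(np.allclose(probas.sum(axis=1), 1))              # each row is a probability distribution
# predict picks the class with the highest probability
print(np.array_equal(clf.predict(R), clf.classes_[probas.argmax(axis=1)]))
```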

One often-used performance metric for probabilistic predictions is the “Area Under the ROC curve” (often abbreviated as AUROC or just AUC). Fortunately, the scikit-learn library contains implementations of many performance metrics, including AUROC (called roc_auc_score in scikit-learn), which can be imported from the metrics module:

from sklearn.metrics import roc_auc_score

AUROC is an excellent metric to use for probabilistic predictions, but there’s one caveat: when evaluating probabilistic predictions (formatted as an \(N \times M\) matrix), it needs the true target values (dependent variable) in a one-hot-encoded format. One-hot-encoding (OHE) is a technique that transforms an \(N \times 1\) vector with \(M\) classes into an \(N \times M\) binary matrix in which each column indicates, for every trial, whether it belongs to one particular class.

You might know this technique under the name “dummy (en)coding”.
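For example, a hypothetical target with \(N = 4\) trials and \(M = 2\) classes would be one-hot-encoded as follows, where column \(m\) contains a 1 for each trial that belongs to class \(m\) and a 0 otherwise:

\[
\begin{bmatrix} 0 \\ 1 \\ 1 \\ 0 \end{bmatrix}
\rightarrow
\begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 0 & 1 \\ 1 & 0 \end{bmatrix}
\]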

''' Implement the optional ToDo here. '''

def one_hot_encode(y):
    ''' One-hot-encodes a 1D target vector.

    Parameters
    ----------
    y : numpy array
        1D target vector with N observations and M classes

    Returns
    -------
    An NxM numpy array
    '''
    # YOUR CODE HERE
    raise NotImplementedError()

''' Tests the above ToDo. '''

# Test 1

y = np.array([0, 1])

out = one_hot_encode(y)

ans = np.eye(2)

np.testing.assert_array_equal(ans, out)

# Test 2

y = np.array([1, 2, 3, 2, 2, 1])

out = one_hot_encode(y)

ans = np.array([[1, 0, 0], [0, 1, 0], [0, 0, 1], [0, 1, 0], [0, 1, 0], [1, 0, 0]])

np.testing.assert_array_equal(ans, out)

# Test 3

y = np.array([3, 2, 1])

out = one_hot_encode(y)

ans = np.rot90(np.eye(3))

np.testing.assert_array_equal(ans, out)

print("Well done!")

While the above ToDo was a nice way to practice your Python skills, we nonetheless recommend using the OneHotEncoder class from scikit-learn to one-hot-encode your target vector. It uses the fit/transform syntax you are familiar with by now. Importantly, as the OneHotEncoder is, in practice, often used to one-hot-encode predictors (independent variables), it expects a 2D array (not a 1D vector). So, when one-hot-encoding a 1D target variable, you can add a singleton axis (with np.newaxis) to make it work:

from sklearn.preprocessing import OneHotEncoder

ohe = OneHotEncoder(sparse_output=False) # we don't want a "sparse" output

S_all_ohe = ohe.fit_transform(S_all[:, np.newaxis])

print(S_all_ohe.shape)

(160, 2)

Finally, we can use the roc_auc_score function to compute our model performance. Like any metric implementation in scikit-learn, it is called as follows:

score = metric(true_labels, predicted_labels)

where true_labels and predicted_labels are either 1D vectors of length \(N\) (in case of discrete predictions) or 2D \(N \times M\) arrays (in case of probabilistic predictions). Note that by default these metrics output a single score (often the average of the class-specific scores); some (but not all) metrics (including the roc_auc_score) allow the function to return a class-specific score by setting the optional argument average to None:

# Omit average=None to get a single (average) score

scores = roc_auc_score(S_all_ohe, probas, average=None)

print(scores)

[1. 1.]

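Another option for evaluating probabilistic predictions is a so-called pseudo \(R^2\) metric. One of these is “Tjur’s pseudo \(R^2\)”, which is defined, for a given class, as the difference between the average (across trials) predicted probability of that class for trials belonging to it and the average predicted probability of that class for trials belonging to the other class(es). So, suppose we’re dealing with a two-class classification problem (with class 0 and class 1), and the average probability for class 1 of trials belonging to class 1 is 0.9, while the average probability for class 1 of trials belonging to class 0 is 0.3; then Tjur’s pseudo \(R^2\) score for class 1 is \(0.9-0.3=0.6\). Formally, Tjur’s pseudo \(R^2\) score for class \(m\) is defined as:

\[R^{2}_{\mathrm{Tjur},\,m} = \frac{1}{N_{m}}\sum_{i=1}^{N_{m}} p^{m}_{i} - \frac{1}{N_{\neg m}}\sum_{i=1}^{N_{\neg m}} p^{\neg m}_{i}\]

where \(p^{m}_{i}\) is the predicted probability of class \(m\) for the \(N_{m}\) trials belonging to class \(m\), and \(p^{\neg m}_{i}\) is the predicted probability of class \(m\) for the complementary set of \(N_{\neg m}\) trials not belonging to class \(m\).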

Complete the function tjur_r2 below that takes two arguments — target (a 1D array with target labels) and probas (a 2D array with probabilities) — and should output an array of length \(M\) with Tjur’s pseudo \(R^2\) scores for the \(M\) classes in the target array.

''' Implement your ToDo here. '''

def tjur_r2(target, probas):
    ''' Computes Tjur's R2 score for all classes in `target`.

    Parameters
    ----------
    target : numpy array
        A 1D array with numerical targets
    probas : numpy array
        A 2D array with target probabilities

    Outputs
    -------
    A numpy array of length M with R2 scores for all M classes
    '''
    # YOUR CODE HERE
    raise NotImplementedError()

''' Tests the ToDo above. '''

y = np.array([0, 0, 1, 1])

probas = np.array([[0.9, 0.1], [0.95, 0.05], [0.3, 0.7], [0.4, 0.6]])

ans = tjur_r2(y, probas)

np.testing.assert_array_almost_equal(ans, np.array([0.575, 0.575]))

y = np.array([1, 1, 3, 3, 2, 2])

probas = np.array([[0.8, 0.1, 0.1], [0.7, 0.2, 0.1], [0.4, 0.0, 0.6], [0.3, 0.1, 0.6], [0.1, 0.5, 0.4], [0.2, 0.6, 0.2]])

ans = tjur_r2(y, probas)

np.testing.assert_array_almost_equal(ans, np.array([0.5, 0.45, 0.4]))

print("Well done!")

Cross-validation

If you did the previous ToDos correctly, you should have found that the accuracy was 1 (the maximum possible score)! Amazing! But wait, how is this possible? We generated random data, right?

So, what is the issue here? Well, we fitted the model on the same data that we generated predictions for! While this is common practice for many statistical models in psychology and neuroscience (including standard univariate “activation-based” fMRI models), it is not advisable for decoding models. The reason is that decoding models often have many more predictors (i.e., brain features) than observations (i.e., trials). As a consequence, the model has a hard time figuring out what is “signal” and what is “noise”, which often causes it to capitalize on spurious correlations between the data (\(\mathbf{R}\)) and the target (\(\mathbf{S}\)). The result is that the model will be overfitted, and its performance estimate will be an overly optimistic estimate of generalization performance.
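The sketch below illustrates the problem with pure noise, using hypothetical data with far more features than trials (mimicking our simulation): the model scores (near) perfectly on the data it was fit on, but close to chance on newly generated data.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

rng = np.random.default_rng(6)
n_features = 1000
# Pure noise: the labels are independent of the patterns (hypothetical data)
R_fit = rng.normal(size=(40, n_features))
S_fit = rng.integers(0, 2, size=40)
R_new = rng.normal(size=(200, n_features))
S_new = rng.integers(0, 2, size=200)

clf = LogisticRegression(solver='lbfgs').fit(R_fit, S_fit)
acc_same = accuracy_score(S_fit, clf.predict(R_fit))  # evaluated on the data used for fitting
acc_new = accuracy_score(S_new, clf.predict(R_new))   # evaluated on unseen data
print(acc_same, acc_new)
```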

To obtain an unbiased (that is, not overly optimistic) estimate of generalization performance, machine-learning-based analyses often use cross-validation. Cross-validation is, in its broadest definition, the process of estimating analysis parameters on a different subset of your data than the data you want to generate predictions for (and thus base generalization performance on). By “analysis parameters”, we do not only mean the parameters of your statistical model (\(\hat{\beta}\)); they may also include parameters estimated during preprocessing and feature transformations (which we’ll talk about later). Cross-validation (if done properly) allows you to derive an unbiased estimate of generalization performance, i.e., how well your analysis would generalize to a new dataset. Importantly, cross-validation does not solve overfitting; it only detects it. To prevent overfitting, you can employ regularization or feature selection/extraction techniques, the latter of which are briefly discussed in this tutorial.

Train vs. test set partitioning

Usually, the subset of data you use to fit your analysis parameters on is called the train set and the subset you evaluate your model predictions on is often called the test set. Assuming that each observation (i.e., row in \(\mathbf{R}\)) is independent from all other observations, any spurious correlation that is capitalized upon in the train set will not generalize to the test set!

There are different cross-validation schemes (i.e., ways to partition your data into a train and test set). For the example in the next cell, we’ll use a simple hold-out scheme, in which we reserve 50% of the data for the test set (note that this could have been a different percentage). We’ll discuss more intricate cross-validation schemes, such as K-fold, in the next section.

R_train = R_all[0::2, :] # all even samples

S_train = S_all[0::2]

R_test = R_all[1::2, :] # all odd samples

S_test = S_all[1::2]

After splitting the data into a train and test set, we have introduced a “problem” however: the features within the train and test set may not have 0 mean and unit (1) variance anymore! Given that the features were properly standardized across all samples in our simulated fMRI dataset, this is unlikely to be a problem for our classifier. It is still good practice to make sure your train set is properly standardized. So, before fitting our classifier, let’s standardize the train set:

R_train_norm = scaler.fit_transform(R_train)

Now, we can fit our model on the standardized train set …

clf.fit(R_train_norm, S_train)


LogisticRegression()

Before predicting the test set, however, we need to decide whether to standardize the test set independently or to cross-validate our previously estimated standardization parameters (the feature-wise mean and standard deviation). Although opinions differ on this topic (see e.g. this excellent article), if we want a truly unbiased estimate of generalization performance, we should cross-validate our standardization procedure in addition to our model. So, to cross-validate our standardization procedure, we do the following for each feature \(j\) of our test set:

\[\mathbf{R}_{j}^{\mathrm{test,norm}} = \frac{\mathbf{R}_{j}^{\mathrm{test}} - \bar{\mathbf{R}_{j}^{\mathrm{train}}}}{\hat{\sigma}(\mathbf{R}_{j}^{\mathrm{train}})}\]

# Note that we're *not* calling fit on the test set, i.e.,

# we're cross-validating our standardization procedure!

R_test_norm = scaler.transform(R_test)
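The sketch below (with hypothetical train and test sets) confirms that calling transform on the test set uses the train-set mean and standard deviation, and that the resulting test features therefore do not have exactly zero mean.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(7)
R_tr = rng.normal(loc=1.0, scale=2.0, size=(80, 50))  # hypothetical train set
R_te = rng.normal(loc=1.0, scale=2.0, size=(80, 50))  # hypothetical test set

scaler = StandardScaler().fit(R_tr)   # parameters estimated on the train set only
R_te_norm = scaler.transform(R_te)    # ... and applied to the test set

# Equivalent to using the train-set mean and SD for every test-set feature
manual = (R_te - R_tr.mean(axis=0)) / R_tr.std(axis=0)
print(np.allclose(R_te_norm, manual))

# The test set is therefore only approximately standardized
print("Largest absolute test-set feature mean:", np.abs(R_te_norm.mean(axis=0)).max())
```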

So, now we can cross-validate our model and derive predictions for our test set:

preds = clf.predict(R_test_norm)

print("Predictions for our test set samples:\n", preds)

Predictions for our test set samples:

[0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 1 1 0 1 1 1 1 1 0 0 0 0 0 0 0 0 1

1 1 1 0 0 0 1 0 1 1 1 1 1 1 1 0 0 0 0 0 1 0 0 0 1 1 1 0 1 1 1 1 1 1 1 1 0

0 0 0 0 0 0]

These predictions (preds) are made independently of the fitting process. Now, let’s evaluate the model performance on these predictions; this time, we’re going to be lazy and use the accuracy_score function from the metrics module in scikit-learn:

from sklearn.metrics import accuracy_score

acc_cv = accuracy_score(S_test, preds)

print("Cross-validated accuracy:", acc_cv)

Cross-validated accuracy: 0.725

As you can see, accuracy is not perfect (1.0) anymore, but it is still much higher than you’d expect on random data (for which chance-level performance would be 0.5).

In hold-out cross-validation (which we demonstrated previously), you use your train set only for fitting and your test set only to evaluate model predictions. In other words, you only fit and predict once, but on different subsets of your data. If you have a big dataset (i.e., many samples), your test set can be relatively large, and thus cross-validated accuracy on the test set will probably be a good estimate of how well our model will generalize to future/other data. However, if you have a relatively small dataset, you will probably have a relatively small test-set. If you then estimate cross-validated accuracy on this test-set, the chance of just getting a relatively good (or bad) score by coincidence is quite high (see e.g. Varoquaux et al., 2017)! In other words, the estimate of cross-validation accuracy is not really robust. Fortunately, there are ways to increase robustness of cross-validation accuracy estimates; one of them is by using K-fold cross-validation instead of hold-out cross-validation, in which you divide your dataset into \(K\) folds, which you iteratively use as train and test set.

Can you think of a (practical) reason to prefer hold-out cross-validation over K-fold cross-validation?

YOUR ANSWER HERE

As fMRI data-sets often contain few samples (trials/subjects), K-fold cross-validation is often used. Instead of writing our own K-fold cross-validation scheme, we’ll use some of scikit-learn’s functionality. Specifically, we are going to use the StratifiedKFold class from scikit-learn’s model_selection module. Click the highlighted link above and read through the manual to see how it works.

Importantly, if you’re dealing with a classification analysis, always use StratifiedKFold (instead of the regular KFold), because this version makes sure that each fold contains the same proportion of the different classes (here: 0 and 1).
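To see what stratification buys you, the sketch below compares the proportion of class 1 in each test fold for KFold and StratifiedKFold (neither shuffled) on a hypothetical target sorted by class. Without stratification, some folds contain only one class.

```python
import numpy as np
from sklearn.model_selection import KFold, StratifiedKFold

# Hypothetical target sorted by class: 50 zeros followed by 50 ones
S = np.array([0] * 50 + [1] * 50)
R = np.zeros((100, 3))  # the feature values don't affect how these folds are made

props = {}
for name, cv in [("KFold", KFold(n_splits=5)), ("StratifiedKFold", StratifiedKFold(n_splits=5))]:
    # Proportion of class 1 in each test fold
    props[name] = [float(S[test_idx].mean()) for _, test_idx in cv.split(R, S)]
    print(name, props[name])
```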

Anyway, enough talking. Let’s initialize a StratifiedKFold object with, let’s say, 5 folds:

from sklearn.model_selection import StratifiedKFold

# They call folds 'splits' in scikit-learn

skf = StratifiedKFold(n_splits=5)

Alright, we have a StratifiedKFold object now, but not yet any indices for our folds (i.e. indices to split our \(\mathbf{R}\) and \(S\) into different subsets). To do that, we need to call the split method, which takes two inputs: the data (\(\mathbf{R}\)) and the target (\(S\)):

folds = skf.split(R_all, S_all)

Now, we created the variable folds which is, technically, a generator object, but just think of it as a type of list (with indices) which is specialized for looping over it. Each entry in folds is a tuple with two elements: an array with train indices and an array with test indices. Let’s demonstrate that*:

* Note that you can only run the cell below once. After running it, the folds generator object is “exhausted”, and you’ll need to call skf.split(R_all, S_all) again in the above cell.
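A minimal sketch of this behaviour with hypothetical data: iterating over the generator a second time yields nothing, while converting it to a list once lets you reuse the folds. It also checks that every trial ends up in a test set exactly once.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

S = np.array([0, 1] * 10)  # hypothetical target with 20 trials
R = np.zeros((20, 2))      # hypothetical patterns

folds = StratifiedKFold(n_splits=5).split(R, S)
n_first_pass = len(list(folds))   # the first pass consumes the generator
n_second_pass = len(list(folds))  # the generator is now exhausted
print(n_first_pass, n_second_pass)

# Converting to a list once lets you iterate over the folds as often as you like
folds = list(StratifiedKFold(n_splits=5).split(R, S))
test_idx_all = np.sort(np.concatenate([test_idx for _, test_idx in folds]))
print(np.array_equal(test_idx_all, np.arange(20)))  # each trial is tested exactly once
```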

for i, fold in enumerate(folds):
    print("Processing fold %i" % (i + 1))
    # Here, we unpack fold (a tuple) to get the train and test indices
    train_idx, test_idx = fold
    print("Train indices:", train_idx)
    print("Test indices:", test_idx, end='\n\n')

Processing fold 1
Train indices: [ 24 26 27 28 36 37 38 39 40 41 42 43 44 45 46 47 48 49
50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67
68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85
86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103
104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121
122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139
140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157
158 159]
Test indices: [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23
25 29 30 31 32 33 34 35]

Processing fold 2
Train indices: [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17
18 19 20 21 22 23 25 29 30 31 32 33 34 35 62 64 65 66
67 68 69 70 72 73 74 75 76 77 78 79 80 81 82 83 84 85
86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103
104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121
122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139
140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157
158 159]
Test indices: [24 26 27 28 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55
56 57 58 59 60 61 63 71]

Processing fold 3
Train indices: [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17
18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35
36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53
54 55 56 57 58 59 60 61 63 71 93 94 95 99 100 101 102 103
104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121
122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139
140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157
158 159]
Test indices: [62 64 65 66 67 68 69 70 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87
88 89 90 91 92 96 97 98]

Processing fold 4
Train indices: [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17
18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35
36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53
54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71
72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89
90 91 92 96 97 98 126 129 130 131 132 133 134 135 136 137 138 139
140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157
158 159]
Test indices: [ 93 94 95 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113
114 115 116 117 118 119 120 121 122 123 124 125 127 128]

Processing fold 5
Train indices: [ 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17
18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35
36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53
54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71
72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89
90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107
108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125
127 128]
Test indices: [126 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145
146 147 148 149 150 151 152 153 154 155 156 157 158 159]
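Because folds is a one-shot generator, a second loop over it silently does nothing. The sketch below illustrates this on hypothetical toy data (R_toy and S_toy stand in for R_all and S_all) and shows one simple workaround: wrapping skf.split(...) in list() so the folds can be reused.

```python
import numpy as np
from sklearn.model_selection import StratifiedKFold

# Hypothetical toy data standing in for R_all and S_all
rng = np.random.default_rng(0)
R_toy = rng.normal(size=(40, 3))
S_toy = np.repeat([0, 1], 20)

skf_toy = StratifiedKFold(n_splits=5)
folds_toy = skf_toy.split(R_toy, S_toy)

n_first_pass = sum(1 for _ in folds_toy)   # consumes the generator (5 folds)
n_second_pass = sum(1 for _ in folds_toy)  # generator is exhausted (0 folds)
print(n_first_pass, n_second_pass)

# Materializing the folds in a list makes them reusable across loops
fold_list = list(skf_toy.split(R_toy, S_toy))
print(len(fold_list))
```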

In a proper analysis, you would fit a model on the train set, predict the labels for the test set, and compute the cross-validated accuracy for each of the \(K\) folds separately. Note that, in the case of K-fold cross-validation, you are technically estimating \(K\) different models (i.e., the estimated model parameters are likely slightly different across folds). For most decoding purposes, this is not necessarily a problem, as we’re often not interested in the model parameters but in the (cross-validated) model performance. As such, people usually compute the fold-wise model performance and subsequently average these values to get an average model score, which is exactly what you’re going to do in the next ToDo.

Loop across the folds of a 4-fold StratifiedKFold object (the test cell below expects this object to be named skf_4f). In every iteration, divide the data into a train and test set, apply (cross-validated) standardization, fit the model (you can reuse the LogisticRegression object from before) on the train set, predict the test set, and compute the accuracy. Store the accuracy for each iteration. After the loop, you should have 4 cross-validated accuracy scores. Average these and store the result (a single number) in a variable named acc_cv_average.

''' Implement the ToDo here. '''
# YOUR CODE HERE
raise NotImplementedError()

''' Tests the above ToDo. '''
from niedu.tests.nipa.week_2 import test_skf_loop_with_seed
test_skf_loop_with_seed(R_all, S_all, scaler, clf, skf_4f, acc_cv_average)

Sidenote: scikit-learn Pipelines

As you might have noticed in the previous ToDo, it takes quite a few lines of code to fully cross-validate your standardization step and model: fit your scaler on the train set, transform the train set, transform the test set, fit your model on the train set, and finally predict your test set. This cross-validation routine only becomes more complicated and cumbersome when you add extra preprocessing or transformation procedures to it (as we’ll do in the next section). As such, let us introduce one of the most amazing features of scikit-learn: Pipelines.

Scikit-learn Pipelines allow you to “bundle together” a sequence of analysis steps (which may include preprocessing and feature transformation operations) that usually ends in a model (e.g., a LogisticRegression object). Then, you can fit all steps on a particular subset of data by calling the Pipeline’s fit method and subsequently call the predict method on another subset of data, which will automatically cross-validate every step in your analysis pipeline. Instead of initializing Pipeline objects directly, we’ll use the convenience function make_pipeline:

from sklearn.pipeline import make_pipeline

The make_pipeline function accepts an arbitrary number of arguments, which should all be either preprocessing or feature transformation objects (i.e., so-called transformer objects) or model objects (i.e., so-called estimator objects), such as a LogisticRegression object. Note that you can only have a single model object in your pipeline, which should be the last step.

Let’s create a very simple pipeline that involves standardization and a logistic regression model (like you implemented in the previous ToDo):

from sklearn.preprocessing import StandardScaler
from sklearn.linear_model import LogisticRegression

# We re-initialize these objects for clarity
scaler = StandardScaler()
clf = LogisticRegression(solver='lbfgs')

# The make_pipeline function returns a Pipeline object
pipe = make_pipeline(scaler, clf)
print(pipe)

Pipeline(steps=[('standardscaler', StandardScaler()),
('logisticregression', LogisticRegression())])

Now, let’s use the data from the simple hold-out split from before to demonstrate fitting and cross-validating our complete pipeline. Just like with a normal model, we can call the fit and predict methods to do so:

R_train = R_all[0::2, :]
R_test = R_all[1::2, :]
S_train = S_all[0::2]
S_test = S_all[1::2]

# First, let's fit *all* the steps
pipe.fit(R_train, S_train)

# And now cross-validate *all* the steps
preds = pipe.predict(R_test)

Awesome, right? Using pipelines saves you many lines of code and allows you to easily cross-validate entire pipelines. You’ll practice with pipelines in the upcoming ToDo.
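To check that a pipeline really does the same thing as the manual routine from the previous ToDo, the sketch below compares both approaches on hypothetical toy data (all variable names ending in _toy are illustrative). Each step is fit on the train set only and then applied to the test set, so the predictions should be identical.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: 80 samples x 10 features, two classes (40 each)
rng = np.random.default_rng(42)
R_toy = rng.normal(size=(80, 10))
S_toy = np.repeat([0, 1], 40)
R_train_toy, R_test_toy = R_toy[0::2], R_toy[1::2]
S_train_toy, S_test_toy = S_toy[0::2], S_toy[1::2]

# Manual route: fit the scaler and model on the train set, apply both to the test set
scaler_toy = StandardScaler().fit(R_train_toy)
clf_toy = LogisticRegression(solver='lbfgs')
clf_toy.fit(scaler_toy.transform(R_train_toy), S_train_toy)
preds_manual = clf_toy.predict(scaler_toy.transform(R_test_toy))

# Pipeline route: the same steps bundled into a single object
pipe_toy = make_pipeline(StandardScaler(), LogisticRegression(solver='lbfgs'))
pipe_toy.fit(R_train_toy, S_train_toy)
preds_pipe = pipe_toy.predict(R_test_toy)

print("Identical predictions:", np.array_equal(preds_manual, preds_pipe))
```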

Now, back to cross-validation routines. One notable variant of K-fold cross-validation is repeated stratified K-fold cross-validation, in which the cross-validation loop is repeated several times with different (random) folds. This way, the cross-validated model performance estimates usually become more stable (i.e., less variance). (Of course, scikit-learn contains a RepeatedStratifiedKFold class.)
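A minimal sketch of repeated stratified K-fold, assuming hypothetical noise data (R_toy, S_toy). It also uses scikit-learn’s cross_val_score convenience function, which runs the fit/predict/score loop for every fold of the given cross-validation object; with 5 splits and 10 repeats you get one score per fold per repetition.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: pure noise, so scores should hover around chance
rng = np.random.default_rng(1)
R_toy = rng.normal(size=(60, 5))
S_toy = np.repeat([0, 1], 30)

pipe_toy = make_pipeline(StandardScaler(), LogisticRegression())
rskf = RepeatedStratifiedKFold(n_splits=5, n_repeats=10, random_state=0)

# One accuracy score per fold per repetition: 5 * 10 = 50 scores
scores = cross_val_score(pipe_toy, R_toy, S_toy, cv=rskf, scoring='accuracy')
print(len(scores), scores.mean().round(2))
```

Averaging over all 50 scores, rather than over the 5 scores of a single K-fold run, is what makes the final estimate less dependent on one particular random partitioning of the data.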

Another notable cross-validation scheme, especially for fMRI-based decoding analyses, is group-based cross-validation, in which folds are created based on a particular grouping variable. In fMRI-based decoding analyses, this type of cross-validation is often applied to cross-validate models across runs. Specifically, the leave-one-run-out technique is often used, in which a model is fit on all trials except those from a single run (the train set) and is then evaluated on the trials of the left-out run (the test set).

This functionality is implemented in the LeaveOneGroupOut class in scikit-learn:

from sklearn.model_selection import LeaveOneGroupOut

logo = LeaveOneGroupOut()

This cross-validation object is very similar to the other objects (e.g., StratifiedKFold) you have seen, except that when calling its split method, you need to provide an additional parameter, groups, which should be an array/list of integers denoting the different groups:

folds = logo.split(data, target, groups)

For our dataset, we can create a groups-variable based on the different runs as follows:

groups = np.concatenate([[i] * N_per_run for i in range(M)])
print(groups)

[0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0
0 0 0 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1
1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2
2 2 2 2 2 2 2 2 2 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3
3 3 3 3 3 3 3 3 3 3 3 3]
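To see what LeaveOneGroupOut does with such a groups variable, the sketch below uses a hypothetical, smaller layout (4 runs of 6 trials; names ending in _toy are illustrative) and only inspects the indices, without fitting any model. In every fold, all test trials come from one run, and that run never appears in the train set.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut

# Hypothetical toy layout: 4 runs with 6 trials each
M_toy, N_per_run_toy = 4, 6
groups_toy = np.concatenate([[i] * N_per_run_toy for i in range(M_toy)])
R_toy = np.zeros((groups_toy.size, 2))         # dummy brain patterns
S_toy = np.tile([0, 1], groups_toy.size // 2)  # balanced labels within each run

logo_toy = LeaveOneGroupOut()
left_out_runs = []
for train_idx, test_idx in logo_toy.split(R_toy, S_toy, groups_toy):
    test_runs = np.unique(groups_toy[test_idx])
    train_runs = np.unique(groups_toy[train_idx])
    print("Test run:", test_runs, "| train runs:", train_runs)
    left_out_runs.append(int(test_runs[0]))
```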

Create a new Pipeline object with the aforementioned RobustScaler and RidgeClassifier objects (which you have to import yourself) using the make_pipeline function;

Use the previously defined logo object to create a loop across folds, in which you should cross-validate your entire pipeline and compute each fold’s cross-validated accuracy.

After the loop, average the four accuracy values and store this in a variable named acc_cv_logo.

''' Implement your ToDo here. '''
# YOUR CODE HERE
raise NotImplementedError()

''' Tests the above ToDo. '''
from niedu.tests.nipa.week_2 import test_logo_loop
test_logo_loop(R_all, S_all, logo, groups, acc_cv_logo)

YOUR ANSWER HERE

Class imbalance and model performance revisited

Before moving on to more exciting aspects of decoding pipelines, let’s discuss class imbalance: the situation in which not all classes within your target variable have the same number of samples. Class imbalance can have a big impact on your classification model (note: this is not relevant for regression models, as they don’t have classes!). It is not uncommon in decoding analyses, especially when you don’t have control over the feature of interest, such as response-based features (e.g., whether the participant pressed left or right) or between-subject variables (e.g., when dealing with clinical populations, which cannot always be perfectly balanced for practical reasons).

To illustrate this, let’s look at what happens with random data and an imbalanced target variable. We’ll simulate some random data a couple of times (like our previously used data, it contains no “signal” at all, so you’d expect 50% accuracy) and calculate the cross-validated accuracy each time.

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score

# Simulate random (there is no effect!) data
N = 100  # samples
K = 3  # brain features
ratio01 = 0.8  # proportion of samples belonging to class 0
clf = LogisticRegression(solver='lbfgs')
iters = 10

for i in range(iters):
    R_random = np.random.normal(0, 1, size=(N, K))

    # Simulate random target with prespecified imbalance ('ratio01')
    S0 = np.zeros(int(np.round(N * ratio01)))  # 80% is class 0
    S1 = np.ones(int(np.round(N * (1 - ratio01))))  # 20% is class 1
    S_random = np.concatenate((S0, S1))

    # Now, let's split it in a train- and test-set
    R_train = R_random[::2, :]
    R_test = R_random[1::2, :]
    S_train = S_random[::2]
    S_test = S_random[1::2]

    clf.fit(R_train, S_train)
    S_pred = clf.predict(R_test)
    this_acc = accuracy_score(S_test, S_pred)
    print("Accuracy: %.2f" % this_acc)

Accuracy: 0.72
Accuracy: 0.76
Accuracy: 0.80
Accuracy: 0.74
Accuracy: 0.74
Accuracy: 0.80
Accuracy: 0.80
Accuracy: 0.80
Accuracy: 0.80
Accuracy: 0.80

When running the cell above, you should find that the model consistently yields strong above-chance performance, which shouldn’t happen because the data that we simulated are just random noise!

YOUR ANSWER HERE

As you can see, the model “learns” to just predict the majority class (the class with the most samples)! In a way, class imbalance also provides the classifier with a source of “information” that it can use to derive accurate (but theoretically meaningless) predictions.

Imbalanced datasets therefore need a different evaluation metric than accuracy. Fortunately, scikit-learn has many more performance metrics you can use, including metrics that “correct” for the (potential) bias due to class imbalance (such as the F1-score, the ROC-AUC score, the balanced accuracy score, and Cohen’s kappa).
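One way to make the “predict the majority class” strategy explicit is scikit-learn’s DummyClassifier, which ignores the brain data entirely. The sketch below (not part of the original analysis; names ending in _toy are illustrative) reuses the 80/20 layout and even/odd split from the simulation above. Plain accuracy rewards this data-blind strategy with 0.80, while balanced accuracy (the average recall across classes) drops to chance level, 0.50.

```python
import numpy as np
from sklearn.dummy import DummyClassifier
from sklearn.metrics import accuracy_score, balanced_accuracy_score

# Same class layout as the simulation above: 80 samples of class 0, 20 of class 1
S_toy = np.concatenate((np.zeros(80), np.ones(20)))
R_toy = np.random.default_rng(0).normal(size=(S_toy.size, 3))  # pure noise

R_train_toy, R_test_toy = R_toy[::2], R_toy[1::2]
S_train_toy, S_test_toy = S_toy[::2], S_toy[1::2]

# A "model" that ignores the data and always predicts the most frequent class
dummy = DummyClassifier(strategy='most_frequent').fit(R_train_toy, S_train_toy)
S_pred_toy = dummy.predict(R_test_toy)

acc_dummy = accuracy_score(S_test_toy, S_pred_toy)
bacc_dummy = balanced_accuracy_score(S_test_toy, S_pred_toy)
print("Accuracy:          %.2f" % acc_dummy)
print("Balanced accuracy: %.2f" % bacc_dummy)
```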

Let’s check out what happens with our performance estimate if we use a different (‘class-imbalance-aware’) metric, the “ROC-AUC-score” we discussed previously, which should take care of the bias induced by imbalance.

from sklearn.metrics import roc_auc_score

for i in range(iters):
    R_random = np.random.normal(0, 1, size=(N, K))

    # Simulate random target with prespecified imbalance ('ratio01')
    S0 = np.zeros(int(np.round(N * ratio01)))  # 80% is class 0
    S1 = np.ones(int(np.round(N * (1 - ratio01))))  # 20% is class 1
    S_random = np.concatenate((S0, S1))

    # Now, let's split it in a train- and test-set
    R_train = R_random[::2, :]
    R_test = R_random[1::2, :]
    S_train = S_random[::2]
    S_test = S_random[1::2]

    clf.fit(R_train, S_train)
    S_pred = clf.predict(R_test)
    # Note: although it is printed as "Accuracy" below, this is the ROC-AUC score
    this_acc = roc_auc_score(S_test, S_pred)
    print("Accuracy: %.2f" % this_acc)

Accuracy: 0.50
Accuracy: 0.50
Accuracy: 0.50
Accuracy: 0.47
Accuracy: 0.49
Accuracy: 0.51
Accuracy: 0.50
Accuracy: 0.50
Accuracy: 0.54
Accuracy: 0.50

Much better! In addition to roc_auc_score, there are other metrics (such as balanced_accuracy_score and f1_score) that deal appropriately with class imbalance.
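Note that the simulation above passes hard class predictions (the output of predict) to roc_auc_score. For a binary problem this is allowed, but ROC-AUC computed from 0/1 labels is mathematically identical to balanced accuracy. ROC-AUC is more commonly computed from continuous scores, such as the output of decision_function or predict_proba, so that the full ranking of test samples is used. The sketch below, on hypothetical noise data with the same 80/20 layout, shows both variants.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import balanced_accuracy_score, roc_auc_score

# Hypothetical imbalanced noise data, same layout as the simulations above
rng = np.random.default_rng(2)
S_toy = np.concatenate((np.zeros(80), np.ones(20)))
R_toy = rng.normal(size=(S_toy.size, 3))
R_train_toy, R_test_toy = R_toy[::2], R_toy[1::2]
S_train_toy, S_test_toy = S_toy[::2], S_toy[1::2]

clf_toy = LogisticRegression().fit(R_train_toy, S_train_toy)
S_pred_toy = clf_toy.predict(R_test_toy)             # hard class labels (0/1)
S_score_toy = clf_toy.decision_function(R_test_toy)  # continuous scores

# With hard labels, ROC-AUC reduces to balanced accuracy
auc_hard = roc_auc_score(S_test_toy, S_pred_toy)
bacc = balanced_accuracy_score(S_test_toy, S_pred_toy)
# With continuous scores, ROC-AUC uses the full ranking of the test samples
auc_cont = roc_auc_score(S_test_toy, S_score_toy)

print("ROC-AUC (labels): %.2f | balanced accuracy: %.2f | ROC-AUC (scores): %.2f"
      % (auc_hard, bacc, auc_cont))
```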

However, using class-imbalance-aware metrics only makes sure that the model performance estimate is unbiased (i.e., is not affected by class imbalance); it doesn’t prevent the model from actually “learning” the useless “information” related to class imbalance (instead of learning the true/useful signal in the data)! In our previous examples, which used completely random (null) data, this is not a problem, but it is a problem when the data actually contain some effect. One way to counter this is the class_weight parameter that is available in most scikit-learn classification models. Setting this parameter to “balanced” weights samples from the minority class more strongly than samples from the majority class, forcing the model to learn information that is independent of class frequency. Below, we initialize a logistic regression model with this setting enabled and show that it effectively reduces the influence of class imbalance:

clf = LogisticRegression(solver='lbfgs', class_weight='balanced')

for i in range(iters):
    R_random = np.random.normal(0, 1, size=(N, K))

    # Simulate random target with prespecified imbalance ('ratio01')
    S0 = np.zeros(int(np.round(N * ratio01)))  # 80% is class 0
    S1 = np.ones(int(np.round(N * (1 - ratio01))))  # 20% is class 1
    S_random = np.concatenate((S0, S1))

    # Now, let's split it in a train- and test-set
    R_train = R_random[::2, :]
    R_test = R_random[1::2, :]
    S_train = S_random[::2]
    S_test = S_random[1::2]

    clf.fit(R_train, S_train)
    S_pred = clf.predict(R_test)
    this_acc = accuracy_score(S_test, S_pred)
    print("Accuracy: %.2f" % this_acc)

Accuracy: 0.64
Accuracy: 0.52
Accuracy: 0.58
Accuracy: 0.60
Accuracy: 0.50
Accuracy: 0.50
Accuracy: 0.48
Accuracy: 0.48
Accuracy: 0.52
Accuracy: 0.52
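Under the hood, class_weight='balanced' gives each sample a weight that is inversely proportional to the frequency of its class, computed as n_samples / (n_classes * np.bincount(y)). The helper compute_class_weight from sklearn.utils.class_weight returns these weights directly. For the 80/20 split used above, each minority-class sample counts four times as much as a majority-class sample (2.5 vs. 0.625).

```python
import numpy as np
from sklearn.utils.class_weight import compute_class_weight

# Same 80/20 class layout as in the simulations above
S_toy = np.concatenate((np.zeros(80), np.ones(20)))
classes = np.unique(S_toy)

# 'balanced' weights as computed by scikit-learn
weights = compute_class_weight(class_weight='balanced', classes=classes, y=S_toy)

# The same weights computed by hand: n_samples / (n_classes * count per class)
manual_weights = S_toy.size / (classes.size * np.bincount(S_toy.astype(int)))
print(weights, manual_weights)
```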

# Running this will remove all numpy arrays up to this point
# from memory
%reset -f array

Feature selection/extraction

We have now discussed cross-validation, which allows you to report an unbiased estimate of generalization performance. However, especially when your data contain many brain features (e.g., voxels), your model might still perform poorly simply because it has a hard time distinguishing signal from noise. One way to “help” your model a little is to apply feature selection and/or extraction techniques, which are meant to reduce the number of features to a smaller subset that, hopefully, contains more signal (and less noise).

Feature reduction can be achieved in two principled ways:

feature extraction: transform your features into a set of lower-dimensional components;

feature selection: select a subset of features

Examples of feature extraction are PCA (i.e. transform voxels to orthogonal components) and averaging features within brain regions from an atlas.
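Averaging within atlas regions takes only a few lines of NumPy. The sketch below assumes a hypothetical atlas_labels vector that assigns each voxel (column of the pattern matrix) to a region; in practice such labels would come from an atlas resampled to your data, which is not shown here. The result has one column per region instead of one per voxel.

```python
import numpy as np

# Hypothetical data: 6 trials x 8 voxels, and an atlas label for each voxel (3 regions)
rng = np.random.default_rng(0)
R_toy = rng.normal(size=(6, 8))
atlas_labels = np.array([0, 0, 0, 1, 1, 2, 2, 2])

# For each region, average the pattern values across the voxels in that region
regions = np.unique(atlas_labels)
R_regions = np.column_stack(
    [R_toy[:, atlas_labels == region].mean(axis=1) for region in regions]
)
print(R_regions.shape)
```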

Examples of feature selection are ROI-analysis (i.e. restricting your patterns to a specific ROI in the brain) and “univariate feature selection” (UFS). This latter method is an often-used data-driven method to select features based upon their univariate difference, which is basically like using a traditional whole-brain mass-univariate analysis to select potentially useful features!

YOUR ANSWER HERE

Fortunately, scikit-learn has a bunch of feature selection/extraction objects for us to use. These objects (“transformers” in scikit-learn lingo) work similarly to models (“estimators”): they also have a fit(R, S) method, in which for example the univariate differences (in UFS) or PCA-components (in PCA-driven feature extraction) are computed. Then, instead of having a predict(R) method, transformers have a transform(R) method.
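As a sketch of this fit/transform interface, the example below applies univariate feature selection (SelectKBest with the ANOVA F-test f_classif) and PCA-based feature extraction to hypothetical toy data; the numbers of kept features (k=10) and components (n_components=5) are arbitrary choices for illustration. For simplicity, both transformers are fit on all toy samples here; in a real decoding analysis they should be fit on the train set only, for example as steps in a pipeline.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif

# Hypothetical toy data: 40 trials x 200 "voxels", two balanced classes
rng = np.random.default_rng(3)
R_toy = rng.normal(size=(40, 200))
S_toy = np.repeat([0, 1], 20)

# Univariate feature selection: keep the 10 voxels with the highest ANOVA F-value
ufs = SelectKBest(score_func=f_classif, k=10)
ufs.fit(R_toy, S_toy)              # computes one F-value per voxel
R_selected = ufs.transform(R_toy)  # keeps only the selected columns

# Feature extraction: project the voxels onto 5 principal components
pca = PCA(n_components=5)
pca.fit(R_toy)                     # PCA does not use the target
R_components = pca.transform(R_toy)

print(R_selected.shape, R_components.shape)
```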

Before going on, let’s actually load in some real data. We’ll use the data from a single “face” run from subject 03. We already estimated the single-trial patterns on fMRIPrep-preprocessed data for you using Nilearn (you can check out the code here). Let’s download these patterns:

import os

data_dir = os.path.join(os.path.expanduser('~'), 'NI-edu-data')

print("Downloading patterns from sub-03, ses-1, run 1 (+- 40 MB) ...")
!aws s3 sync --no-sign-request s3://openneuro.org/ds003965 {data_dir} --exclude "*" --include "derivatives/pattern_estimation/sub-03/ses-1/*task-face*run-1*"
print("\nDone!")

Downloading patterns from sub-03, ses-1, run 1 (+- 40 MB) ...

download: s3://openneuro.org/ds003965/derivatives/pattern_estimation/sub-03/ses-1/figures/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_corr.png to ../../../../../../NI-edu-data/derivatives/pattern_estimation/sub-03/ses-1/figures/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_corr.png
download: s3://openneuro.org/ds003965/derivatives/pattern_estimation/sub-03/ses-1/model/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_matrix.tsv to ../../../../../../NI-edu-data/derivatives/pattern_estimation/sub-03/ses-1/model/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_matrix.tsv
download: s3://openneuro.org/ds003965/derivatives/pattern_estimation/sub-03/ses-1/model/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-model_r2.nii.gz to ../../../../../../NI-edu-data/derivatives/pattern_estimation/sub-03/ses-1/model/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-model_r2.nii.gz
download: s3://openneuro.org/ds003965/derivatives/pattern_estimation/sub-03/ses-1/figures/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_matrix.png to ../../../../../../NI-edu-data/derivatives/pattern_estimation/sub-03/ses-1/figures/sub-03_ses-1_task-face_run-1_space-MNI152NLin2009cAsym_desc-design_matrix.png
download: s3://openneuro.org/ds003965/derivatives/pattern_estimation/sub-03/ses-1/patterns/sub-03_ses-1_task-face_run-1_events.tsv to ../../../../../../NI-edu-data/derivatives/pattern_estimation/sub-03/ses-1/patterns/sub-03_ses-1_task-face_run-1_events.tsv

Completed 51.4 MiB/286.0 MiB (42.6 MiB/s) with 3 file(s) remaining

Completed 51.6 MiB/286.0 MiB (42.7 MiB/s) with 3 file(s) remaining

Completed 51.9 MiB/286.0 MiB (42.9 MiB/s) with 3 file(s) remaining

Completed 52.1 MiB/286.0 MiB (43.1 MiB/s) with 3 file(s) remaining

Completed 52.4 MiB/286.0 MiB (43.2 MiB/s) with 3 file(s) remaining

Completed 52.6 MiB/286.0 MiB (43.4 MiB/s) with 3 file(s) remaining

Completed 52.9 MiB/286.0 MiB (43.6 MiB/s) with 3 file(s) remaining

Completed 53.1 MiB/286.0 MiB (43.7 MiB/s) with 3 file(s) remaining

Completed 53.4 MiB/286.0 MiB (43.9 MiB/s) with 3 file(s) remaining

Completed 53.6 MiB/286.0 MiB (44.0 MiB/s) with 3 file(s) remaining

Completed 53.9 MiB/286.0 MiB (44.1 MiB/s) with 3 file(s) remaining

Completed 54.1 MiB/286.0 MiB (44.3 MiB/s) with 3 file(s) remaining

Completed 54.4 MiB/286.0 MiB (44.5 MiB/s) with 3 file(s) remaining

Completed 54.6 MiB/286.0 MiB (44.7 MiB/s) with 3 file(s) remaining

Completed 54.9 MiB/286.0 MiB (44.8 MiB/s) with 3 file(s) remaining

Completed 55.1 MiB/286.0 MiB (45.0 MiB/s) with 3 file(s) remaining

Completed 55.4 MiB/286.0 MiB (45.2 MiB/s) with 3 file(s) remaining

Completed 55.6 MiB/286.0 MiB (45.4 MiB/s) with 3 file(s) remaining

Completed 55.9 MiB/286.0 MiB (45.5 MiB/s) with 3 file(s) remaining

Completed 56.1 MiB/286.0 MiB (45.7 MiB/s) with 3 file(s) remaining

Completed 56.4 MiB/286.0 MiB (45.9 MiB/s) with 3 file(s) remaining

Completed 56.6 MiB/286.0 MiB (46.1 MiB/s) with 3 file(s) remaining

Completed 56.9 MiB/286.0 MiB (46.2 MiB/s) with 3 file(s) remaining

Completed 57.1 MiB/286.0 MiB (46.4 MiB/s) with 3 file(s) remaining

Completed 57.4 MiB/286.0 MiB (46.5 MiB/s) with 3 file(s) remaining

Completed 57.6 MiB/286.0 MiB (46.7 MiB/s) with 3 file(s) remaining

Completed 57.9 MiB/286.0 MiB (46.9 MiB/s) with 3 file(s) remaining

Completed 58.1 MiB/286.0 MiB (47.0 MiB/s) with 3 file(s) remaining

Completed 58.4 MiB/286.0 MiB (47.2 MiB/s) with 3 file(s) remaining

Completed 58.6 MiB/286.0 MiB (47.4 MiB/s) with 3 file(s) remaining

Completed 58.9 MiB/286.0 MiB (47.5 MiB/s) with 3 file(s) remaining

Completed 59.1 MiB/286.0 MiB (47.5 MiB/s) with 3 file(s) remaining

Completed 59.4 MiB/286.0 MiB (47.6 MiB/s) with 3 file(s) remaining

Completed 59.6 MiB/286.0 MiB (47.8 MiB/s) with 3 file(s) remaining

Completed 59.9 MiB/286.0 MiB (48.0 MiB/s) with 3 file(s) remaining

Completed 60.1 MiB/286.0 MiB (48.1 MiB/s) with 3 file(s) remaining

Completed 60.4 MiB/286.0 MiB (48.3 MiB/s) with 3 file(s) remaining

Completed 60.6 MiB/286.0 MiB (48.4 MiB/s) with 3 file(s) remaining

Completed 60.9 MiB/286.0 MiB (48.6 MiB/s) with 3 file(s) remaining

Completed 61.1 MiB/286.0 MiB (48.8 MiB/s) with 3 file(s) remaining

Completed 61.4 MiB/286.0 MiB (48.9 MiB/s) with 3 file(s) remaining

Completed 61.6 MiB/286.0 MiB (49.1 MiB/s) with 3 file(s) remaining

Completed 61.9 MiB/286.0 MiB (49.2 MiB/s) with 3 file(s) remaining

Completed 62.1 MiB/286.0 MiB (49.3 MiB/s) with 3 file(s) remaining

Completed 62.4 MiB/286.0 MiB (49.5 MiB/s) with 3 file(s) remaining

Completed 62.6 MiB/286.0 MiB (49.7 MiB/s) with 3 file(s) remaining

Completed 62.9 MiB/286.0 MiB (49.9 MiB/s) with 3 file(s) remaining

Completed 63.1 MiB/286.0 MiB (50.0 MiB/s) with 3 file(s) remaining

Completed 63.4 MiB/286.0 MiB (50.2 MiB/s) with 3 file(s) remaining

Completed 63.6 MiB/286.0 MiB (50.4 MiB/s) with 3 file(s) remaining

Completed 63.9 MiB/286.0 MiB (50.6 MiB/s) with 3 file(s) remaining

Completed 64.1 MiB/286.0 MiB (50.7 MiB/s) with 3 file(s) remaining

Completed 64.4 MiB/286.0 MiB (50.9 MiB/s) with 3 file(s) remaining

Completed 64.6 MiB/286.0 MiB (51.0 MiB/s) with 3 file(s) remaining

Completed 64.9 MiB/286.0 MiB (51.2 MiB/s) with 3 file(s) remaining

Completed 65.1 MiB/286.0 MiB (51.4 MiB/s) with 3 file(s) remaining

Completed 65.4 MiB/286.0 MiB (51.5 MiB/s) with 3 file(s) remaining

Completed 65.6 MiB/286.0 MiB (51.6 MiB/s) with 3 file(s) remaining

Completed 65.9 MiB/286.0 MiB (51.7 MiB/s) with 3 file(s) remaining

Completed 66.1 MiB/286.0 MiB (51.9 MiB/s) with 3 file(s) remaining

Completed 66.4 MiB/286.0 MiB (52.1 MiB/s) with 3 file(s) remaining

Completed 66.6 MiB/286.0 MiB (52.2 MiB/s) with 3 file(s) remaining

Completed 66.9 MiB/286.0 MiB (52.4 MiB/s) with 3 file(s) remaining

Completed 67.1 MiB/286.0 MiB (52.6 MiB/s) with 3 file(s) remaining

Completed 67.4 MiB/286.0 MiB (52.7 MiB/s) with 3 file(s) remaining

Completed 67.6 MiB/286.0 MiB (52.9 MiB/s) with 3 file(s) remaining

Completed 67.9 MiB/286.0 MiB (53.1 MiB/s) with 3 file(s) remaining

Completed 68.1 MiB/286.0 MiB (53.2 MiB/s) with 3 file(s) remaining

Completed 68.4 MiB/286.0 MiB (53.4 MiB/s) with 3 file(s) remaining

Completed 68.6 MiB/286.0 MiB (53.5 MiB/s) with 3 file(s) remaining

Completed 68.9 MiB/286.0 MiB (53.7 MiB/s) with 3 file(s) remaining

Completed 69.1 MiB/286.0 MiB (53.9 MiB/s) with 3 file(s) remaining

Completed 69.4 MiB/286.0 MiB (54.0 MiB/s) with 3 file(s) remaining

Completed 69.6 MiB/286.0 MiB (54.1 MiB/s) with 3 file(s) remaining

Completed 69.9 MiB/286.0 MiB (54.3 MiB/s) with 3 file(s) remaining

Completed 70.1 MiB/286.0 MiB (54.5 MiB/s) with 3 file(s) remaining

Completed 70.4 MiB/286.0 MiB (54.6 MiB/s) with 3 file(s) remaining

Completed 70.6 MiB/286.0 MiB (54.7 MiB/s) with 3 file(s) remaining

Completed 70.9 MiB/286.0 MiB (54.9 MiB/s) with 3 file(s) remaining

Completed 71.1 MiB/286.0 MiB (55.0 MiB/s) with 3 file(s) remaining

Completed 71.4 MiB/286.0 MiB (55.2 MiB/s) with 3 file(s) remaining

Completed 71.6 MiB/286.0 MiB (55.4 MiB/s) with 3 file(s) remaining

Completed 71.9 MiB/286.0 MiB (55.5 MiB/s) with 3 file(s) remaining

Completed 72.1 MiB/286.0 MiB (55.6 MiB/s) with 3 file(s) remaining

Completed 72.4 MiB/286.0 MiB (55.8 MiB/s) with 3 file(s) remaining

Completed 72.6 MiB/286.0 MiB (55.9 MiB/s) with 3 file(s) remaining

Completed 72.9 MiB/286.0 MiB (56.1 MiB/s) with 3 file(s) remaining

Completed 73.1 MiB/286.0 MiB (56.2 MiB/s) with 3 file(s) remaining

Completed 73.4 MiB/286.0 MiB (56.4 MiB/s) with 3 file(s) remaining

Completed 73.6 MiB/286.0 MiB (56.5 MiB/s) with 3 file(s) remaining

Completed 73.9 MiB/286.0 MiB (56.6 MiB/s) with 3 file(s) remaining

Completed 74.1 MiB/286.0 MiB (56.8 MiB/s) with 3 file(s) remaining

Completed 74.4 MiB/286.0 MiB (56.9 MiB/s) with 3 file(s) remaining

Completed 74.6 MiB/286.0 MiB (57.1 MiB/s) with 3 file(s) remaining

Completed 74.9 MiB/286.0 MiB (57.3 MiB/s) with 3 file(s) remaining

Completed 75.1 MiB/286.0 MiB (57.5 MiB/s) with 3 file(s) remaining

Completed 75.4 MiB/286.0 MiB (57.6 MiB/s) with 3 file(s) remaining

Completed 75.6 MiB/286.0 MiB (57.8 MiB/s) with 3 file(s) remaining

Completed 75.9 MiB/286.0 MiB (57.9 MiB/s) with 3 file(s) remaining

Completed 76.1 MiB/286.0 MiB (58.1 MiB/s) with 3 file(s) remaining

Completed 76.4 MiB/286.0 MiB (58.2 MiB/s) with 3 file(s) remaining

Completed 76.6 MiB/286.0 MiB (58.4 MiB/s) with 3 file(s) remaining

Completed 76.9 MiB/286.0 MiB (58.5 MiB/s) with 3 file(s) remaining

Completed 77.1 MiB/286.0 MiB (58.6 MiB/s) with 3 file(s) remaining

Completed 77.4 MiB/286.0 MiB (58.8 MiB/s) with 3 file(s) remaining

Completed 77.6 MiB/286.0 MiB (58.9 MiB/s) with 3 file(s) remaining

Completed 77.9 MiB/286.0 MiB (59.0 MiB/s) with 3 file(s) remaining

Completed 78.1 MiB/286.0 MiB (59.1 MiB/s) with 3 file(s) remaining

Completed 78.4 MiB/286.0 MiB (59.2 MiB/s) with 3 file(s) remaining

Completed 78.6 MiB/286.0 MiB (59.3 MiB/s) with 3 file(s) remaining

Completed 78.9 MiB/286.0 MiB (59.5 MiB/s) with 3 file(s) remaining

Completed 79.1 MiB/286.0 MiB (59.6 MiB/s) with 3 file(s) remaining

Completed 79.4 MiB/286.0 MiB (59.8 MiB/s) with 3 file(s) remaining

Completed 79.6 MiB/286.0 MiB (59.9 MiB/s) with 3 file(s) remaining

Completed 79.9 MiB/286.0 MiB (60.0 MiB/s) with 3 file(s) remaining

Completed 80.1 MiB/286.0 MiB (60.2 MiB/s) with 3 file(s) remaining

Completed 80.4 MiB/286.0 MiB (60.3 MiB/s) with 3 file(s) remaining

Completed 80.6 MiB/286.0 MiB (60.4 MiB/s) with 3 file(s) remaining

Completed 80.9 MiB/286.0 MiB (60.6 MiB/s) with 3 file(s) remaining

Completed 81.1 MiB/286.0 MiB (60.7 MiB/s) with 3 file(s) remaining

Completed 81.4 MiB/286.0 MiB (60.9 MiB/s) with 3 file(s) remaining

Completed 81.6 MiB/286.0 MiB (61.0 MiB/s) with 3 file(s) remaining

Completed 81.9 MiB/286.0 MiB (61.1 MiB/s) with 3 file(s) remaining

Completed 82.1 MiB/286.0 MiB (61.3 MiB/s) with 3 file(s) remaining

Completed 82.4 MiB/286.0 MiB (61.4 MiB/s) with 3 file(s) remaining

Completed 82.6 MiB/286.0 MiB (61.6 MiB/s) with 3 file(s) remaining

Completed 82.9 MiB/286.0 MiB (61.7 MiB/s) with 3 file(s) remaining

Completed 83.1 MiB/286.0 MiB (61.9 MiB/s) with 3 file(s) remaining

Completed 83.4 MiB/286.0 MiB (61.9 MiB/s) with 3 file(s) remaining

Completed 83.6 MiB/286.0 MiB (62.1 MiB/s) with 3 file(s) remaining

Completed 83.9 MiB/286.0 MiB (62.2 MiB/s) with 3 file(s) remaining

Completed 84.1 MiB/286.0 MiB (62.3 MiB/s) with 3 file(s) remaining

Completed 84.4 MiB/286.0 MiB (62.5 MiB/s) with 3 file(s) remaining

Completed 84.6 MiB/286.0 MiB (62.6 MiB/s) with 3 file(s) remaining

Completed 84.9 MiB/286.0 MiB (62.8 MiB/s) with 3 file(s) remaining

Completed 85.1 MiB/286.0 MiB (62.9 MiB/s) with 3 file(s) remaining

Completed 85.4 MiB/286.0 MiB (63.1 MiB/s) with 3 file(s) remaining

Completed 85.6 MiB/286.0 MiB (63.2 MiB/s) with 3 file(s) remaining

Completed 85.9 MiB/286.0 MiB (63.3 MiB/s) with 3 file(s) remaining

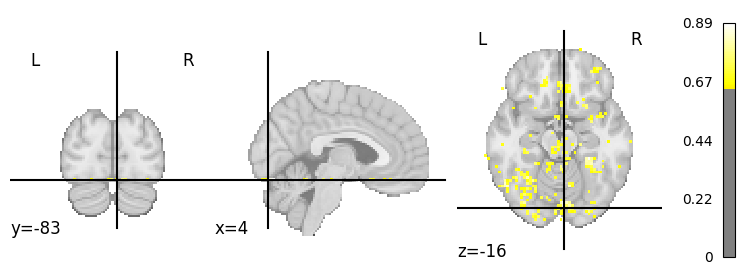

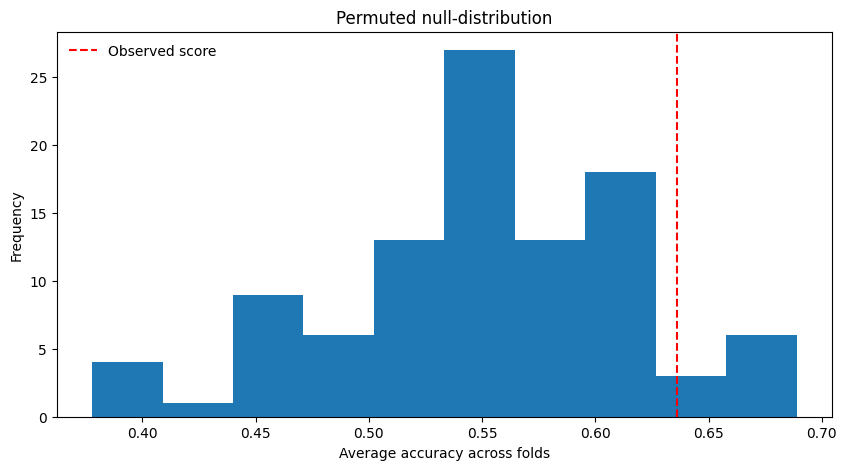